Engineering · 8 MIN READ · BRITTON MANAHAN · AUG 18, 2020 · TAGS: Alert / Cloud security / Framework / Get technical / Managed security

If you’ve read a post or two here on our EXE Blog, it shouldn’t come as a surprise that we’re big on automation.

That’s because we want to help analysts determine more efficiently, quickly and accurately whether an alert requires additional investigation. Automating repetitive tasks not only establishes standards, it also removes monotony and cognitive load from the decision-making process. And we have the data to back that up. (Check out our blog post about how we used automation to reduce the median time it takes to investigate and report suspicious login activity by 75 percent.)

Understanding why we do it might be easy.

But what about how we do it? Let’s dive into that.

We never automate until we fully understand the manual process. We do that through the Expel Workbench, which records every action an analyst takes when investigating an alert. We can inspect these activities, look for common patterns and bring a data-driven focus to our automation.

In this post, we’ll dig into how we automated enrichments for Amazon Web Services (AWS) alerts.

Fun fact: this was the first task I was given when I started working at Expel. (Hey, I’m Britton, a senior detection and response analyst here at Expel.)

We’ll explore the logic deployed by our automated AWS IAM enrichments. I’ll also share our approach to developing AWS enrichments and how we implemented the enrichment workflow.

You’ll walk away with some insights into particular AWS intricacies and how you can implement your own AWS enrichments.

Automating AWS IAM enrichments

When we create these new enrichments, we first need to define the questions an analyst will need to answer when determining whether an AWS alert is worthy of additional investigation.

Here are the questions we came up with:

- If the alert was generated from an assumed role, who assumed it?

- Did the source user assume any roles around the time of the alert?

- What AWS services has the user historically interacted with?

- Has the user performed any interesting activities recently?

- Has this activity happened for this user before?

All of these questions can be answered by investigating CloudTrail logs, which maintain a historical record of actions taken in an AWS account.

Expel collects, stores and indexes most CloudTrail logs for our AWS customers. This supports custom AWS alerting and keeps the logs readily available for querying during triage and investigations. Note that we also collect, store and index GuardDuty logs for generating Expel alerts.

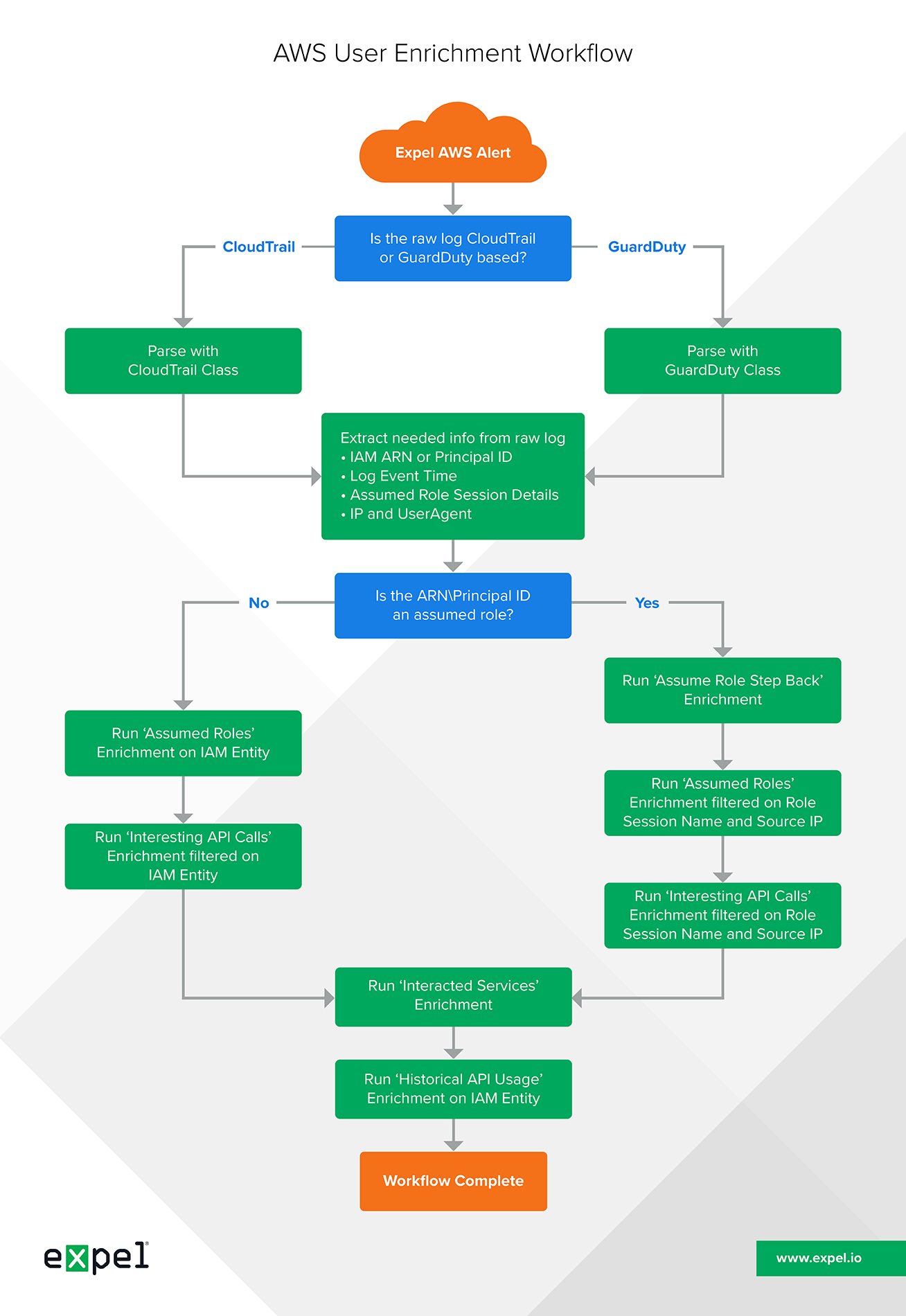

These questions all feed into an enrichment workflow (see the downloadable diagram at the end of this post) that helps our team make quick and smart decisions when triaging alerts. You’ll notice that this workflow supports AWS alerts generated from either CloudTrail or GuardDuty logs.

Now I’ll walk you through how we approach answering each of these questions and share my thoughts on what you should keep in mind when creating each enrichment.

If the alert IAM entity is an assumed role, what’s the assuming IAM entity?

If an AWS alert was generated by an assumed role, it’s important to know the IAM principal that assumed it (yes, role-chaining is a thing) and any relevant details related to the role assumption activity.

In the life cycle of an AWS compromise, a threat actor may gain access to and use several IAM users and roles. Roles are used in AWS to delegate and separate permissions and help support a least privilege security model. A threat actor’s access might begin with an initial user that is used to assume roles and execute privilege escalation in order to gain access to additional users and roles.

It’s critical, but not always simple, to know the full scope of IAM entities wielded by an attacker.

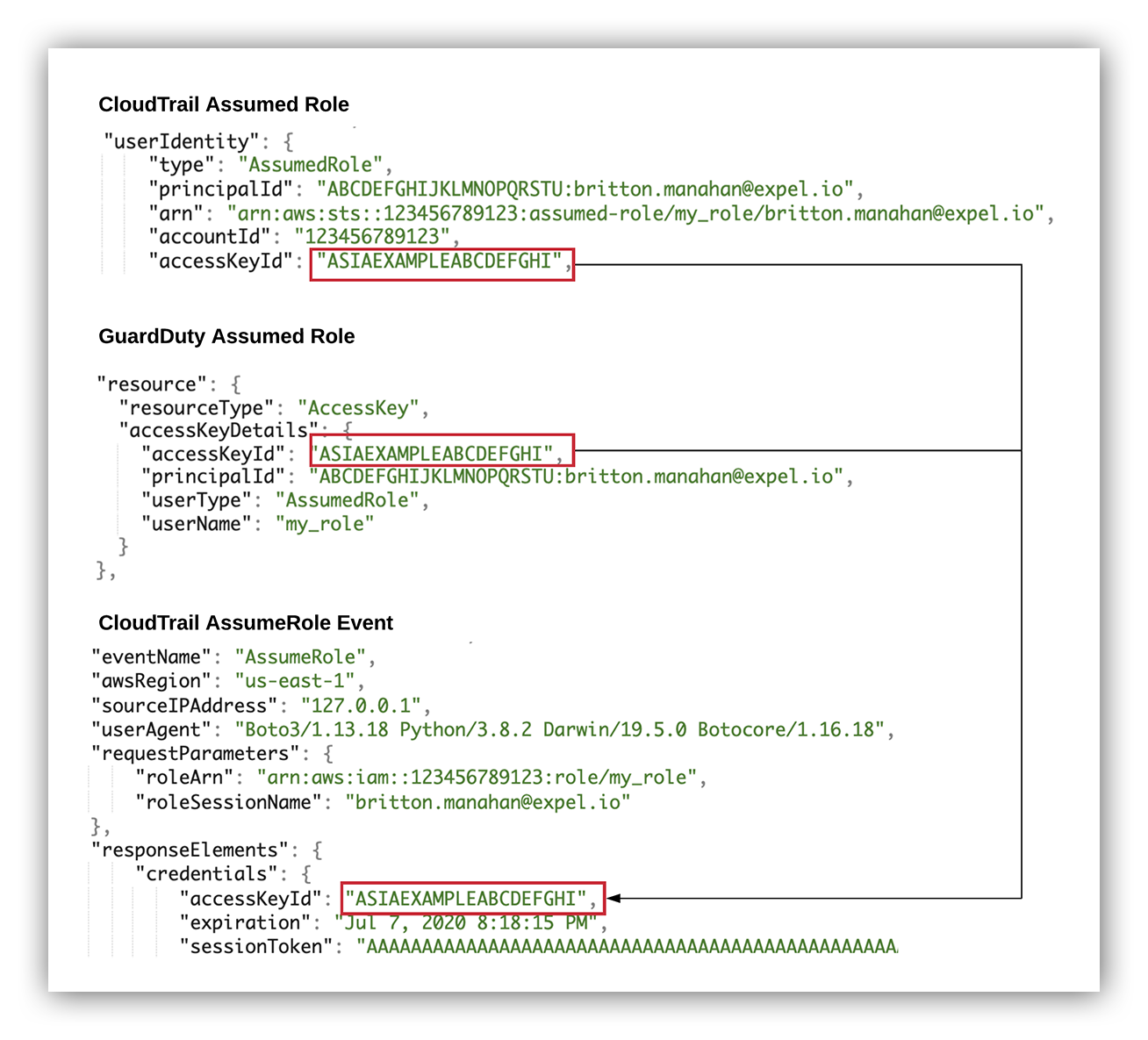

For command-line, software development kit (SDK) and SwitchRole activity in the Console, we can answer this question with the userIdentity section of CloudTrail logs, which contains the IAM principal making the call, including the access key used.

When a role is assumed in AWS, temporary security credentials are granted so the caller can take on the role’s access permissions. CloudTrail logs generated from subsequent calls using the temporary credentials (the assumed role instance) will include this access key ID in the userIdentity section.

This allows us to link any actions taken by a particular assumed role instance and resolve the assuming IAM entity. The latter is done by finding the matching accessKeyId attribute in the responseElements section of the corresponding AssumeRole CloudTrail event log.

It’ll look something like this:

Matching AccessKeyId activity to its corresponding AssumeRole Event
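The matching step can be sketched in Python. This is a minimal illustration, not Expel's implementation: it assumes CloudTrail records are already parsed into dicts that follow CloudTrail's JSON layout (`userIdentity.accessKeyId` on the alert event, `responseElements.credentials.accessKeyId` on AssumeRole events), and the helper names `index_assume_role_events` and `resolve_assuming_principal` are hypothetical.

```python
from typing import Any, Optional

Event = dict[str, Any]


def index_assume_role_events(assume_role_events: list[Event]) -> dict[str, Event]:
    """Map each temporary access key ID issued by AssumeRole to the event that issued it."""
    index: dict[str, Event] = {}
    for event in assume_role_events:
        # Failed calls have no credentials in responseElements, so they are skipped.
        creds = (event.get("responseElements") or {}).get("credentials") or {}
        key_id = creds.get("accessKeyId")
        if key_id:
            index[key_id] = event
    return index


def resolve_assuming_principal(alert_event: Event, assume_role_events: list[Event]) -> Optional[str]:
    """Return the ARN of the principal that assumed the role behind alert_event, if found."""
    key_id = alert_event.get("userIdentity", {}).get("accessKeyId")
    match = index_assume_role_events(assume_role_events).get(key_id) if key_id else None
    if match is None:
        return None
    # The caller of AssumeRole is recorded in that event's own userIdentity section.
    return match.get("userIdentity", {}).get("arn")
```

Because the resolved principal can itself be an assumed role, repeating the lookup with that principal's own access key walks back up a role chain one hop at a time.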

Not so fast: there are other use cases

While it would be great if this were all the logic required, there’s an additional use case for roles that needs to be considered: AWS SSO.

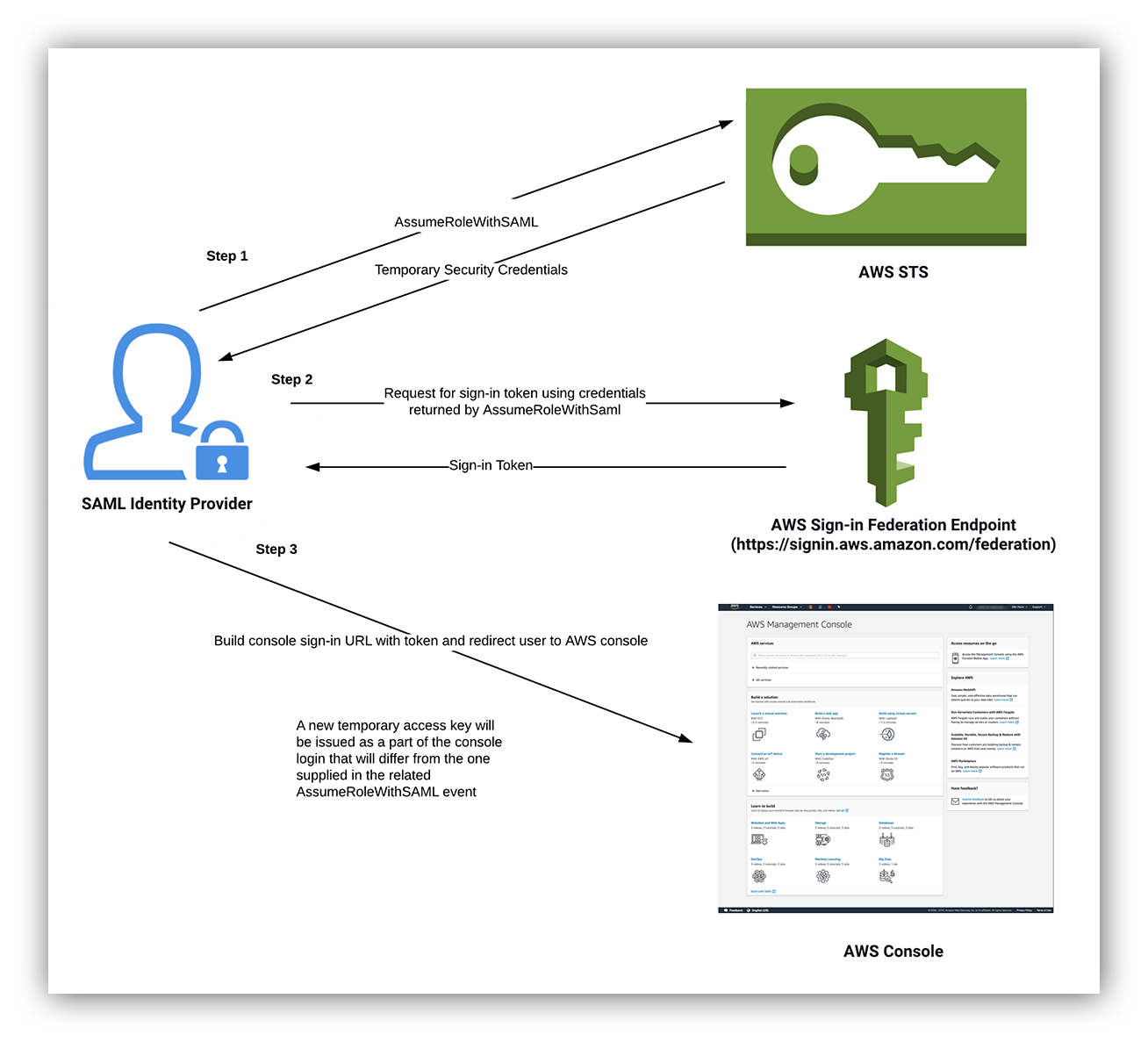

Federated users are able to assume roles assigned to them by their identity provider. This federated access system also allows federated users to log in to the AWS Console as an assumed role. We often see assumed roles as the user identity for ConsoleLogin events when SSO is configured through SAML 2.0 federation, but this can be done using any of the AssumeRole* operations to support different federated access use cases.

The following diagram provides a high-level summary of the steps involved in this process:

AssumeRoleWithSAML console login process

This process matters because actions taken by the assumed role in the console use a different access key than the one in the associated AssumeRoleWithSAML event.

Despite this, the corresponding AssumeRole event for this type of federated assumed role activity can still be located.

Evaluating returned events

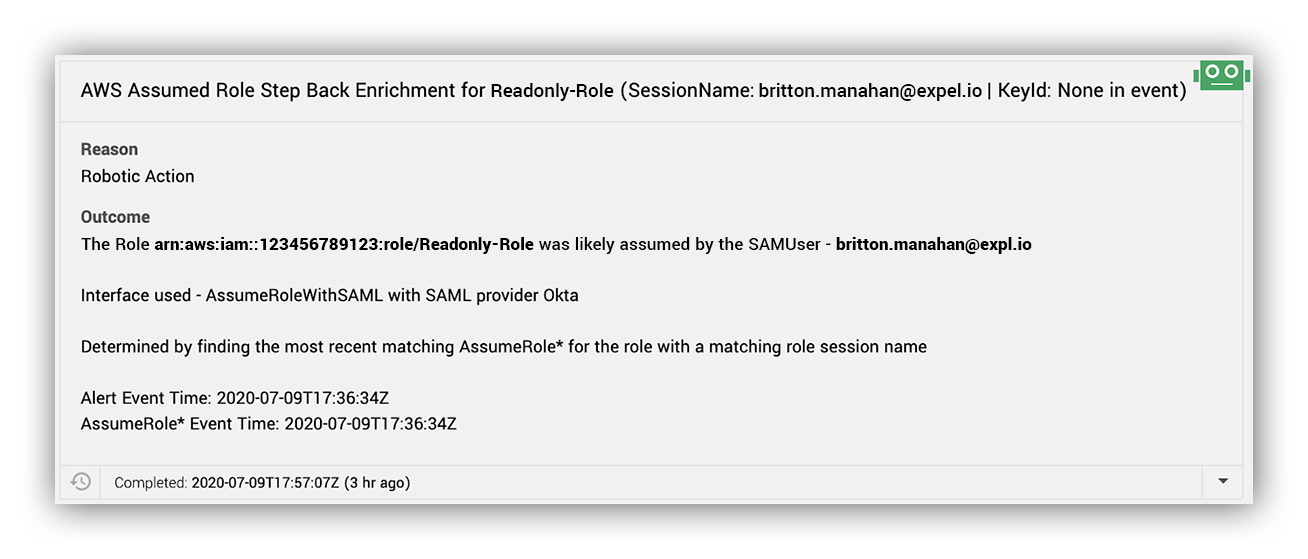

Following the logic shared in the AWS blog post, “How to Easily Identify Your Federated Users by Using AWS CloudTrail,” we collect all recent AssumeRole* events (the * is a wildcard) for the role with a matching role session name.

The returned events are then evaluated in the following order to determine the corresponding AssumeRole* log, with the matching logic provided to the user for clarity:

- If the alert source log includes an access key ID, is there an event with a matching access key ID?

- If the alert source log includes a session context creationDate, is there an event whose time matches it?

- If neither the access key ID nor the session creationDate produces a match, use the most recent AssumeRole* event returned by the query.
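A minimal sketch of that evaluation order, assuming the candidate list has already been narrowed to AssumeRole* events for the alert's role and session name. It reads `userIdentity.accessKeyId` and `userIdentity.sessionContext.attributes.creationDate` from the alert record; `select_assume_role_event` is a hypothetical name, and it returns the matching logic alongside the event so that logic can be shown to the analyst.

```python
from datetime import datetime
from typing import Any, Optional

Event = dict[str, Any]


def parse_ts(value: str) -> datetime:
    """Parse a CloudTrail-style ISO 8601 timestamp such as '2020-08-18T12:00:00Z'."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def select_assume_role_event(alert_event: Event, candidates: list[Event]) -> tuple[Optional[Event], str]:
    """Pick the AssumeRole* event behind an alert, returning (event, matching logic used)."""
    if not candidates:
        return None, "no AssumeRole* events found"
    identity = alert_event.get("userIdentity", {})

    # 1. Exact match on the temporary access key ID, when the alert log carries one.
    key_id = identity.get("accessKeyId")
    if key_id:
        for event in candidates:
            creds = (event.get("responseElements") or {}).get("credentials") or {}
            if creds.get("accessKeyId") == key_id:
                return event, "access key ID match"

    # 2. Match the session's creationDate against the AssumeRole* eventTime.
    created = identity.get("sessionContext", {}).get("attributes", {}).get("creationDate")
    if created:
        for event in candidates:
            if parse_ts(event["eventTime"]) == parse_ts(created):
                return event, "session creationDate match"

    # 3. Fall back to the most recent AssumeRole* event returned by the query.
    latest = max(candidates, key=lambda e: parse_ts(e["eventTime"]))
    return latest, "most recent AssumeRole* event"
```

This sketch compares the two timestamps for exact equality; if your data shows small offsets between them, a tolerance window would be a reasonable adjustment.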

This is what we see in the Expel Workbench:

Assumed role step back enrichment output

By the way, the robot referenced here is Ruxie.

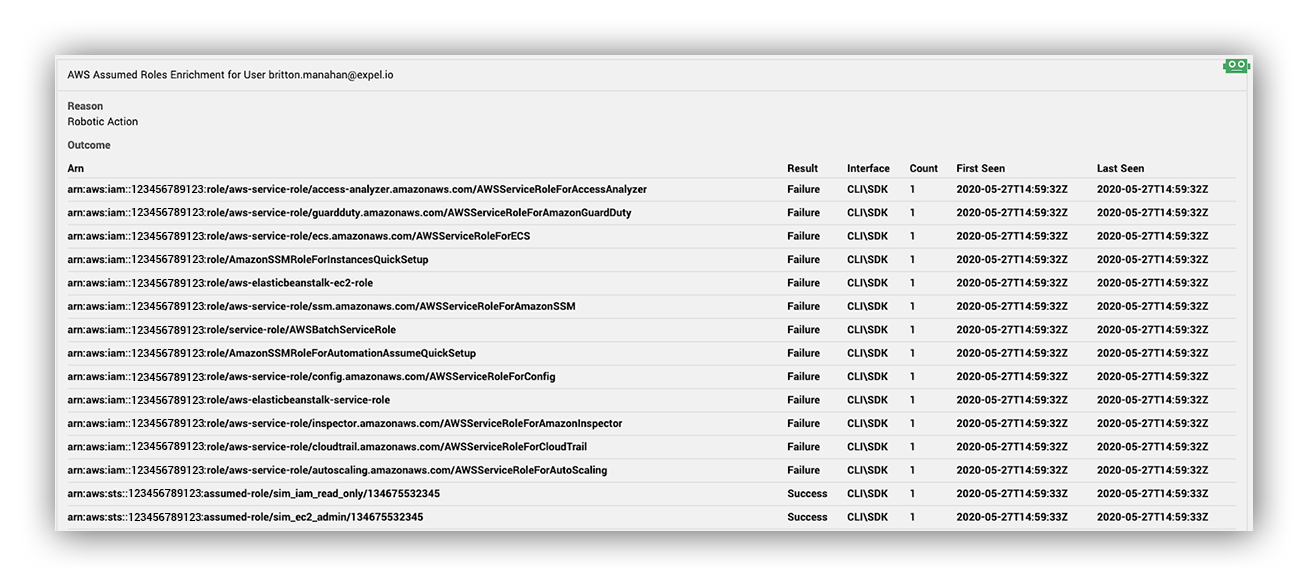

Did the alert IAM entity assume any roles?

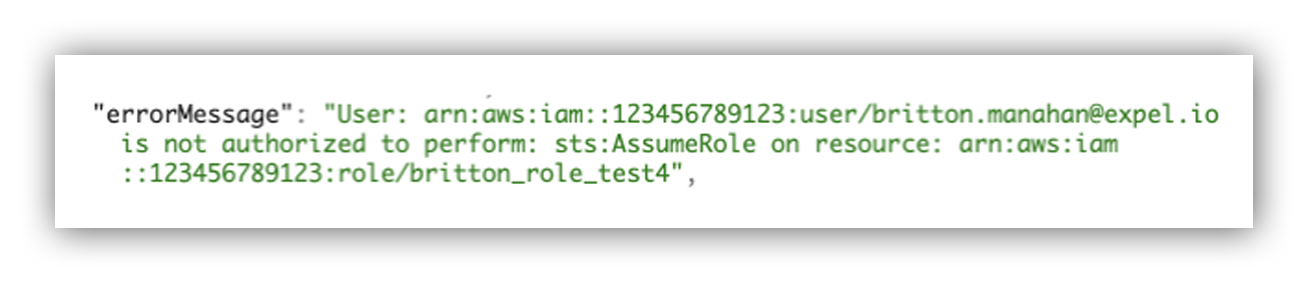

By including both successful and failed role assumptions, we can also see any brute force attempts to enumerate the roles a user has access to. When a call to AssumeRole fails, we use the errorMessage of the failed event to determine the target role.

Failed AssumeRole errorMessage
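Extracting the target role from a failed call might look like the following sketch. The message format matched by the regular expression is an assumption based on typical AccessDenied messages, so check it against your own logs; for successful calls the sketch reads `requestParameters.roleArn`. The function name `target_role_arn` is hypothetical.

```python
import re
from typing import Any, Optional

# Assumed shape of an AccessDenied message, e.g.
# "User: arn:aws:iam::111122223333:user/alice is not authorized to perform:
#  sts:AssumeRole on resource: arn:aws:iam::111122223333:role/Admin"
ROLE_IN_ERROR = re.compile(r"on resource: (arn:aws[\w-]*:iam::\d{12}:role/[\w+=,.@/-]+)")


def target_role_arn(event: dict[str, Any]) -> Optional[str]:
    """Return the role an AssumeRole call targeted, whether or not the call succeeded."""
    if event.get("errorCode"):
        # Failed calls: read the target role out of the error message.
        match = ROLE_IN_ERROR.search(event.get("errorMessage") or "")
        return match.group(1) if match else None
    # Successful calls: the requested role is in the request parameters.
    return (event.get("requestParameters") or {}).get("roleArn")
```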

Assumed roles enrichment output

Why do we ask this question? Because it gives analysts insight into any suspicious activity involving role assumption.

Determining how the role was assumed

Determining how the IAM role was assumed can be a valuable piece of context to have in combination with the provided role ARN, result, count and first/last timestamps.
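Here's one way to roll AssumeRole* events up into those fields, as a hypothetical sketch: it groups by role ARN and result (success, or the CloudTrail `errorCode`) and tracks the count plus the first and last `eventTime`. The `role_of` parameter defaults to reading `requestParameters.roleArn`; for failed calls you could pass a function that parses the errorMessage instead, as described above. All names here are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Callable


@dataclass
class RoleAssumptionSummary:
    role_arn: str
    result: str  # "success" or the CloudTrail errorCode, e.g. "AccessDenied"
    count: int
    first_seen: datetime
    last_seen: datetime


def default_role_of(event: dict[str, Any]) -> str:
    return (event.get("requestParameters") or {}).get("roleArn") or "unknown"


def summarize_assumptions(
    events: list[dict[str, Any]],
    role_of: Callable[[dict[str, Any]], str] = default_role_of,
) -> list[RoleAssumptionSummary]:
    """Group AssumeRole* events by (role ARN, result) with a count and first/last timestamps."""
    groups: dict[tuple[str, str], RoleAssumptionSummary] = {}
    for event in events:
        key = (role_of(event), event.get("errorCode") or "success")
        ts = datetime.fromisoformat(event["eventTime"].replace("Z", "+00:00"))
        summary = groups.get(key)
        if summary is None:
            groups[key] = RoleAssumptionSummary(key[0], key[1], 1, ts, ts)
        else:
            summary.count += 1
            summary.first_seen = min(summary.first_seen, ts)
            summary.last_seen = max(summary.last_seen, ts)
    # Most recently seen assumptions first.
    return sorted(groups.values(), key=lambda s: s.last_seen, reverse=True)
```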

There are a few ways a role is typically assumed:

- SAML/Web identity integration

- AWS web console

- AWS native services

- CLI / SDK

The logic checks for each interface in the following order:

- SAML or web identity federation, through the API event name (AssumeRoleWithSAML or AssumeRoleWithWebIdentity)

- The AWS web console, by looking at the invokedBy field (AWS Internal), source IP and user-agent

- An AWS service, by looking at the invokedBy field

- If none of the first three criteria match, the interface is determined to be the AWS CLI or an SDK
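The ordering above translates naturally into a chain of checks. In this sketch the fields (`eventName`, `userIdentity.invokedBy`, `sourceIPAddress`, `userAgent`) are real CloudTrail attributes, but the exact console test, treating the `AWS Internal` marker in any of them as a console signal, is an assumption rather than Expel's precise rule. `assumption_interface` is a hypothetical name.

```python
from typing import Any

# Assumption: console-driven role assumption surfaces this marker in one of the checked fields.
CONSOLE_MARKER = "AWS Internal"


def assumption_interface(event: dict[str, Any]) -> str:
    """Classify how a role was assumed, checking each interface in order."""
    name = event.get("eventName", "")
    invoked_by = (event.get("userIdentity") or {}).get("invokedBy", "")
    source_ip = event.get("sourceIPAddress", "")
    user_agent = event.get("userAgent", "")

    # 1. Federation is visible in the API name itself.
    if name in ("AssumeRoleWithSAML", "AssumeRoleWithWebIdentity"):
        return "SAML/Web identity"
    # 2. Console (assumed signal): the marker in invokedBy, source IP or user agent.
    if CONSOLE_MARKER in (invoked_by, source_ip, user_agent):
        return "AWS web console"
    # 3. AWS service: invokedBy carries a service principal such as 'ec2.amazonaws.com'.
    if invoked_by.endswith(".amazonaws.com"):
        return "AWS native service"
    # 4. Anything else is treated as the AWS CLI or an SDK.
    return "CLI / SDK"
```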

In order to support successful and failed calls, recent AssumeRole* events are collected for the user associated with the alert regardless of authentication status code. The query used to gather AssumeRole* events for this enrichment will vary slightly depending on whether the source alert user is an IAM user or assumed role.

Keep in mind that this particular workflow is also “role aware.” This means that additional logic is applied when gathering relevant IAM activity. When looking at AWS alerts based on an IAM user, we surface other relevant IAM activity that matches the IAM identifier. For IAM roles, we apply a filter to include only role activity where the session name or source IP matches what we saw in the alert. Given that roles in AWS can be assumed by multiple entities, this additional filter helps ensure that relevant results are being retrieved.
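A role-aware relevance filter could be sketched as follows. It assumes the identity being compared is taken from `userIdentity.arn` and that assumed-role ARNs follow the `arn:aws:sts::<account>:assumed-role/<role>/<session>` format; `split_assumed_role_arn` and `is_relevant` are hypothetical names.

```python
from typing import Any, Optional

Event = dict[str, Any]


def split_assumed_role_arn(arn: str) -> Optional[tuple[str, str]]:
    """Split an assumed-role ARN into (role part, session name); None for other ARNs."""
    # e.g. 'arn:aws:sts::111122223333:assumed-role/Admin/alice'
    if ":assumed-role/" not in arn:
        return None
    role_part, _, session = arn.rpartition("/")
    return role_part, session


def is_relevant(event: Event, alert_event: Event) -> bool:
    """Role-aware filter: keep events that plausibly belong to the alert's IAM entity."""
    alert_arn = alert_event["userIdentity"]["arn"]
    event_arn = (event.get("userIdentity") or {}).get("arn", "")
    alert_role = split_assumed_role_arn(alert_arn)
    if alert_role is None:
        # IAM user (or any non-role identity): match on the identifier directly.
        return event_arn == alert_arn
    event_role = split_assumed_role_arn(event_arn)
    if event_role is None or event_role[0] != alert_role[0]:
        return False
    # Same role: also require a matching session name or source IP, because
    # many different principals may assume the same role.
    same_session = event_role[1] == alert_role[1]
    same_ip = event.get("sourceIPAddress") == alert_event.get("sourceIPAddress")
    return same_session or same_ip
```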

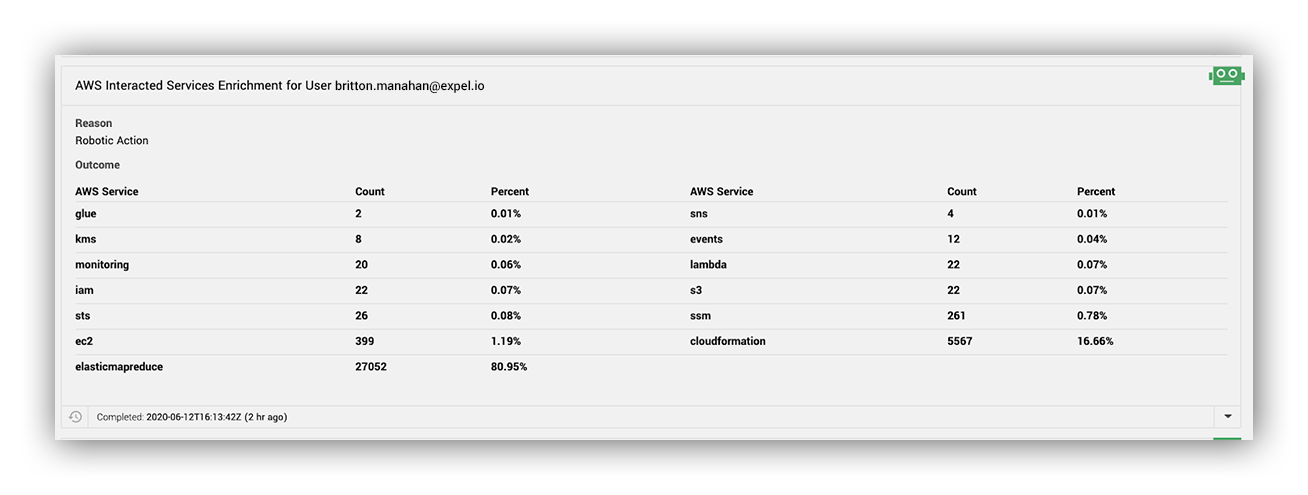

What services has the alert IAM entity interacted with?

Answering this question provides Expel analysts with a high level summary of the AWS services the IAM entity has interacted with in the past week. With over 200 services and counting, this enrichment helps provide information about both the type of activity the IAM entity is involved in and at what frequency.

This enrichment isn’t intended to act as a singular decision point, but rather to provide a simple summary of which services are in scope for the IAM entity in terms of actual interaction. When an alert moves into an investigation for a deeper dive, it also highlights AWS services that the IAM entity recently interacted with and that would be highly valuable to a potential attacker (EC2, IAM, S3, SSM, database services, Lambda, Secrets Manager and others). Additional context applied in tandem with this enrichment can surface more insights, such as an account used by automation deviating from the services it normally uses.

To determine what services a user interacted with, we query all of the principal’s CloudTrail events over the previous week, then total each unique service value to get a count for each AWS service the alert user interacted with.

Interacted services enrichment output
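Counting services is a straightforward aggregation. This sketch assumes the service is derived from each record's `eventSource` (for example `s3.amazonaws.com`) and that the events were already limited to the principal's past week. The `HIGH_VALUE` set is an illustrative pick based on the services listed above, with RDS and DynamoDB standing in for "database services"; the function names are hypothetical.

```python
from collections import Counter
from typing import Any

# Illustrative set of services attractive to an attacker, keyed by eventSource prefix.
HIGH_VALUE = {"ec2", "iam", "s3", "ssm", "lambda", "secretsmanager", "rds", "dynamodb"}


def service_counts(events: list[dict[str, Any]]) -> Counter:
    """Count how often the principal called each AWS service, keyed by short service name."""
    # Assumption: 's3.amazonaws.com' -> 's3'.
    return Counter(e.get("eventSource", "unknown").split(".amazonaws.com")[0] for e in events)


def high_value_services(counts: Counter) -> dict[str, int]:
    """Keep only the services an analyst would want called out during a deeper dive."""
    return {svc: n for svc, n in counts.items() if svc in HIGH_VALUE}
```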

Has the alert IAM entity made any recent interesting API calls?

We define interesting API calls as any AWS API call event that either failed or doesn’t start with Get (except GetPasswordData, which counts as interesting despite its prefix), List, Head or Describe. Having details on these types of activities is critical in determining whether an alert is a true positive.

After a threat actor gains access into an AWS account, they’re likely going to perform modifying API calls related to persistence, privilege escalation and data access. Some prime examples of modifying calls are AuthorizeSecurityGroupIngress, CreateKeyPair, CreateFunction, CreateSnapshot and UpdateAssumeRolePolicy.
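The definition above can be expressed as a single predicate. This sketch assumes "ApiEvent" corresponds to CloudTrail's `eventType` value `AwsApiCall` and that a failed call is one carrying an `errorCode`; `is_interesting` is a hypothetical name.

```python
from typing import Any

READ_ONLY_PREFIXES = ("Get", "List", "Head", "Describe")
# GetPasswordData returns a Windows instance's encrypted admin password, so it stays interesting.
READ_ONLY_EXCEPTIONS = {"GetPasswordData"}


def is_interesting(event: dict[str, Any]) -> bool:
    """Return True for failed API calls and for calls that aren't read-only by prefix."""
    if event.get("eventType") != "AwsApiCall":
        return False
    name = event.get("eventName", "")
    failed = bool(event.get("errorCode"))
    read_only = name.startswith(READ_ONLY_PREFIXES) and name not in READ_ONLY_EXCEPTIONS
    return failed or not read_only
```

Modifying calls such as AuthorizeSecurityGroupIngress pass the prefix test, while a DescribeInstances call only surfaces when it fails.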

In our recent blog post, we noted the highly suspicious output provided by this enrichment:

Expel Workbench alert example

While API calls that return AWS information, such as DescribeInstances, are important when scoping recon activity for established unauthorized access, they’re extremely common for most IAM entities. However, we do include them when they fail. We often observe a high number of unauthorized (access denied) errors when access to an IAM entity is first gained and the threat actor is unfamiliar with its permissions. They may be browsing to different services in the console or running automated recon across services they don’t have the required permissions for.

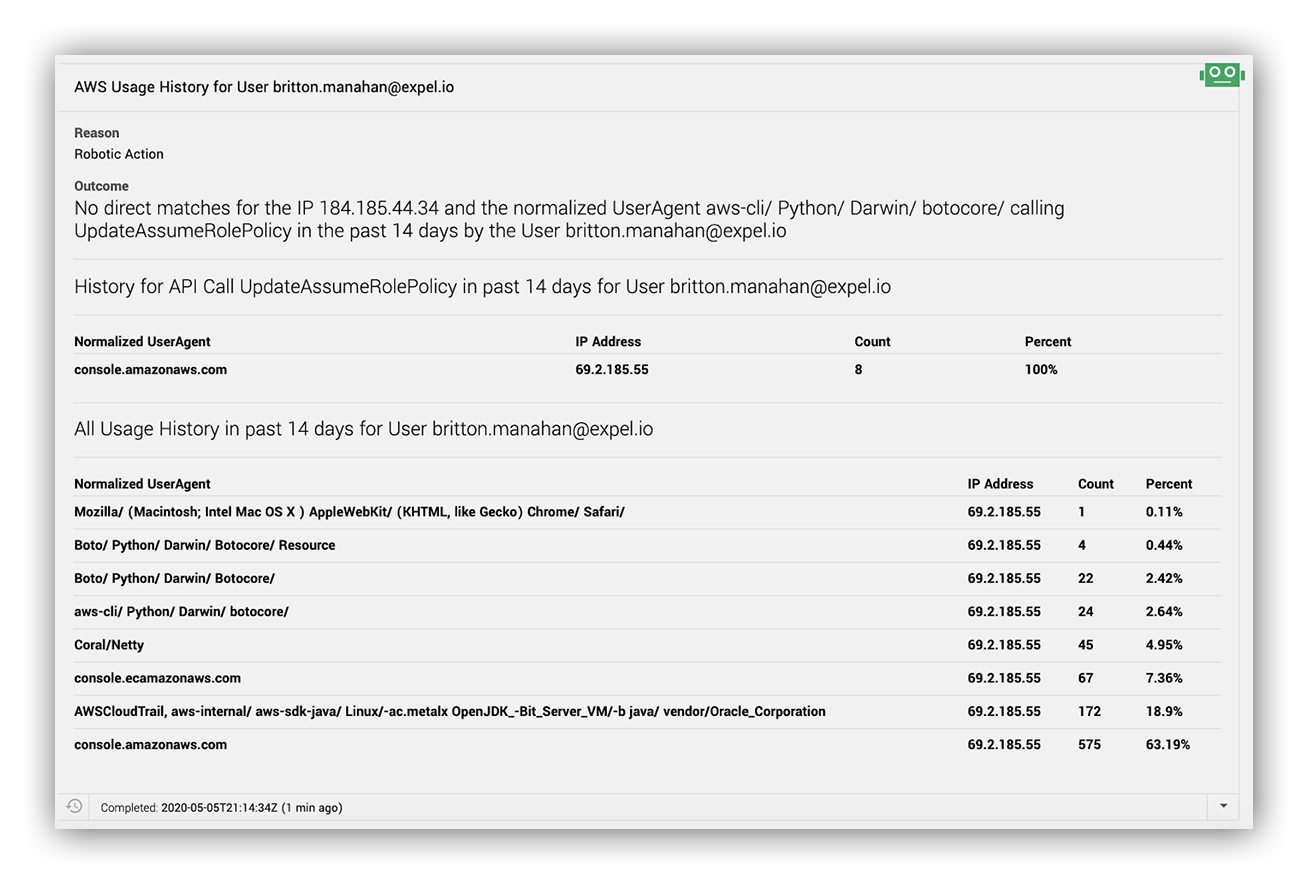

How does the alert activity align with historical usage for the IAM entity?

Lastly, we need to evaluate whether this activity is normal for this IAM entity.

To answer this question, we first need to decide on the window of time that makes up “normal activity.” We found that going back two weeks, while skipping the most recent 12 hours of CloudTrail activity, provides a sufficient historical view and reduces the likelihood that we show our analysts data tainted by the recent activity related to the alert. Within that window, we pull all API calls for the user in question along with their associated user-agents and IP addresses.

This is when we look to CloudTrail.

We pull CloudTrail activity that matches the IAM entity’s ARN or principal ID. For assumed roles, we use the role-aware logic we discussed previously.
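The baseline window can be computed from the alert timestamp. This sketch assumes the two weeks are measured back from the alert time and that the 12 hours immediately before the alert are excluded; the constant and function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

LOOKBACK = timedelta(weeks=2)      # how far back "normal activity" reaches
SKIP_RECENT = timedelta(hours=12)  # recent activity excluded to avoid alert-related taint


def baseline_window(alert_time: datetime) -> tuple[datetime, datetime]:
    """Return the (start, end) of the historical baseline for an alert."""
    if alert_time.tzinfo is None:
        # Treat naive datetimes as UTC, matching CloudTrail's timestamps.
        alert_time = alert_time.replace(tzinfo=timezone.utc)
    return alert_time - LOOKBACK, alert_time - SKIP_RECENT


def in_window(event_time: str, window: tuple[datetime, datetime]) -> bool:
    """True if a CloudTrail eventTime such as '2020-08-10T00:00:00Z' falls inside the window."""
    ts = datetime.fromisoformat(event_time.replace("Z", "+00:00"))
    start, end = window
    return start <= ts < end
```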

In order to summarize this data and compare the access attributes, we slice and dice it into the following views:

- How many times has the IAM entity made this API call from the same IP and user-agent? (GuardDuty based alerts will be compared on the IP only because they do not contain user agent details.)

- What IPs and user-agents has this IAM entity historically called this API with?

- What IPs and user-agents has this IAM entity historically used in all of its API calls?
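Those three views reduce to counting (IP, user-agent) pairs. The sketch below assumes the alert has been normalized into CloudTrail-style `eventName`, `sourceIPAddress` and `userAgent` fields and that `history` already holds the entity's events from the baseline window; setting `ip_only=True` mirrors the IP-only comparison used for GuardDuty-based alerts. `history_views` and `access_key` are hypothetical names.

```python
from collections import Counter
from typing import Any, Optional

Event = dict[str, Any]


def access_key(event: Event, ip_only: bool) -> tuple[str, Optional[str]]:
    """(source IP, user-agent); the user-agent is dropped for IP-only comparisons."""
    ip = event.get("sourceIPAddress", "")
    return (ip, None) if ip_only else (ip, event.get("userAgent", ""))


def history_views(alert: Event, history: list[Event], ip_only: bool = False) -> dict[str, Any]:
    """Build the three historical views for the alert's API call."""
    alert_key = access_key(alert, ip_only)
    same_api = [e for e in history if e.get("eventName") == alert["eventName"]]
    same_api_access = Counter(access_key(e, ip_only) for e in same_api)
    return {
        # View 1: times this API was called from the alert's IP (and user-agent).
        "same_api_same_access": same_api_access[alert_key],
        # View 2: every IP/user-agent seen calling this API.
        "access_for_this_api": same_api_access,
        # View 3: every IP/user-agent seen across all of the entity's calls.
        "access_for_all_apis": Counter(access_key(e, ip_only) for e in history),
    }
```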

The data comes out looking like this in our Expel Workbench:

This powerful enrichment allows our analysts to quickly understand whether this activity is common for the IAM entity and how the entity interacts with AWS services.

The associated user-agents show whether certain service interactions for an IAM entity are typically console based, made via the AWS CLI or made with a particular type of AWS SDK.

While a threat actor can easily spoof user-agents, aligning unauthorized access with an IAM entity’s historical activity is a much tougher task.

Parting thoughts and a resource for you

Rather than having analysts repeatedly perform tedious tasks for each AWS alert, these enrichments empower their decision making while simultaneously establishing standardization. The enrichment outputs work in tandem to provide a summary of both relevant recent and historical activity associated with the IAM entity to answer key questions in the AWS alert triage process.

Below is the workflow I walked you through in this post. Feel free to use it as a resource when assessing how CloudTrail logs can help automate AWS alert enrichments in your own environment.

If you have questions, reach out to me on Twitter or contact us here. We’d love to chat with you about AWS security.