Engineering · 8 MIN READ · DAN WHALEN · APR 13, 2023 · TAGS: Tech tools

We’ve written a lot about Kubernetes (k8s) in recent months, particularly on the need for improved security visibility. And we recently released a (first-to-market!) MDR solution for Kubernetes environments. Part of this journey involved overcoming a key technical challenge: what’s the best way to securely access the Kubernetes API for managed offerings like Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), and Azure Kubernetes Service (AKS)? Each cloud provider has its own middleware, best practices, and hurdles to clear. Figuring it all out can be tough: you can end up neck-deep in documentation, some of which is outdated or inaccurate.

In this post, we’ll share what we’ve learned along the way and give you the tools you need to do it yourself.

Why do this?

This is an oversimplification, but Kubernetes is really just one big, robust, well-conceived API. It orchestrates workloads, but it can also be used to understand what’s going on in the environment. You’ve probably used (or heard of) kubectl, right? It’s an incredibly useful tool, a client that interfaces with the k8s API. Anything you can do with kubectl, you can also do by calling the API directly, and you get the same information (and more).
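Here is a rough illustration of how kubectl maps onto the API. The sketch below shows how Kubernetes lays out resource URLs. Core resources (nodes, pods, namespaces) live under `/api/v1`. Resources in named API groups live under `/apis/{group}/{version}`. The `api_path` helper is hypothetical and was written only for this illustration. It is not part of any client library.

```python
def api_path(resource, namespace=None, group=None, version="v1"):
    """Build the REST path for listing a Kubernetes resource collection.

    Hypothetical helper: the core group is served under /api/{version},
    named groups (e.g. "apps") under /apis/{group}/{version}.
    """
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    if namespace:
        return f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{prefix}/{resource}"


# kubectl get nodes                -> GET /api/v1/nodes
print(api_path("nodes"))
# kubectl get pods -n default      -> GET /api/v1/namespaces/default/pods
print(api_path("pods", namespace="default"))
# kubectl get deployments -n prod  -> GET /apis/apps/v1/namespaces/prod/deployments
print(api_path("deployments", namespace="prod", group="apps"))
```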

Using Kubernetes APIs opens up a wide range of use cases, including automated resource inventory, reliability monitoring, security policy checks, and even some automated detection and response. But to do any of this, you first need to authenticate securely to the Kubernetes API of your managed cluster. In the following sections, we’ll walk you through how to do that for Google Cloud Platform (GCP), Microsoft Azure, and Amazon Web Services (AWS). If that sounds interesting, let’s get started.

Before you begin

Before we get into the tech details, it’s important to call out a few requirements.

Requirements

- Follow security best practices

- Use established patterns for each cloud provider

- Use existing vendor packages where possible (don’t reinvent the wheel)

Note

We’re going to focus specifically on accessing the Kubernetes API for Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS). We’re not going to cover getting network access to the Kubernetes API—there are too many permutations to cover, so we’ll assume you have network connectivity, whether it’s to a private cluster or a cluster with a public endpoint (maybe don’t do that, though).

Warning

The Python recipes we’re sharing below are just examples. Use them for inspiration—don’t copy and paste them into production.

What we’re solving for

Given cloud identity and access management (IAM) credentials for GCP, Azure, and AWS, and network connectivity to a Kubernetes cluster, how can we connect to the API in a way that satisfies all of our requirements?

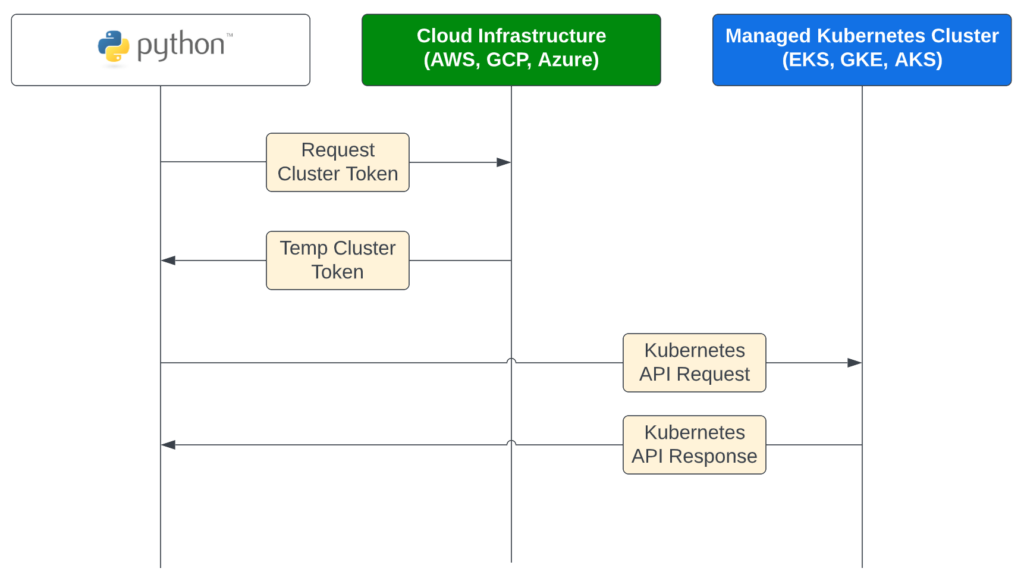

Each cloud infrastructure provider has its own managed Kubernetes offering and access patterns have some slight differences. At a high level, what we want to accomplish looks something like this:

Early on we made a key design choice: we strongly preferred to work only with cloud IAM credentials. Sure, we could technically create native Kubernetes service account tokens and use them to access the API, but this feels wrong for a few reasons:

- Minting service account tokens encourages long-lived credentials, an anti-pattern we’d like to avoid for security reasons.

- Using k8s service accounts means role-based access control (RBAC) authorization must be managed entirely in Kubernetes with Roles and RoleBindings. We’d like to avoid that wherever possible, as it’s not very accessible, is easy to misconfigure, and can be tough to audit.

- Managed k8s services have built-in authorization middleware we can use.
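All three recipes end the same way. You have a cluster endpoint, a short-lived bearer token minted from cloud IAM credentials, and (ideally) the cluster’s CA certificate. Below is a minimal, stdlib-only sketch of that final step, assuming you already have those three values. The function names, endpoint, and token are placeholders of ours. They are not part of any SDK.

```python
import ssl
import urllib.request


def build_request(endpoint, path, token):
    """Attach a short-lived, cloud-issued token as a Kubernetes bearer token."""
    return urllib.request.Request(
        url=endpoint.rstrip("/") + path,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


def tls_context(ca_pem=None):
    """Build a TLS context that verifies the API server certificate.

    Managed clusters typically present a certificate signed by a
    cluster-specific CA, so pass that CA's PEM text as ca_pem.
    """
    return ssl.create_default_context(cadata=ca_pem)


# Placeholder endpoint and token; nothing is sent until you call
# urllib.request.urlopen(req, context=tls_context(ca_pem)).
req = build_request("https://203.0.113.10:443", "/api/v1/nodes", "example-token")
```

The point of the sketch is that nothing in it is cloud-specific. Only how you obtain the endpoint, token, and CA differs between GKE, AKS, and EKS.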

Given that design, let’s take a look at the recipes for Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), and Amazon Elastic Kubernetes Service (EKS).

Connecting to Google Kubernetes Engine (GKE)

How it works

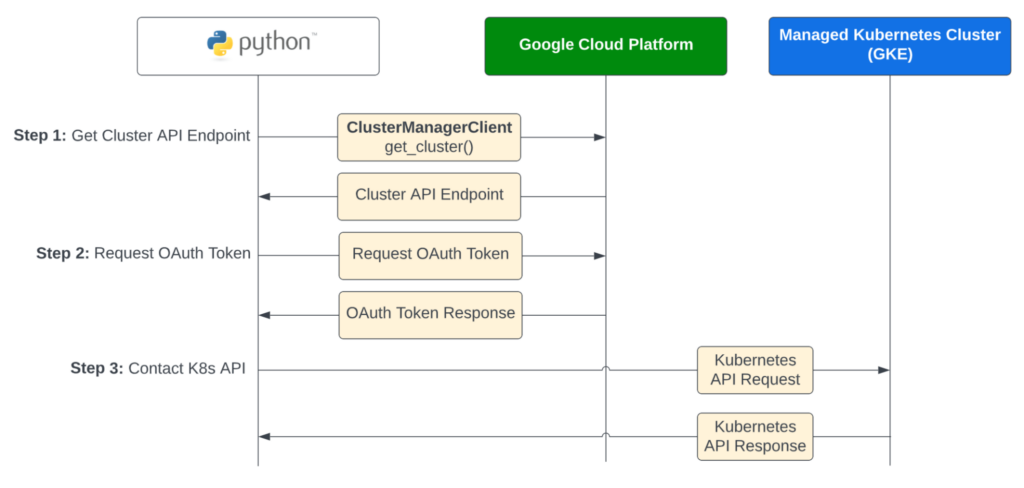

The recipe below uses a service account in GCP with a custom IAM role to access the Kubernetes API. In our view, Google has done a great job of making this simple and easy. The recipe takes advantage of existing Google SDKs to talk to the GCP control plane to get cluster details and an OAuth token for API access.

Prerequisites

- A GCP service account (not a Kubernetes service account) with generated JSON credentials

- The service account must be assigned IAM permissions to get cluster details and read data in Kubernetes (adjust these to your use case)

- Network access to your cluster’s API endpoint
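The second prerequisite calls for a narrowly scoped custom role. One way to express it is as a request body for the IAM API’s `projects.roles.create` method. This is a sketch. The role ID and title are hypothetical. The permission list is a read-only starting point, and it assumes you only need cluster metadata plus the ability to list nodes, namespaces, and pods.

```python
import json

# Hypothetical read-only custom role for the GKE recipe.
# container.clusters.get lets the service account call GetCluster for the endpoint;
# the *.list permissions are what GKE checks when the token is used against the K8s API.
CUSTOM_ROLE_REQUEST = {
    "roleId": "k8sApiReadOnly",  # hypothetical role ID
    "role": {
        "title": "Kubernetes API read-only (example)",
        "description": "Get GKE cluster details and list core resources",
        "stage": "GA",
        "includedPermissions": [
            "container.clusters.get",
            "container.namespaces.list",
            "container.nodes.list",
            "container.pods.list",
        ],
    },
}

print(json.dumps(CUSTOM_ROLE_REQUEST, indent=2))
```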

Python example

import logging

import google.auth.transport.requests
import kubernetes.client
from google.cloud.container_v1 import ClusterManagerClient, GetClusterRequest
from google.oauth2 import service_account

logging.basicConfig(level=logging.INFO)

# Update this to your cluster ID
CLUSTER_ID = "projects/kubernetes-integration-318317/locations/us-east1-b/clusters/gke-integration-test"

# Update this to your service account credentials file
GOOGLE_CREDENTIALS = "google_credentials.json"

logging.info("Retrieving cluster details for %s", CLUSTER_ID)
credentials = service_account.Credentials.from_service_account_file(GOOGLE_CREDENTIALS)
req = GetClusterRequest(name=CLUSTER_ID)
cluster_manager_client = ClusterManagerClient(credentials=credentials)
cluster = cluster_manager_client.get_cluster(req)
logging.info("Got cluster endpoint address %s", cluster.endpoint)

logging.info("Requesting an OAuth token from GCP...")
kubeconfig_creds = credentials.with_scopes(
    [
        "https://www.googleapis.com/auth/cloud-platform",
        "https://www.googleapis.com/auth/userinfo.email",
    ],
)
auth_req = google.auth.transport.requests.Request()
kubeconfig_creds.refresh(auth_req)
logging.info("Retrieved OAuth token for K8s API")

# Build endpoint string and token for K8s client
api_endpoint = f"https://{cluster.endpoint}:443"
api_token = kubeconfig_creds.token

logging.info("Building K8s API client")
configuration = kubernetes.client.Configuration()
configuration.api_key["authorization"] = api_token
configuration.api_key_prefix["authorization"] = "Bearer"
configuration.host = api_endpoint
# Example only: this skips TLS verification of the API server certificate.
# In production, set configuration.ssl_ca_cert to the cluster CA instead.
configuration.verify_ssl = False
k8s_client = kubernetes.client.ApiClient(configuration=configuration)

# Use K8s client to talk to Kubernetes API
logging.info("Listing nodes in this Kubernetes cluster")
core_v1 = kubernetes.client.CoreV1Api(api_client=k8s_client)
print("Retrieved Nodes:\n", core_v1.list_node())
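The recipe sets `verify_ssl = False` to keep things short, which means the client never checks the API server’s certificate. A safer option is to write the cluster’s CA certificate to a temporary file and point the client at it. GKE exposes that certificate as `cluster.master_auth.cluster_ca_certificate`, a base64-encoded PEM string. The sketch below assumes you already have the `cluster` object from `get_cluster`. `write_ca_file` is a hypothetical helper.

```python
import base64
import os
import tempfile


def write_ca_file(b64_pem):
    """Decode a base64-encoded PEM CA bundle and write it to a temp file.

    Returns the file path, suitable for kubernetes.client.Configuration.ssl_ca_cert.
    The caller is responsible for deleting the file when done.
    """
    pem = base64.b64decode(b64_pem)
    fd, path = tempfile.mkstemp(suffix=".crt")
    with os.fdopen(fd, "wb") as f:
        f.write(pem)
    return path


# In the GKE recipe, instead of configuration.verify_ssl = False:
# configuration.ssl_ca_cert = write_ca_file(cluster.master_auth.cluster_ca_certificate)
```

The same helper should also work for EKS. Its `describe_cluster` response carries the CA as `resp['cluster']['certificateAuthority']['data']`, also base64-encoded.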

Connecting to Azure Kubernetes Service (AKS)

How it works

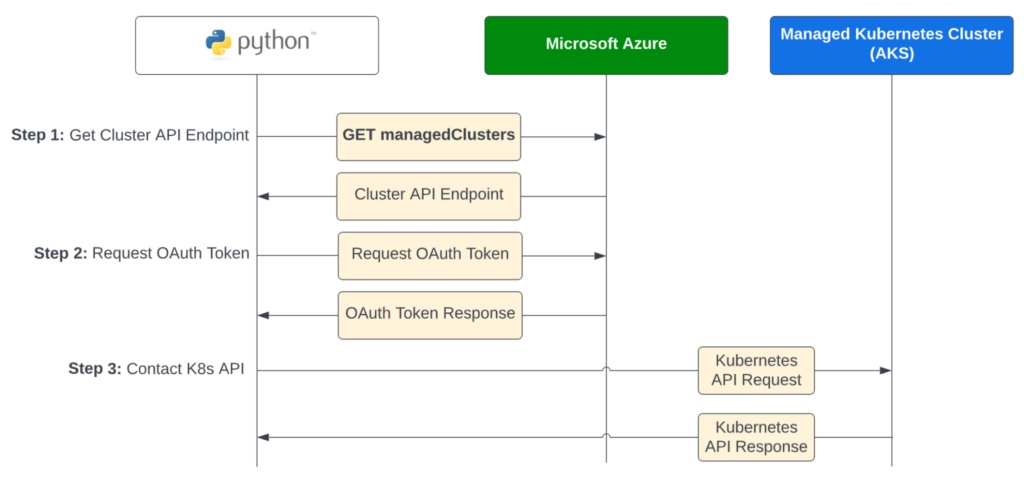

The recipe below uses an Azure application registration and a custom Azure role. Like Google, Microsoft put some thought into the linkages between Azure IAM and AKS. However, they’ve gone through multiple support iterations and offer several ways to do authentication and authorization for Azure Kubernetes Service (AKS). This can be confusing, and takes a lot of reading to figure out. Luckily, we’ve done all of that for you and can summarize.

There are three ways to configure authN and authZ for AKS:

- Legacy auth with client certificates: Kubernetes handles authentication and authorization.

- Azure AD integration: Azure handles authentication, Kubernetes handles authorization.

- Azure RBAC for Kubernetes authorization: Azure handles authentication and authorization.
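To see which of these three modes an existing cluster uses, you can inspect the `aadProfile` block of the managed cluster resource. This is the same `properties` object that the AKS recipe fetches from the management API. This is a sketch. It assumes the `enableAzureRBAC` field name as it appears in the managedClusters API responses we’ve worked with, so check it against your own response. `auth_mode` is a hypothetical helper.

```python
def auth_mode(props):
    """Classify an AKS cluster's auth setup from its ARM 'properties' dict."""
    aad = props.get("aadProfile") or {}
    if not aad:
        # No Azure AD profile: legacy client certificates, Kubernetes handles authN and authZ.
        return "legacy"
    if aad.get("enableAzureRBAC"):
        # Azure AD authenticates and Azure RBAC authorizes.
        return "azure-rbac"
    # Azure AD authenticates; in-cluster Kubernetes RBAC authorizes.
    return "aad-integration"


# Usage with the recipe's response: auth_mode(cluster["properties"])
```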

We examined these options and recommend the third, Azure RBAC for Kubernetes authorization, for a few reasons:

- Your authentication and authorization policies will exist in one place (Azure IAM).

- Azure IAM RBAC is more user-friendly than in-cluster RBAC configurations.

- Azure roles are easier to audit than in-cluster rules.

Based on these advantages, our Python recipe below authenticates with Azure, retrieves cluster details, and then requests an authentication token to communicate with the Kubernetes API.

Prerequisites

- An Azure AD application registration

- The application must be assigned IAM permissions to get cluster details and read data in Kubernetes (adjust these to your use case)

- Network access to your cluster’s API endpoint

Python example

import logging

import kubernetes.client
import requests

logging.basicConfig(level=logging.INFO)

# Update these to auth as your Azure AD App
TENANT_ID = "YOUR_TENANT_ID"
CLIENT_ID = "YOUR_CLIENT_ID"
CLIENT_SECRET = "YOUR_CLIENT_SECRET"

# Update these to specify the cluster to connect to
SUBSCRIPTION_ID = "YOUR_SUBSCRIPTION_ID"
RESOURCE_GROUP = "YOUR_RESOURCE_GROUP"
CLUSTER_NAME = "YOUR_CLUSTER_NAME"


def get_oauth_token(resource):
    """
    Retrieve an OAuth token for the provided resource (Azure AD v1.0 endpoint)
    """
    login_url = "https://login.microsoftonline.com/%s/oauth2/token" % TENANT_ID
    # requests form-encodes a dict passed as data= and sets the Content-Type header itself
    payload = {
        "grant_type": "client_credentials",
        "client_id": CLIENT_ID,
        "client_secret": CLIENT_SECRET,
        "resource": resource,
    }
    response = requests.post(login_url, data=payload)
    response.raise_for_status()
    logging.info("Got OAuth token for %s", resource)
    return response.json()["access_token"]


logging.info("Retrieving cluster endpoint...")
token = get_oauth_token("https://management.azure.com")
mgmt_url = "https://management.azure.com/subscriptions/%s" % SUBSCRIPTION_ID
mgmt_url += "/resourceGroups/%s" % RESOURCE_GROUP
mgmt_url += "/providers/Microsoft.ContainerService/managedClusters/%s" % CLUSTER_NAME
resp = requests.get(
    mgmt_url,
    params={"api-version": "2022-11-01"},
    headers={"Authorization": "Bearer %s" % token},
)
resp.raise_for_status()
props = resp.json()["properties"]
fqdn = props.get("fqdn") or props.get("privateFQDN")
api_endpoint = "https://%s:443" % fqdn
logging.info("Got cluster endpoint %s", api_endpoint)

logging.info("Requesting OAuth token for AKS...")
# Well-known application ID of the Azure Kubernetes Service AAD Server,
# shared by all AKS-managed Azure AD clusters
AKS_RESOURCE_ID = "6dae42f8-4368-4678-94ff-3960e28e3630"
api_token = get_oauth_token(AKS_RESOURCE_ID)

logging.info("Building K8s API client")
configuration = kubernetes.client.Configuration()
configuration.api_key["authorization"] = api_token
configuration.api_key_prefix["authorization"] = "Bearer"
configuration.host = api_endpoint
# Example only: this skips TLS verification of the API server certificate.
# In production, set configuration.ssl_ca_cert to the cluster CA instead.
configuration.verify_ssl = False
k8s_client = kubernetes.client.ApiClient(configuration=configuration)

# Use K8s client to talk to Kubernetes API
logging.info("Listing nodes in this Kubernetes cluster")
core_v1 = kubernetes.client.CoreV1Api(api_client=k8s_client)
print("Retrieved Nodes:\n", core_v1.list_node())
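The AKS recipe uses the older Azure AD v1.0 token endpoint with a `resource` parameter. You may prefer the Microsoft identity platform v2.0 endpoint instead. In that case, the client-credentials request asks for the `/.default` scope of the AKS server application. Below is a stdlib-only sketch that only builds the request and does not send it. The function name is ours, and the placeholder IDs must be replaced.

```python
import urllib.parse
import urllib.request

# Well-known application ID of the AKS Azure AD server (same value as in the recipe).
AKS_SERVER_APP_ID = "6dae42f8-4368-4678-94ff-3960e28e3630"


def build_v2_token_request(tenant_id, client_id, client_secret,
                           scope=AKS_SERVER_APP_ID + "/.default"):
    """Build (but don't send) a client-credentials request to the v2.0 token endpoint."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": scope,
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


req = build_v2_token_request("YOUR_TENANT_ID", "YOUR_CLIENT_ID", "YOUR_CLIENT_SECRET")
```

For the management API call, pass `scope="https://management.azure.com/.default"`. In both cases, the JSON response carries the token in `access_token`.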

Connecting to Amazon Elastic Kubernetes Service (EKS)

How it works

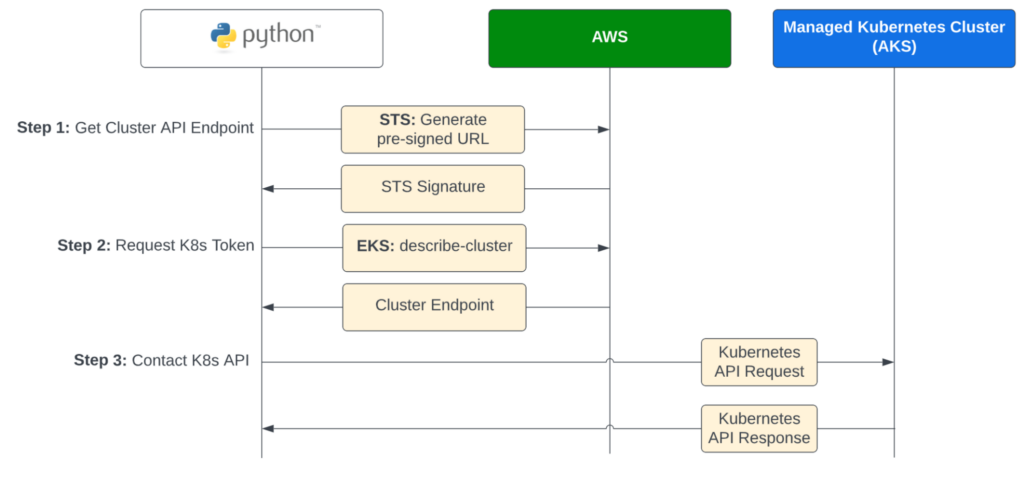

AWS clearly thought about the linkages for its cloud IAM service, but hasn’t built as robust an integration as Google or Microsoft. The end result is less than ideal. As much as we’d love to be able to keep authN and authZ management in AWS IAM, we currently don’t have that ability without installing additional third-party tools like kiam (although these tools are quickly becoming obsolete). For this recipe, we’ll focus on what’s possible with native Amazon Elastic Kubernetes Service (EKS) clusters and leave additional third-party tooling as an exercise for you, dear reader.

The recipe below uses an AWS IAM role to generate a token for EKS, which is an unusual (and not well-documented) process compared to GKE and AKS. To generate a token, we use the STS client to create a pre-signed URL for the GetCallerIdentity call, with the cluster name included as a signed x-k8s-aws-id header. The signing happens client-side. That URL, base64-encoded and prefixed with k8s-aws-v1., is the token. When EKS receives it, EKS calls the pre-signed URL to identify the calling IAM principal. This token authenticates the user, but we still rely on in-cluster RBAC policies for authZ.
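To make the token format concrete, here is a stdlib-only sketch of the encoding step the EKS recipe performs, plus its inverse. It works on any URL string. The example therefore uses a placeholder rather than a real signed STS request. `encode_eks_token` and `decode_eks_token` are hypothetical names.

```python
import base64

PREFIX = "k8s-aws-v1."


def encode_eks_token(presigned_url):
    """Prefix + URL-safe base64 of the pre-signed URL, with '=' padding stripped."""
    encoded = base64.urlsafe_b64encode(presigned_url.encode("utf-8")).decode("utf-8")
    return PREFIX + encoded.rstrip("=")


def decode_eks_token(token):
    """Recover the pre-signed URL, e.g. to debug what the API server will receive."""
    if not token.startswith(PREFIX):
        raise ValueError("not an EKS token")
    body = token[len(PREFIX):]
    body += "=" * (-len(body) % 4)  # restore the stripped padding
    return base64.urlsafe_b64decode(body).decode("utf-8")


# Placeholder, unsigned URL for illustration only.
url = "https://sts.us-east-1.amazonaws.com/?Action=GetCallerIdentity&Version=2011-06-15"
token = encode_eks_token(url)
```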

Prerequisites

- An IAM role with attached policies allowing it to get cluster details (eks:DescribeCluster) and contact the API

- Credentials for the assumed IAM role, exported as environment variables

- The aws-auth ConfigMap updated to map the IAM role to a Kubernetes user or group

- In-cluster RBAC Roles and RoleBindings that grant privileges to cluster resources
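The last two prerequisites involve three pieces. The sketch below builds them as plain Python dicts and prints the RBAC objects as JSON, which `kubectl apply -f` accepts. It assumes a read-only use case. The role ARN, username, group, and object names are hypothetical. The `mapRoles` entry holds the fields you would add to the aws-auth ConfigMap in `kube-system`. The ClusterRole and ClusterRoleBinding then grant that group read access.

```python
import json

ROLE_ARN = "arn:aws:iam::111122223333:role/k8s-api-reader"  # hypothetical
GROUP = "example-readers"  # hypothetical Kubernetes group name

# Entry for the mapRoles list in the kube-system/aws-auth ConfigMap.
map_roles_entry = {
    "rolearn": ROLE_ARN,
    "username": "k8s-api-reader",
    "groups": [GROUP],
}

# Read-only ClusterRole for a few core resources.
cluster_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "ClusterRole",
    "metadata": {"name": "example-read-only"},
    "rules": [{
        "apiGroups": [""],
        "resources": ["nodes", "namespaces", "pods"],
        "verbs": ["get", "list", "watch"],
    }],
}

# Bind the ClusterRole to the group the IAM role is mapped into.
cluster_role_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "ClusterRoleBinding",
    "metadata": {"name": "example-read-only"},
    "roleRef": {
        "apiGroup": "rbac.authorization.k8s.io",
        "kind": "ClusterRole",
        "name": cluster_role["metadata"]["name"],
    },
    "subjects": [{
        "apiGroup": "rbac.authorization.k8s.io",
        "kind": "Group",
        "name": GROUP,
    }],
}

print(json.dumps({"apiVersion": "v1", "kind": "List",
                  "items": [cluster_role, cluster_role_binding]}, indent=2))
```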

Python example

import base64
import logging

import boto3
import kubernetes.client

logging.basicConfig(level=logging.INFO)

AWS_REGION = "YOUR_AWS_REGION"
CLUSTER_NAME = "YOUR_CLUSTER_NAME"


class TokenGenerator(object):
    """
    Helper class to generate EKS tokens
    """

    def __init__(self, sts_client, cluster_name):
        self._sts_client = sts_client
        self._cluster_name = cluster_name
        self._register_cluster_name_handlers()

    def _register_cluster_name_handlers(self):
        # Pull the custom ClusterName param out before botocore validates the params...
        self._sts_client.meta.events.register(
            "provide-client-params.sts.GetCallerIdentity",
            self._retrieve_cluster_name,
        )
        # ...and sign it into the request as the x-k8s-aws-id header instead
        self._sts_client.meta.events.register(
            "before-sign.sts.GetCallerIdentity",
            self._inject_cluster_name_header,
        )

    def _retrieve_cluster_name(self, params, context, **kwargs):
        if "ClusterName" in params:
            context["eks_cluster"] = params.pop("ClusterName")

    def _inject_cluster_name_header(self, request, **kwargs):
        if "eks_cluster" in request.context:
            request.headers["x-k8s-aws-id"] = request.context["eks_cluster"]

    def get_token(self):
        """Generate a presigned url token to pass to kubectl."""
        url = self._get_presigned_url()
        token = "k8s-aws-v1." + base64.urlsafe_b64encode(
            url.encode("utf-8"),
        ).decode("utf-8").rstrip("=")
        return token

    def _get_presigned_url(self):
        return self._sts_client.generate_presigned_url(
            "get_caller_identity",
            Params={"ClusterName": self._cluster_name},
            ExpiresIn=60,
            HttpMethod="GET",
        )


logging.info("Retrieving cluster endpoint...")
eks_client = boto3.client("eks", region_name=AWS_REGION)
resp = eks_client.describe_cluster(name=CLUSTER_NAME)
api_endpoint = resp["cluster"]["endpoint"]
logging.info("Got cluster endpoint %s", api_endpoint)

logging.info("Retrieving K8s Token...")
sts_client = boto3.client("sts", region_name=AWS_REGION)
api_token = TokenGenerator(sts_client, CLUSTER_NAME).get_token()
logging.debug("Got cluster token")

logging.info("Building K8s API client")
configuration = kubernetes.client.Configuration()
configuration.api_key["authorization"] = api_token
configuration.api_key_prefix["authorization"] = "Bearer"
configuration.host = api_endpoint
# Example only: this skips TLS verification of the API server certificate.
# In production, set configuration.ssl_ca_cert to the cluster CA instead.
configuration.verify_ssl = False
k8s_client = kubernetes.client.ApiClient(configuration=configuration)

# Use K8s client to talk to Kubernetes API
logging.info("Listing nodes in this Kubernetes cluster")
core_v1 = kubernetes.client.CoreV1Api(api_client=k8s_client)
print("Retrieved Nodes:\n", core_v1.list_node())
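All three recipes mint short-lived tokens, so a long-running tool will need to refresh them. An EKS token, for example, is only accepted for about 15 minutes. One simple approach is to cache the token and re-run the recipe’s token function shortly before an assumed lifetime elapses. The sketch below does this with a hypothetical `CachedToken` wrapper. The lifetime and skew values are assumptions you would tune per provider.

```python
import time


class CachedToken:
    """Cache a token from `fetch` and refresh it before `ttl` seconds elapse."""

    def __init__(self, fetch, ttl, skew=60, clock=time.monotonic):
        self._fetch = fetch  # zero-argument function returning a fresh token
        self._ttl = ttl      # assumed token lifetime, in seconds
        self._skew = skew    # refresh this many seconds before the assumed expiry
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._skew:
            self._token = self._fetch()
            self._expires_at = now + self._ttl
        return self._token


# Usage with the EKS recipe (assumed ~15-minute lifetime):
# cache = CachedToken(lambda: TokenGenerator(sts_client, CLUSTER_NAME).get_token(), ttl=900)
# configuration.api_key["authorization"] = cache.get()
```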

Conclusion

Our Workbench platform runs on Kubernetes. We’ve been building on k8s for many years now and are excited to help organizations secure it. Kubernetes can be a bit intimidating, especially if you haven’t had hands-on experience. We hope that by sharing our insights we can advance the state of Kubernetes security more broadly and get security teams more involved.

We can’t wait to see what people build…