SOC · 8 MIN READ · JUN 2, 2021 · TAGS: leadership & management

There’s a common assumption that there will always be tradeoffs between scale and quality.

When we set out to build our security operations center (SOC) here at Expel, we didn’t want to trade quality for efficiency – it just didn’t feel right.

So, when we started the team, we pledged that quality and scale would increase together. Our team’s response: challenge accepted.

That commitment to quality now extends to every aspect of our operations – our customers can see everything we do. So our work has to be fast and it’s got to be good.

In previous posts, we’ve talked a lot about how we’ve scaled our SOC with automation. This is something of a sweet spot for us – we’ve had some pretty good wins and learned a ton about metrics along the way.

In this post, we’re going to walk you through the quality end of the equation – how we measure and manage quality in our SOC. Along the way, we’ll share a bit about the problems we’ve encountered, how we’ve thought about them and some of our guiding principles.

TL;DR: quality is not based on what you assert; it’s based on what you inspect. It’s not enough to say, “We’re going to do Lean Six Sigma” – you have to inspect the work. And how you inspect the work matters.

A super quick primer on quality

If you’re new to quality and need a quick primer, read along. For folks who already know the difference between quality assurance and quality control, feel free to jump ahead.

There are two key quality activities: quality assurance (QA), which is meant to prevent defects, and quality control (QC), which is used to detect them once they’ve happened.

In a SOC, QA is the set of checks you build into your process. It can be anything from a person asking, “Hey, can I get a peer review of this report?” to an automated check (in our case, our bots) saying, “Tuning this alert will drop 250,000 other alerts, are you sure?”

You probably have a ton of these checks in your SOC even if you don’t call them QA. They’re likely a buildup of “fix this,” “now fix that” lessons learned.

QC is a bit different. It measures the output of the process against an ideal. For example, in a lumber yard, newly milled boards are checked for the correct dimensions before they’re loaded onto a truck.

Quality control consists of three main components:

- What you’re going to measure

- How you’re going to measure it

- What you’re going to do with the measurements

What you measure

For a mechanical process – like those newly milled boards – it’s possible to check every board mechanically.

For investigations that require human judgment, we realized that any QC beyond trivial automated checks quickly became unsustainable. Our SOC runs about 33 investigations a day (based on change-point analysis), and we can’t have another analyst check over each one, or we’d spend most of our time chasing ghosts. (There’s that darn scale and quality tradeoff.)

We didn’t give up though – we just figured out that we needed to be more clever in how we apply our resources.

We decided on two things:

- We would sample from the various operational outputs (like investigations, incidents and reports). Turns out, there’s an industry standard on how to do that!

- We would ensure the sample was representative of the total population.

Following these two guidelines, we use our sample population as a proxy for the larger output population. By measuring the quality in our sample, we can determine if our process is working at a reasonable level.

ISO 2859-1 – Acceptance Quality Limit (AQL) has entered the chat.

TL;DR on ISO 2859-1:

- The things you make are your “lot.”

- ISO 2859-1 tells you how many items (in our case: alerts/investigations/incidents) you should inspect to get a reasonable measure of overall quality. The number depends on how many you produce (your lot size) and your inspection level.

- The AQL you pick then tells you how many defects in that sample equal a failed quality inspection.

- If your sample contains more defects than your AQL allows, you fail. If not, you pass.

- There are three general inspection levels (I, II and III). The better your quality is, the lower the level you can use and the less of your lot you have to inspect. The worse your quality is, well, you’re inspecting more.

- Here’s an AQL calculator that I’ve found super helpful.
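Here’s a minimal Python sketch of the sample-size half of ISO 2859-1. Table 1 maps a lot size and a General Inspection Level to a sample size code letter, and each letter maps to a sample size. We transcribed the values from the standard as we understand it, so check them against your copy (or an AQL calculator) before relying on them. The acceptance numbers that depend on the AQL you pick live in separate tables and aren’t included here. For very small lots, those tables can redirect you to a neighboring plan. That is likely why the incidents row in the sampling table below shows 3 rather than 2. The `sample_size` helper is our own name.

```python
import bisect
from typing import Tuple

# Upper bounds of the ISO 2859-1 Table 1 lot-size ranges:
# 2-8, 9-15, 16-25, ..., 150 001-500 000 (anything larger is the last range).
LOT_UPPER_BOUNDS = [8, 15, 25, 50, 90, 150, 280, 500, 1_200, 3_200,
                    10_000, 35_000, 150_000, 500_000]

# Sample size code letter for each lot-size range, per General Inspection Level.
CODE_LETTERS = {
    "I": "AABCCDEFGHJKLMN",
    "II": "ABCDEFGHJKLMNPQ",
    "III": "BCDEFGHJKLMNPQR",
}

# Sample size for each code letter.
SAMPLE_SIZES = {
    "A": 2, "B": 3, "C": 5, "D": 8, "E": 13, "F": 20, "G": 32, "H": 50,
    "J": 80, "K": 125, "L": 200, "M": 315, "N": 500, "P": 800, "Q": 1250, "R": 2000,
}


def sample_size(lot_size: int, level: str = "II") -> Tuple[str, int]:
    """Return (code letter, number of items to inspect) for one lot.

    Level II is the standard's default; Level I inspects the fewest items.
    """
    if lot_size < 2:
        raise ValueError("ISO 2859-1 Table 1 starts at a lot size of 2")
    letter = CODE_LETTERS[level][bisect.bisect_left(LOT_UPPER_BOUNDS, lot_size)]
    # If the sample would be as large as the lot, inspect every item instead.
    return letter, min(SAMPLE_SIZES[letter], lot_size)


print(sample_size(500, "I"))  # a day's alerts at Level I
print(sample_size(30, "I"))   # a day's investigations at Level I
```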

Reminder: quality is not based on what you assert; it’s based on what you inspect.

Let’s put this into practice using some made-up numbers with our actual SOC process:

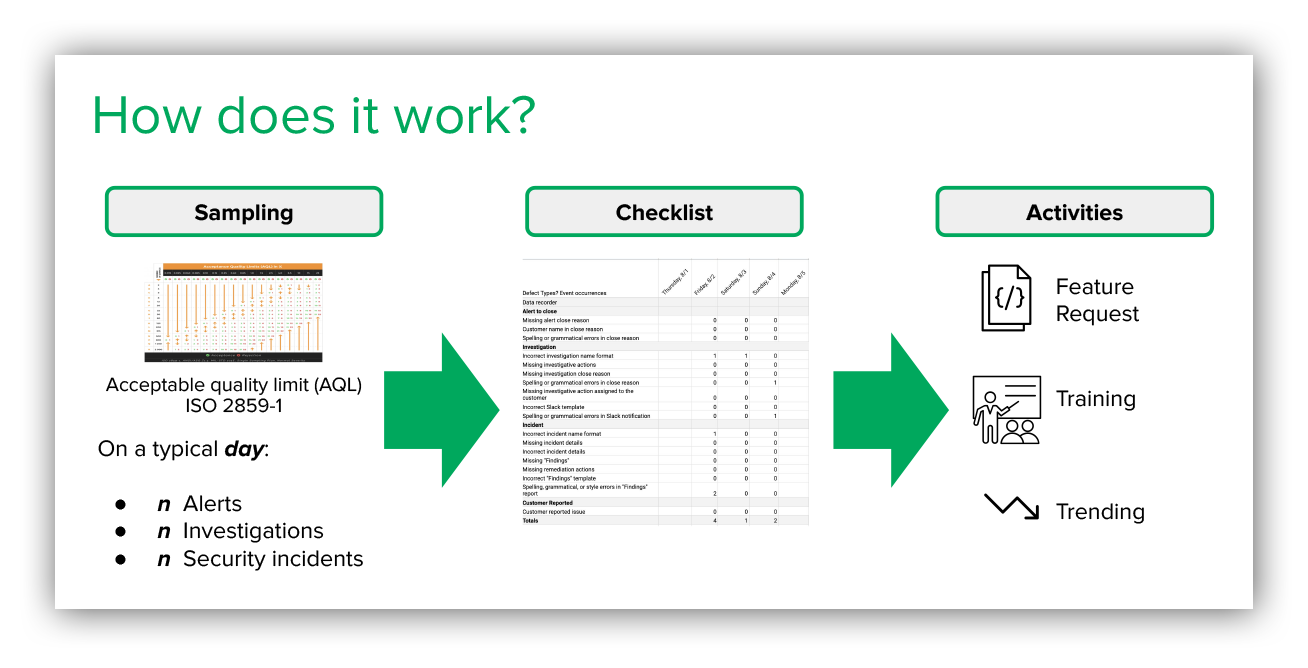

On a typical day in our SOC:

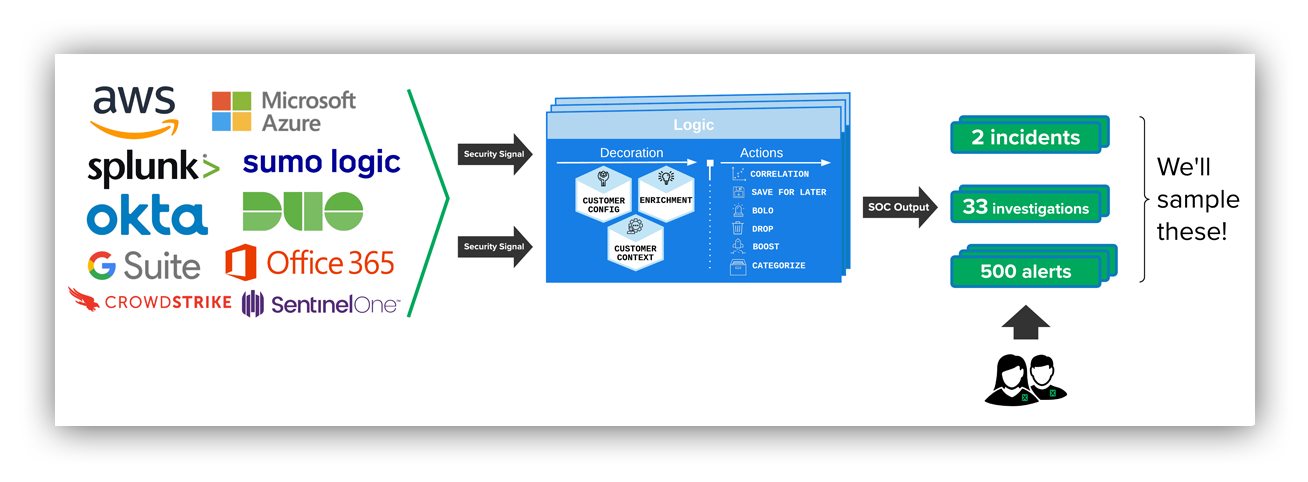

- We’ll process millions of events using our detection bot, Josie.

- Those millions of events will result in about 500 alerts sent to a SOC analyst for human judgment.

- About 33 of those alerts will result in a deeper-dive investigation.

- All of that will result in two to three security incidents.

The image below gives a high-level visual of how the system works. Security signals come in and are processed by a detection engine. Then a human takes a sample of the data and applies their expertise to determine quality.

High-level diagram of the Expel detection system

The table below shows how we break our SOC output into three lots.

SOC output

| Work item | Daily lot size |
|---|---|
| Alerts | ~500 |
| Investigations | 30 |
| Incidents | 2-3 |

Recall that ISO 2859-1 has three general inspection levels (I, II and III).

We use General Inspection Level I at Expel. It draws the smallest samples, so it’s the lowest-cost option, but it assumes quality is already good.

If you’re just getting started, it’s OK to start with Level I. If, after inspecting, you find your quality isn’t that great, it’s time to move up a level.

Now, this is the point where you can manually review AQL tables or you can use an online AQL calculator to make things a bit easier.

Let’s try this with our alert lot.

Our lot is about 500 alerts, our General Inspection Level is I, and I’m going to set an AQL of 4.0.

With those inputs, the ISO 2859-1 tables (or the calculator) indicate we should review 20 alerts to get a sample that’s representative of the total population of alerts.

If we apply the same methodology across all of our work, here’s what our sampling ends up looking like:

SOC output (“lot size”) vs. sample size

| Work item | Daily lot size | General Inspection Level | Sample size | AQL |
|---|---|---|---|---|
| Alerts | ~500 | I | 20 | 4.0 |
| Investigations | 30 | I | 5 | 4.0 |
| Incidents | 2-3 | I | 3 | 4.0 |

How you measure

Once we have a sample, we need a way to measure it against what’s considered good.

As we built our process, we decided to adhere to a mantra:

- Our measurements must be accurate and precise

First, our measurements need to be accurate: they have to represent the true difference between an output and the ideal.

If you’re stuck on this, the best thing we found is to convert whatever you’re looking at into a number. For example, if your reports need high-quality writing, try a grammar score rather than relying on subjective judgments (“the author’s use of metaphor was challenging…”).

In addition to being accurate, our measurements need to be precise. In other words, they need to be reproducible. We work in shifts, and if one shift consistently grades more leniently than another, it’s going to cause a problem.
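A consistently lenient shift shows up when both shifts grade the same items. Here’s a minimal pandas sketch of that kind of calibration check. The data, the column names and the idea of a shared calibration set are our assumptions, not a description of Expel’s process.

```python
import pandas as pd

# Hypothetical calibration exercise: day and night shifts each grade the
# same three investigations with the same check sheet.
grades = pd.DataFrame({
    "shift": ["day", "day", "day", "night", "night", "night"],
    "item": ["INV-1", "INV-2", "INV-3", "INV-1", "INV-2", "INV-3"],
    "defects": [1, 0, 2, 0, 0, 1],
})

# One row per item, one column per shift.
by_item = grades.pivot(index="item", columns="shift", values="defects")

# Positive gaps mean the night shift found fewer defects than the day shift.
gap = by_item["day"] - by_item["night"]
print("mean gap:", gap.mean())
print("share of items graded differently:", (gap != 0).mean())
```

A mean gap that stays on one side of zero over several rounds points to a grading-consistency problem rather than random noise.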

For us, that’s where the QC check sheet came in.

A QC check sheet is a simple way to record and summarize what happened during an inspection.

Think about your car’s safety inspection. After the super fun time of waiting in line, the technician walks through a series of specific “checks” and collects information about defects detected. Wipers? Check! Headlights? Check! Brakes? Doh!

If everything checks out, you pass inspection. If your brakes don’t work (a major defect), you fail.

Our team’s inspections work the same way: the inspector follows a series of defined checks, and the outcome of each check is recorded and scored.
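A check sheet maps naturally onto a small data structure. The sketch below records each defined check, scores the sheet and fails on any major defect, as in the car example. It’s a minimal illustration. The `Check` and `CheckSheet` classes and the scoring rule (share of checks passed) are our own assumptions, not the contents of Expel’s check sheet.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Check:
    name: str
    major: bool = False  # failing a major check fails the whole inspection


@dataclass
class CheckSheet:
    checks: List[Check]
    results: Dict[str, bool] = field(default_factory=dict)

    def record(self, name: str, passed: bool) -> None:
        """Record the outcome of one defined check."""
        if name not in {check.name for check in self.checks}:
            raise KeyError(f"not on this check sheet: {name}")
        self.results[name] = passed

    def defects(self) -> List[str]:
        """Names of checks recorded as failed."""
        return [c.name for c in self.checks if self.results.get(c.name) is False]

    def score(self) -> float:
        """Share of checks on the sheet recorded as passed."""
        return sum(self.results.get(c.name) is True for c in self.checks) / len(self.checks)

    def passed(self) -> bool:
        """Pass only if every check was recorded and no major check failed."""
        if len(self.results) < len(self.checks):
            raise ValueError("inspection is incomplete")
        return not any(c.major and not self.results[c.name] for c in self.checks)


# Usage: the car inspection from above.
sheet = CheckSheet([Check("wipers"), Check("headlights"), Check("brakes", major=True)])
sheet.record("wipers", True)
sheet.record("headlights", True)
sheet.record("brakes", False)
print(sheet.defects(), sheet.score(), sheet.passed())
```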

If you’re wondering what our SOC QC check sheet looks like, you can go and grab a copy at the end of this post.

Putting it all together

We have our sample size. We have our QC check sheet. But how do we go about randomly selecting our sample?

A Jupyter notebook, of course!

We use the Python pandas library to draw samples at random. We draw these samples from work done during both day and night hours.
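Here’s a minimal pandas sketch of drawing a reproducible random sample that covers both day and night work. It assumes a hypothetical `created_at` column and a hypothetical day shift running from 08:00 to 19:59. It draws two items per shift for readability; in practice you’d split a lot’s full sample size (say, 20 alerts) across shifts. Stratifying by shift is one way to make sure both are represented, not necessarily how Expel’s notebook does it. `DataFrameGroupBy.sample` needs pandas 1.1 or later.

```python
import pandas as pd

# Hypothetical export of one day's alerts (12 alerts, one every two hours).
alerts = pd.DataFrame({
    "alert_id": range(1, 13),
    "created_at": pd.date_range("2021-05-05 00:00", periods=12, freq="120min"),
})

# Hypothetical shift boundary: the day shift covers 08:00 to 19:59.
day = alerts["created_at"].dt.hour.between(8, 19)
alerts["shift"] = day.map({True: "day", False: "night"})

# Draw the same number of alerts from each shift. random_state makes the
# draw reproducible, so a second inspector can pull the identical sample.
sample = alerts.groupby("shift").sample(n=2, random_state=7)
print(sample)
```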

We perform quality inspections every day. Let’s walk through one:

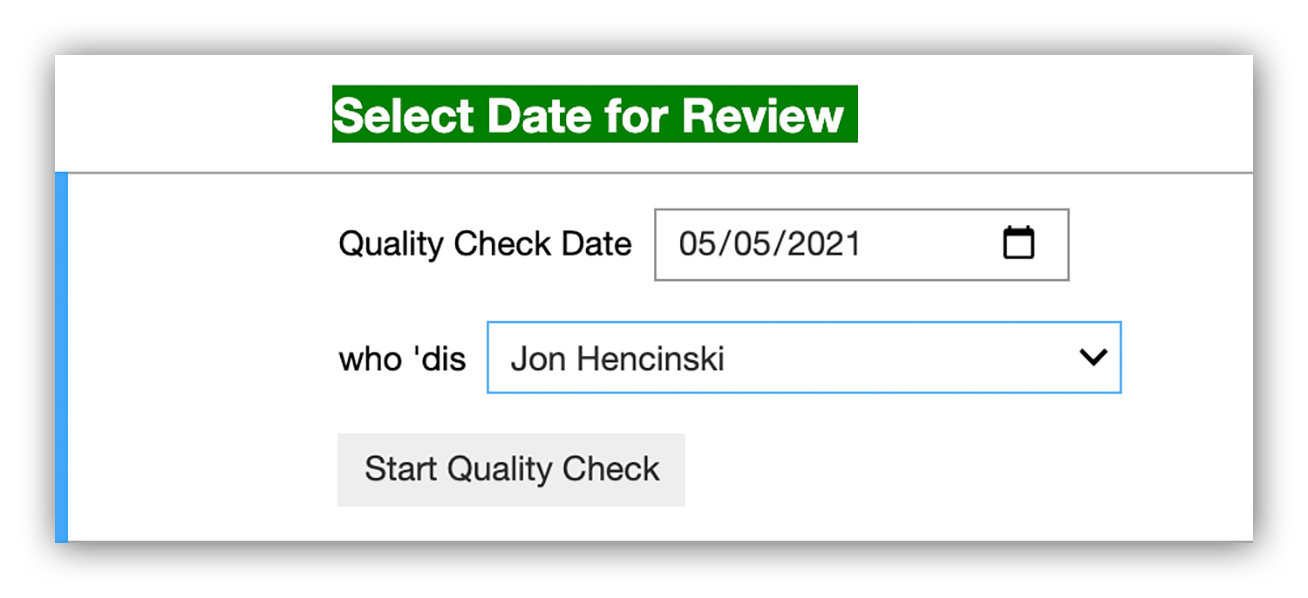

We open our Jupyter quality control notebook and select which day’s work we want to inspect. We record the inspector, smash the “Start Quality Check” button and then we’re off to the races.

Expel SOC QC check initialization step

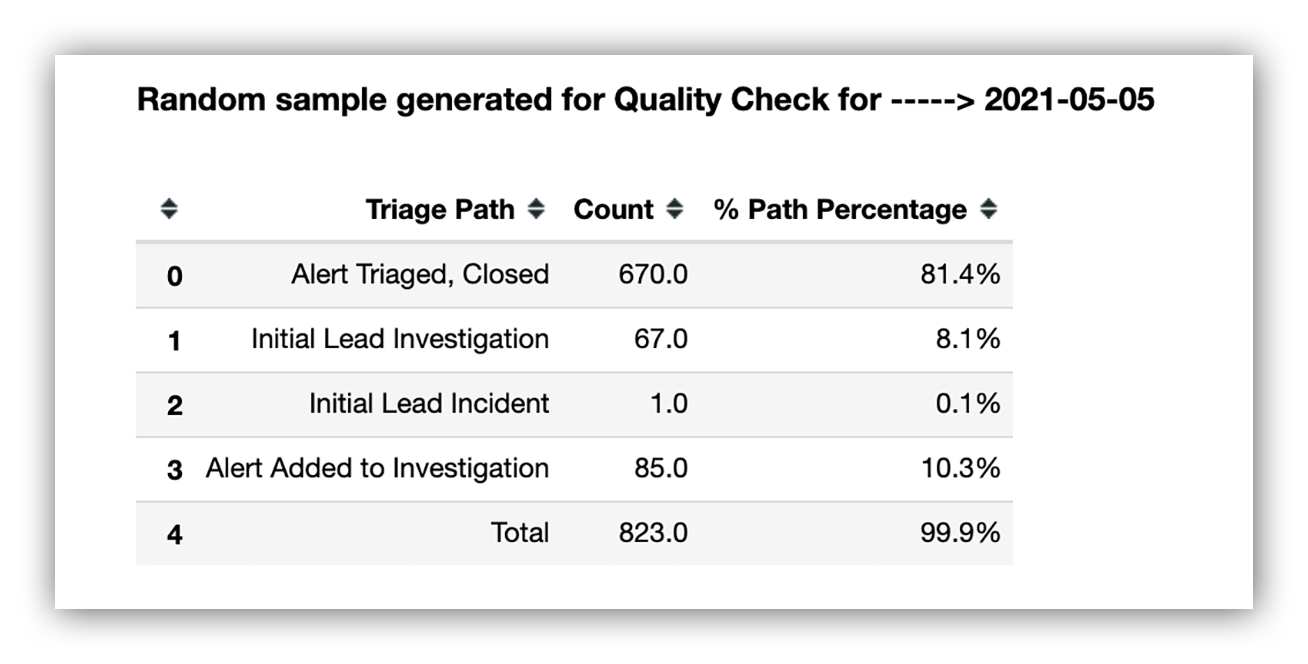

Our Jupyter notebook reads from the Expel Workbench™ APIs to determine how much work we did on May 5, 2021.

In the table below you can see we triaged 672 alerts, performed 67 investigations, ran down one security incident and moved 85 alerts to an open investigation.

Expel SOC QC random selection step

You may be thinking: “But you said you typically handle about 500 alerts and 30 investigations per day.” Great catch. Daily volume varies, and the figures we quote are our typical daily mean. Change-point analysis is your huckleberry for determining it. (More details on change-point analysis here.)
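The change-point idea fits in a few lines of code. The CUSUM-based approach described by Wayne Taylor accumulates each day’s deviation from the overall mean. It estimates the change point where that cumulative sum is furthest from zero. The full method adds bootstrapping to test whether the change is real, then repeats on each segment to find further changes. This sketch covers only the single-change estimate and uses made-up daily counts. It isn’t necessarily the variant Expel runs.

```python
from statistics import mean
from typing import Sequence


def change_point(series: Sequence[float]) -> int:
    """Index where the most likely single shift in the mean begins.

    Simplified CUSUM estimate: accumulate deviations from the overall mean
    and take the point where the cumulative sum is furthest from zero.
    Returns 0 when the series never deviates from its mean.
    """
    avg = mean(series)
    cusum, best_i, best_abs = 0.0, -1, 0.0
    for i, x in enumerate(series):
        cusum += x - avg
        if abs(cusum) > best_abs:
            best_abs, best_i = abs(cusum), i
    return best_i + 1


# Hypothetical daily alert counts: volume steps up partway through.
daily_alerts = [410, 395, 402, 388, 405, 498, 512, 490, 505, 495]
start = change_point(daily_alerts)
# The current daily mean comes from the segment after the change.
print(start, mean(daily_alerts[start:]))
```

Averaging only the latest segment keeps an old regime (or a single busy day like May 5) from dragging the "typical" figure around.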

Next, our Jupyter notebook breaks the work out into three lots, as seen in the image below. Selecting the button within each section pulls in a random sample, sized by AQL. It also pulls in the check sheet specific to that lot.
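Splitting the day’s work into lots and pulling a random sample from each might look like the sketch below. It assumes the work sits in a pandas DataFrame with a hypothetical `work_type` column. Following the change-point note above, it also assumes sample sizes are fixed from the typical daily lots in the sampling table earlier in this post, rather than recomputed from each day’s raw count. `draw_samples` is our own name, not a Workbench API.

```python
from typing import Dict

import pandas as pd

# Sample sizes per lot, from the sampling table earlier in this post
# (sized from the typical daily lot, not from each day's raw count).
SAMPLE_SIZES = {"alert": 20, "investigation": 5, "incident": 3}


def draw_samples(work: pd.DataFrame, seed: int = 0) -> Dict[str, pd.DataFrame]:
    """Split the day's work into lots by work type and sample each lot."""
    samples = {}
    for lot, items in work.groupby("work_type"):
        # Inspect everything when the lot is smaller than its sample size.
        n = min(SAMPLE_SIZES[lot], len(items))
        samples[lot] = items.sample(n=n, random_state=seed)
    return samples


# Hypothetical work log shaped like the May 5, 2021 counts above.
work = pd.DataFrame({"work_type": ["alert"] * 672 + ["investigation"] * 67 + ["incident"]})
samples = draw_samples(work)
print({lot: len(items) for lot, items in samples.items()})
```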

Expel SOC QC notebook lots

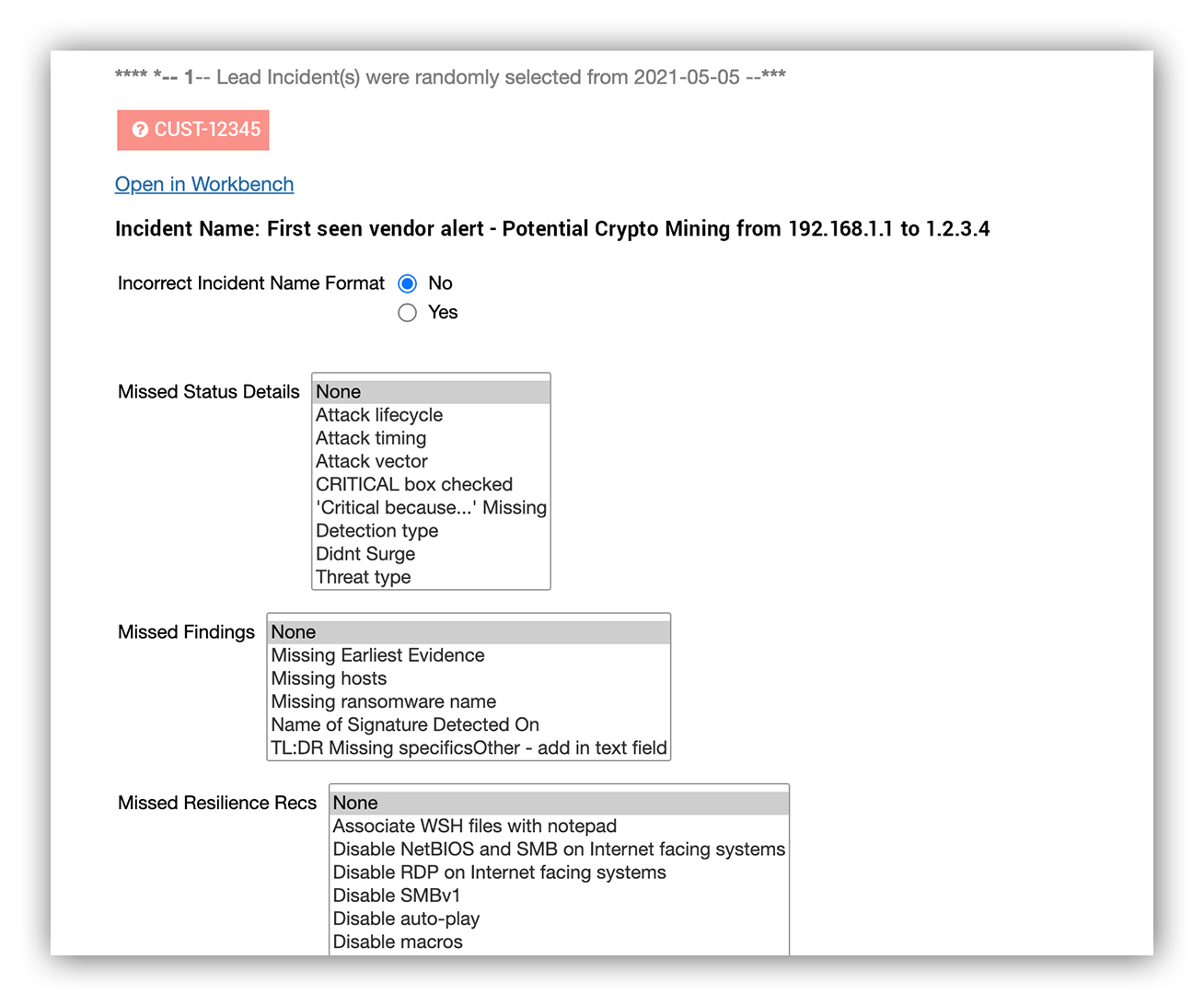

On May 5, 2021, we handled one security incident. Let’s walk through our inspection.

Going back to the image above, if we select the “Review incidents” button, our notebook will show the sample and the QC check sheet specific to that class of work. In this case, we’re looking at incidents.

Expel SOC QC incident check sheet

Our QC check sheet is focused on making sure we’re following the right investigative process.

Did we take the right action? Did we populate the right remediation actions? Did we zig when we should have zagged?

When a defect is found, we remediate the issue, record it and then trend defects by work type. If a lot’s defects for the day exceed what its AQL sampling plan allows, that lot fails.
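Recording defects, trending them by work type and applying the pass/fail rule can be sketched with pandas. In ISO 2859-1 single sampling, a lot is accepted when the number of defects in its sample is at or below the plan’s acceptance number (Ac), and rejected otherwise. The defect log, the column names and the Ac values below are placeholders, not Expel’s.

```python
import pandas as pd

# Hypothetical defect log: one row per defect found during inspection.
defect_log = pd.DataFrame({
    "date": pd.to_datetime(["2021-05-04", "2021-05-04", "2021-05-05",
                            "2021-05-05", "2021-05-05"]),
    "work_type": ["alert", "investigation", "alert", "alert", "incident"],
})

# Illustrative acceptance numbers (Ac) per lot; look up the real ones in the
# ISO 2859-1 tables for your sample size and AQL.
ACCEPTANCE = {"alert": 2, "investigation": 0, "incident": 0}

# Trend: defects per day and work type, with 0 where nothing was found.
trend = (
    defect_log.groupby(["date", "work_type"]).size()
    .unstack(fill_value=0)
    .reindex(columns=list(ACCEPTANCE), fill_value=0)
)

# A lot passes when its defect count is at or below Ac, and fails otherwise.
verdicts = trend.le(pd.Series(ACCEPTANCE))
print(trend)
print(verdicts)
```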

What you’re going to do with the measurements

If we imagine this QC thing as a cycle, what we’re trying to do is (a) measure the quality, (b) learn from the measurements and (c) improve quality. In order to do this, we decided we needed our quality metrics and process to have three attributes:

- The metrics we produce are digestible

- Our quality checks are performed daily, for every shift

- What we uncover will be reviewed and folded into improvements in the system

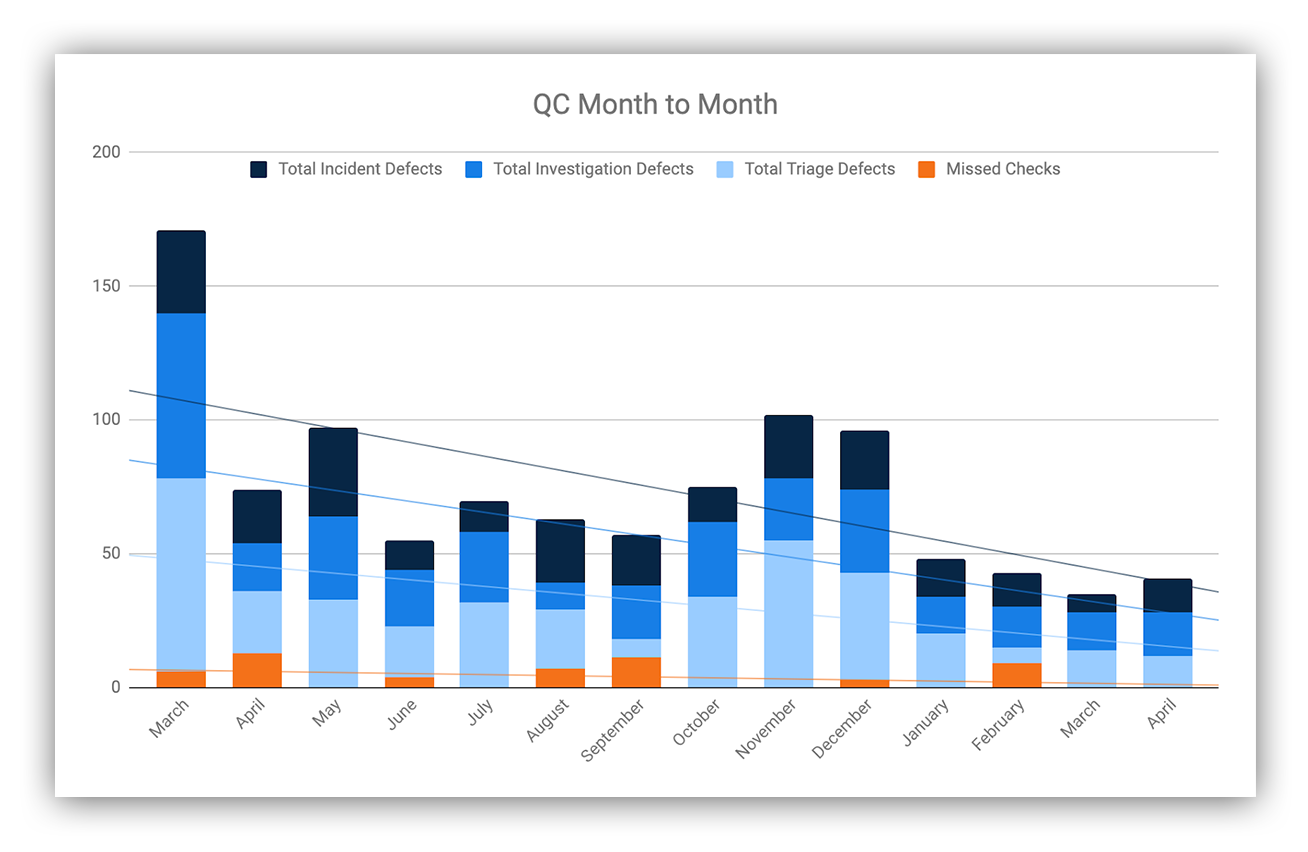

So, from the process above, we can roll up our pass/fail rate as the one digestible metric, which allows us to see where we’re struggling:
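Rolling inspections up into that pass/fail rate takes only a couple of groupbys. In this minimal pandas sketch, the inspection log and its columns are hypothetical. The monthly rate is the headline metric, and the per-work-type rate is one way to see where the failures concentrate.

```python
import pandas as pd

# Hypothetical inspection log: one row per lot inspected per day.
inspections = pd.DataFrame({
    "date": pd.to_datetime(["2021-03-01", "2021-03-01", "2021-03-02",
                            "2021-04-01", "2021-04-01", "2021-04-02"]),
    "work_type": ["alert", "investigation", "alert",
                  "alert", "investigation", "alert"],
    "passed": [True, False, True, True, True, True],
})

# The digestible metric: share of inspections passed, per month.
monthly_pass_rate = inspections.groupby(inspections["date"].dt.to_period("M"))["passed"].mean()

# The same metric by work type shows where we're struggling.
pass_rate_by_type = inspections.groupby("work_type")["passed"].mean()
print(monthly_pass_rate)
print(pass_rate_by_type)
```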

Expel SOC quality pass / fail rate since March 2020

We then deploy technology, training and mentoring to make sure the quality and scale improve over time.

In fact, our SOC quality program is a key driver in a number of recent initiatives including:

- Automated orchestration of Expel alerts for Amazon Web Services (AWS). We detected variance in how individual analysts investigated AWS activity, so we automated it.

- Automated commodity malware and business email compromise (BEC) reporting. Typed input is ripe for defects. As you can imagine, we detected a good number of defects in the “findings” reports for our top two incident classes, so we automated them. Scale and quality both went up!

Recap and final thoughts

Here’s a super quick recap on what we just walked through:

- Use ISO 2859-1 (AQL) to determine sample size.

- Jupyter notebooks help you perform random selection.

- Inspect each random sample using a check sheet to spot defects.

- Count and trend the defects to produce digestible metrics to improve quality.

- Run the QC process every 24 hours.

Steal this mental model:

Expel SOC QC mental model

And remember: you don’t have to trade quality for efficiency.

We hope this post was helpful. We wrote it because it’s something that would have really helped us when we started down the path of measuring SOC quality.

We’d love to hear about your quality program. What works? What didn’t? Success stories? We’re always on the lookout for ways to improve.