Rapid response · 6 MIN READ · BEN SOLOWAY, TYLER WOOD, DAVID OVIATT AND HAROLD HARDING · SEP 7, 2022 · TAGS: Guidance / Phishing

Recently, our SOC witnessed just how quickly attackers can start a phish fry with the spoils of a successful campaign. It probably won’t surprise anyone, but attackers don’t dilly-dally.

When a phishing alert sounded just after midnight EST, at first it seemed like a standard-fare document-sharing phish. By the time the 89th and final submission was in the bucket, we knew a large-scale campaign had successfully hit a customer. In fact, we caught a suspicious login attempt from one of the compromised accounts shortly after we detected the submission of credentials to a phishing site.

Let’s walk through how we triaged the alert.

But a campaign is nothing new…

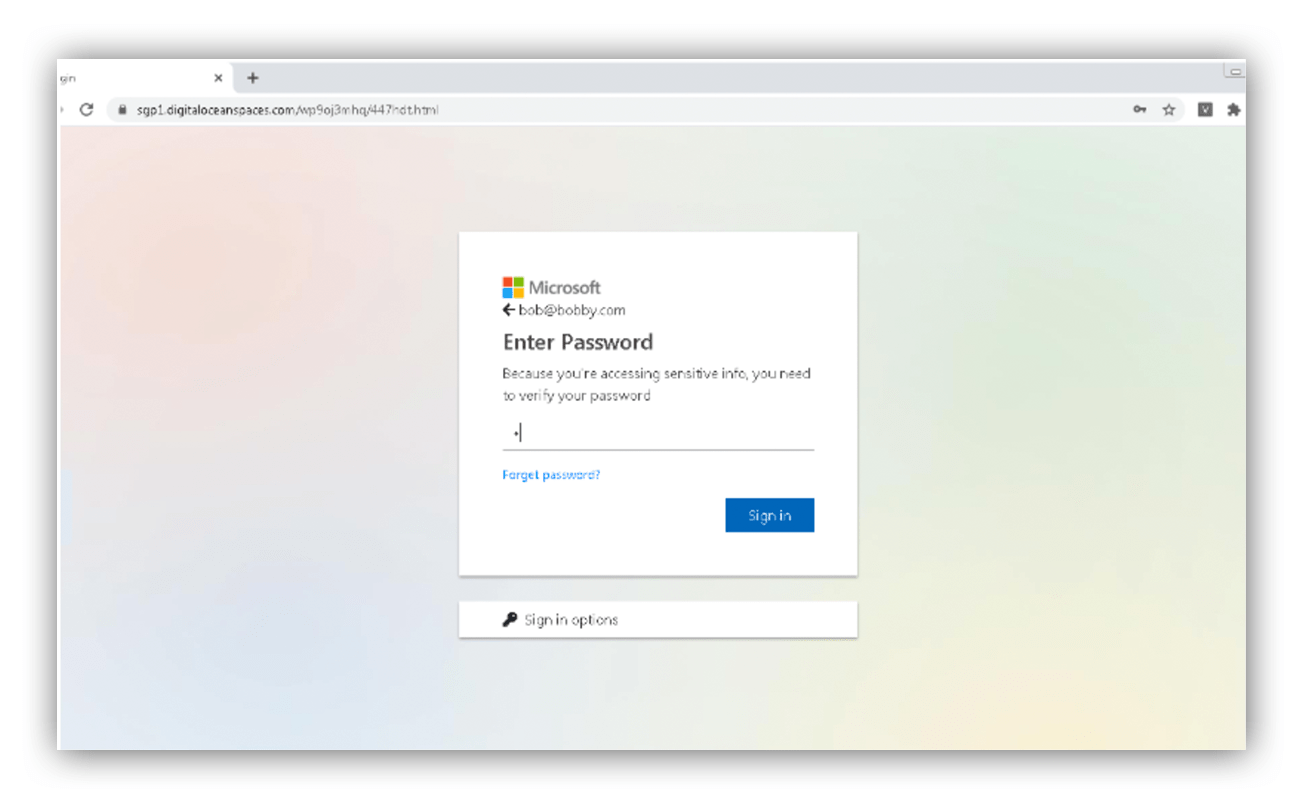

Credential harvesters are one of an attacker’s bread-and-butter initial access tactics, so no surprise so far. An initial URL redirects to a fake login portal (often Microsoft-themed), and sandbox analysis often tells us where credentials get transmitted.

But this particular campaign was really large. It hit users suddenly, and unlike so many others we see, it actually worked. A couple of users got tricked into submitting credentials, and not long after, we saw those credentials used in a login attempt.

So why are we talking about a large, run-of-the-mill phishing campaign? Well, this one had some interesting features, including a browser extension, multiple senders, and different initial URLs. It’s a good example of things going right during triage. And finally, it demonstrates the value of automation for enriching and expediting the process.

How it went down

The first alert was nothing surprising. The email came from an external account, and the body was basically a single image stating that someone had shared a document for review.

Our phishing team is a big fan of Ruxie™, one of the robots we use to automate and enrich the details of a case. She’s fantastic at parsing essential information for our analysts. This includes basics like sender addresses, reply-to’s and return-paths, as well as embedded URLs in any given email body, whether in image references, hyperlinks, or text. She even runs API queries to pull back email reputation details and available Clearbit information on the sender organization. We use these pieces of information to orient ourselves to the story of each email, and then take action if needed.
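To make that parsing step concrete, here is a minimal sketch of this kind of header and URL extraction using only Python’s standard library. It is not how Ruxie is implemented. The function name `extract_indicators`, the output keys, and the deliberately simple URL pattern are all assumptions for illustration.

```python
import re
from email import message_from_string
from email.utils import parseaddr

# Simple illustrative pattern: stops at whitespace, quotes, and angle brackets.
URL_RE = re.compile(r"https?://[^\s\"'<>)]+", re.IGNORECASE)


def extract_indicators(raw_email: str) -> dict:
    """Pull sender-related headers and embedded URLs out of a raw email."""
    msg = message_from_string(raw_email)

    # Collect every text part (plain or HTML) so that URLs in hyperlinks,
    # image references, and visible text are all searched.
    bodies = []
    for part in msg.walk():
        if part.get_content_maintype() == "text":
            payload = part.get_payload(decode=True)
            if payload:
                charset = part.get_content_charset() or "utf-8"
                bodies.append(payload.decode(charset, errors="replace"))

    text = "\n".join(bodies)
    return {
        "from": parseaddr(msg.get("From", ""))[1],
        "reply_to": parseaddr(msg.get("Reply-To", ""))[1],
        "return_path": parseaddr(msg.get("Return-Path", ""))[1],
        "urls": sorted(set(URL_RE.findall(text))),
    }
```

A mismatch between the `from`, `reply_to`, and `return_path` values is often one of the first things an analyst checks, which is why the sketch returns them side by side.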

In this case, one of our team members reviewed the initial alert in our phishing queue, examined some of Ruxie’s details, and immediately knew something was phishy. The analyst moved the alert into investigation status and started inspecting key features.

If we determine malicious intent, we need to know where an attacker is directing victims, so that we can use logs to determine if any users mistakenly took the bait. We’ll usually submit a URL to Ruxie, who integrates with a sandbox analyzer and further enriches the outcome information right from Workbench.

Here, the analyst took the embedded URL and followed it to a fake document review page hosted on a Jotform domain. He clicked on the “review document” link and… that’s where things ended. The fake login portal wouldn’t manifest.

There are tons of reasons this might happen. Most often, the companies hosting the phishing content get wise to it and shut the page down. But sometimes the attackers are savvy enough to use sandbox detection mechanisms, which make IOCs a little tougher to track down. Our analyst began to fiddle with the URL to see if he could get to the goods.

Meanwhile, deep in the virtual SOC, more alerts started hitting the queue. Other analysts determined quickly that these were likely malicious emails. However, as part of our orienting process, they also recognized that the first analyst was working on a submission with the same sender domain, so these were likely related. After some quick discussion, we agreed that a campaign was under way and began adding the new alerts to the investigation. Ruxie quickly picked up on this and began adding the additional submissions, freeing the team up to focus on “human” analysis tasks.

Then another related submission hit the queue. A different sender domain, a slightly different embedded URL, nearly identical body… clearly the same campaign. At this point, the alerts were coming in pretty quickly, and our initial analyst was seeing several different flavors of the same email. Another analyst jumped in to help track down IOCs and investigate whether there was evidence of compromise, and this latest email was the ticket.
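The two signals our analysts used to tie submissions together (a shared sender domain, or a nearly identical body with a different URL) can be sketched roughly as follows. This is an illustration under stated assumptions, not the logic Ruxie or Workbench actually uses. The field names `from` and `body`, the 0.9 similarity threshold, and the greedy grouping are all hypothetical choices.

```python
import re
from difflib import SequenceMatcher

URL_RE = re.compile(r"https?://\S+", re.IGNORECASE)


def normalize_body(body: str) -> str:
    """Mask URLs and collapse whitespace so small variations don't matter."""
    no_urls = URL_RE.sub("<url>", body)
    return " ".join(no_urls.lower().split())


def sender_domain(address: str) -> str:
    return address.rsplit("@", 1)[-1].lower()


def is_related(a: dict, b: dict, threshold: float = 0.9) -> bool:
    """Related if the sender domain matches or the bodies are near-identical."""
    if sender_domain(a["from"]) == sender_domain(b["from"]):
        return True
    ratio = SequenceMatcher(
        None, normalize_body(a["body"]), normalize_body(b["body"])
    ).ratio()
    return ratio >= threshold


def group_campaigns(submissions: list, threshold: float = 0.9) -> list:
    """Greedy grouping: each submission joins the first campaign it relates to.

    Note: this simple version does not merge two existing campaigns that a
    later submission happens to link together.
    """
    campaigns = []
    for sub in submissions:
        for campaign in campaigns:
            if any(is_related(sub, member, threshold) for member in campaign):
                campaign.append(sub)
                break
        else:
            campaigns.append([sub])
    return campaigns
```

Masking the URL before comparing bodies is what lets a submission with a different sender and a different link still land in the same campaign.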

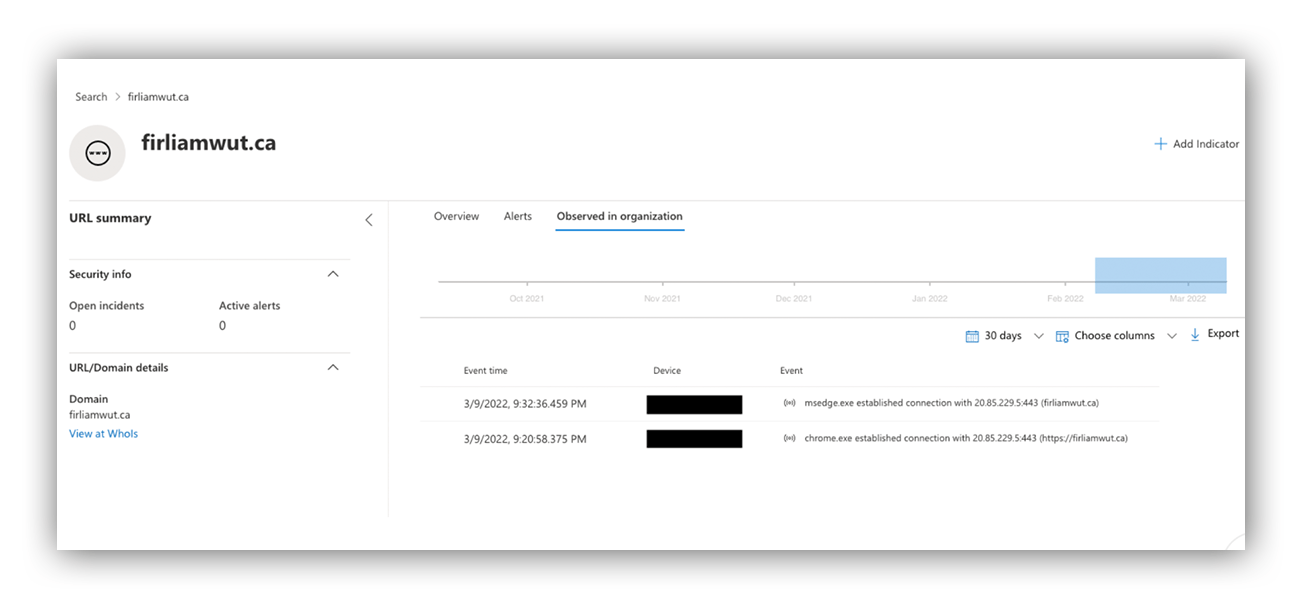

As far as we can tell, the bulk of the initial submissions led to pages that had already been taken down by the content-hosting platforms. But this latest one was still live, and we were able to determine exactly where credentials were being sent: an 18-day-old domain with a Canadian TLD. So we launched some Workbench queries to our customer’s endpoint technology to see if any successful network connections had been made to the malicious URLs and domains. Unfortunately, the queries started returning results.
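Domain age is a cheap signal worth computing whenever a registration date is available. The sketch below assumes you already have the creation date from a WHOIS or reputation lookup (not shown). The 30-day cutoff and the example dates are hypothetical, chosen only to illustrate the arithmetic.

```python
from datetime import date


def domain_age_days(created: date, observed: date) -> int:
    """Number of days between registration and the day we saw the domain."""
    return (observed - created).days


def is_newly_registered(created: date, observed: date, max_age_days: int = 30) -> bool:
    """Flag domains younger than an (assumed) 30-day cutoff."""
    return 0 <= domain_age_days(created, observed) <= max_age_days


# Hypothetical dates for illustration only.
age = domain_age_days(date(2022, 8, 1), date(2022, 8, 19))
```

A young domain is not proof of malice on its own, but combined with a credential form posting to it, it is a strong reason to keep digging.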

This doesn’t always mean credentials are compromised. Often we’ll see the recipient of a phish click a link and make it as far as the fake login portal before they realize the page is bogus and close out. They then submit the phish to us for analysis, and we’ll see those connections, but there’s no evidence of compromise.

However, during our sandbox analysis, we observed only POST requests to the new domain, and only after credentials were submitted to the harvester. That means we treat any traffic to the new domain observed in the customer environment as a submission of credentials, and thus as evidence of an active compromise.
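That reasoning translates into a simple filter over network telemetry: any successful connection to the credential-receiving domain marks the user as compromised. The sketch below assumes the events have already been exported from the endpoint tool as dictionaries. The field names `user`, `remote_url`, and `success` are hypothetical and will differ by product.

```python
from urllib.parse import urlsplit


def host_matches(url: str, domain: str) -> bool:
    """True if the URL's host is the domain itself or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


def users_who_submitted(events: list, exfil_domain: str) -> list:
    """Users with at least one successful connection to the exfil domain.

    Connections that only reach the lure page (and never the exfil domain)
    are intentionally ignored, since they don't show a credential submission.
    """
    return sorted(
        {
            event["user"]
            for event in events
            if event.get("success") and host_matches(event["remote_url"], exfil_domain)
        }
    )
```

Matching on the parsed hostname rather than a substring avoids false hits on look-alike domains that merely contain the same text.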

Submission of credentials to an attacker constitutes an incident requiring immediate action. Because our Workbench queries were returning results, we wanted to verify them by pivoting into the endpoint tech (in this case Defender ATP) before we promoted the investigation and woke the customer up. We submitted queries for the domain to the console, and… Shazam! We confirmed that two users had made successful network connections to the malicious domain via Chrome/Edge.

An interesting twist on an old method

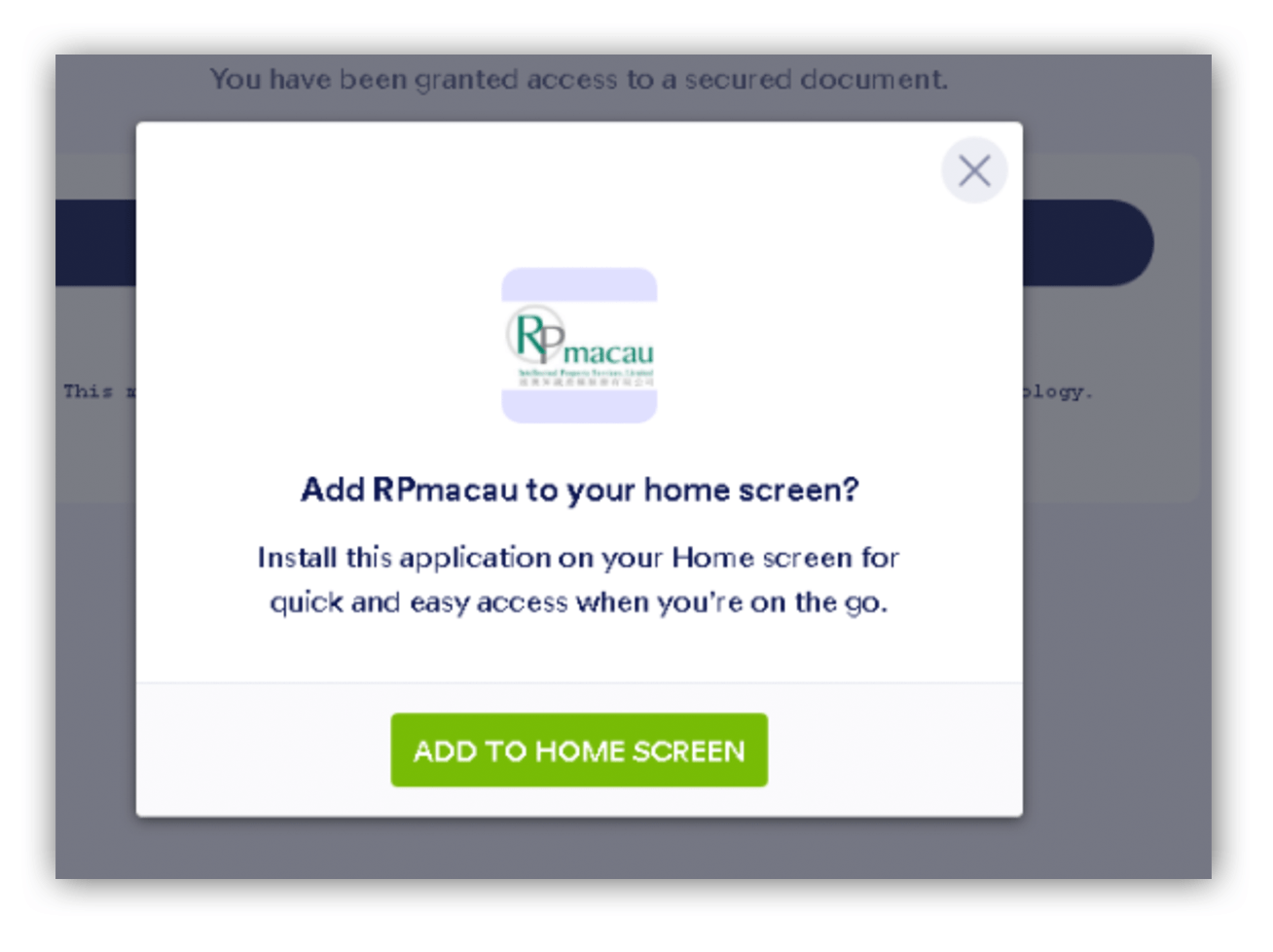

Legitimate services are often employed in phishing attempts, and it’s nothing new to see a commonly used service in a malicious email to add a sense of legitimacy. Jotform, a legitimate online form-building service, was abused during this attack, but the attackers used a trick we hadn’t seen before. Jotform has a feature that adds an extension to the browser for quick access to forms that can be managed through Jotform’s premade templates. The attackers leveraged this feature to open the credential harvester in a separate window, which also acted as a bookmark to bring the user directly to the malicious login page.

Threshold met. Incident created. Customer notified.

At this point, the investigation was promoted to an incident and notifications were sent to the customer’s security team automatically. Our focus shifted to getting remediations to them as quickly as possible, with the most important task being resetting credentials. We quickly blocked the malicious domains, removed the emails, and blocked the senders. We also clarified some of our findings with the customer’s security team. Once the customer acknowledged our findings, the incident was assigned to them. The phishing team concluded its investigation and returned to triaging alerts as normal.

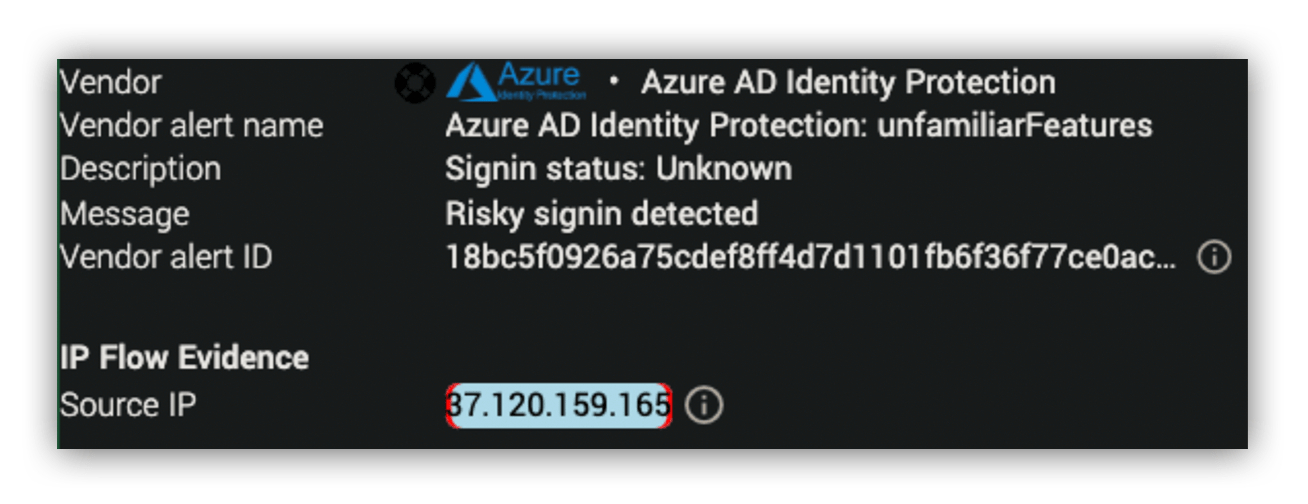

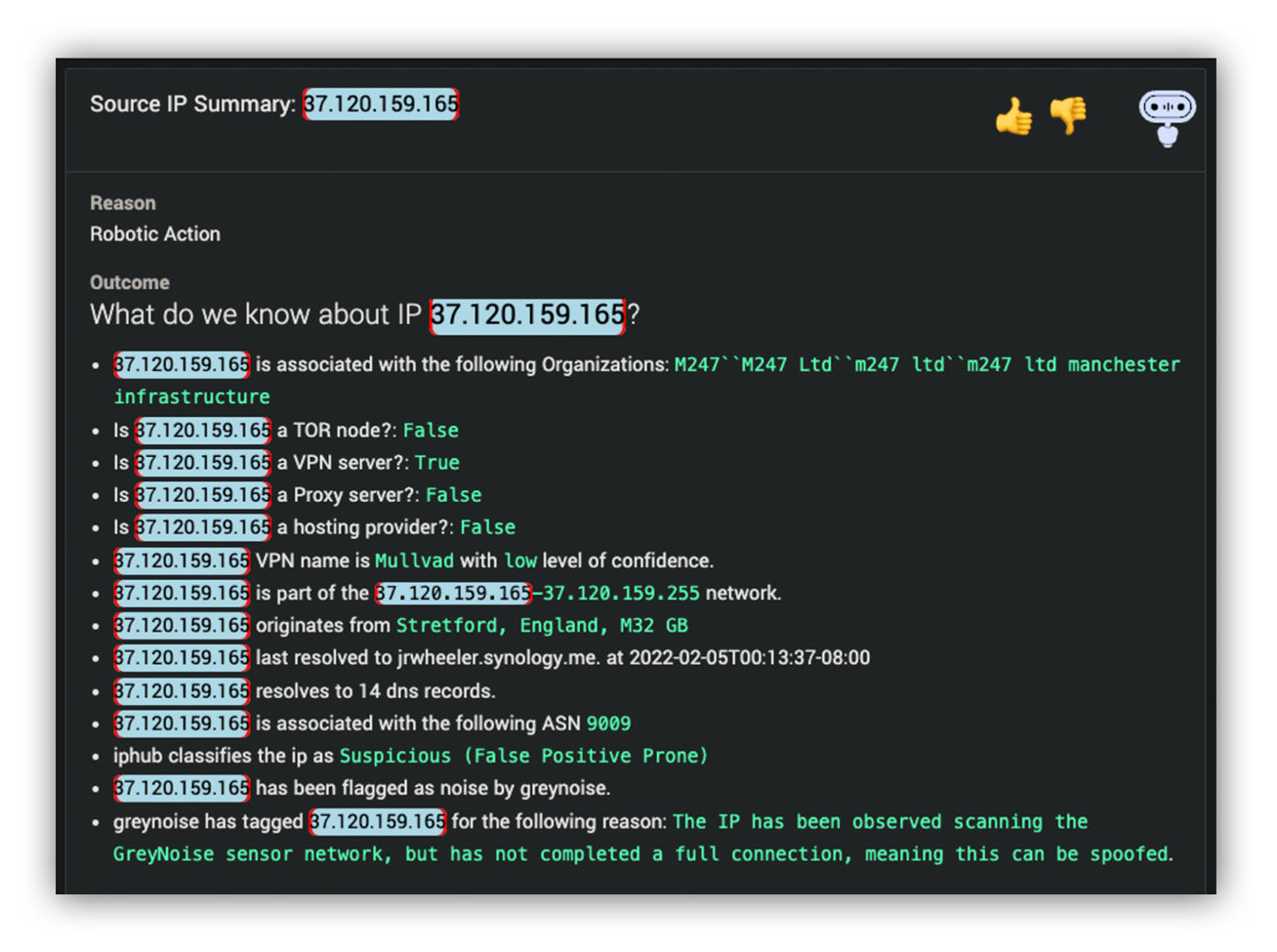

Of course, this isn’t quite where the story ended. A few hours later, an Azure AD Identity Protection alert fired for a risky sign-in associated with one of the compromised accounts from the phishing incident. An MDR analyst picked up the alert and immediately realized that it was suspicious.

Our analysts have a lot of options for enrichment and correlation, and a few quick searches revealed that the account was part of the phishing incident. The analyst informed the customer’s security team of the login attempt and quickly received confirmation that credentials for the account had already been reset. However, for tracking purposes, and to allow the customer to test some internal automation, we elevated the login alert to an incident.
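The correlation step here amounts to asking whether the account in a new sign-in alert appears in a recent incident. A rough sketch, assuming incidents and alerts are available as plain dictionaries: the field names (`account`, `accounts`, `time`, `id`) and the seven-day lookback window are hypothetical, not a description of our tooling.

```python
from datetime import datetime, timedelta


def correlate_sign_in(alert: dict, incidents: list, window: timedelta = timedelta(days=7)) -> list:
    """Return IDs of earlier incidents that involved the alert's account.

    An incident matches when it lists the same account (case-insensitive)
    and happened no more than `window` before the sign-in alert.
    """
    matches = []
    for incident in incidents:
        compromised = {account.lower() for account in incident["accounts"]}
        elapsed = alert["time"] - incident["time"]
        if alert["account"].lower() in compromised and timedelta(0) <= elapsed <= window:
            matches.append(incident["id"])
    return matches
```

Even a lookup this simple turns an isolated risky sign-in into a much higher-confidence finding, because the analyst immediately knows how the credentials were likely obtained.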

Some takeaways

It’s essential that when we promote a campaign to an incident, the customer is notified immediately so they can respond. In this case, they did take action, and that’s a win, especially since it may have prevented an attacker’s successful login.

More importantly, the case demonstrates redundancy of detection at its best. Our phishing team caught the successful credential harvesting and our MDR team caught the login attempt. If one had failed, the other would have caught it, and our customer would have been notified quickly either way. That’s a win as well.

We’ll leave you with a few other thoughts for you to nosh on:

If nothing else, this case serves as a reminder of just how quickly attackers can transition from stealing the keys to knocking on the front door. They aren’t waiting around for your weekend to be over or for you to get back from the gym. They’re going to take action quickly.

Make sure your colleagues know what to look for, and make it easy for them to report. Educate your organization. Eighty-nine submissions to Expel don’t reveal the full scope of the campaign. They only tell us how many users saw it, were sharp enough to recognize it as a phish, and then proactively submitted it as malicious. If the submitters had simply ignored the email, the phishing team couldn’t have recognized the compromise. Quite honestly, 89 submissions for a phishing campaign? That’s not bad. And yet…

With a phishing campaign this size, you’re more likely to see a weak link in the organization compromised. Don’t ignore a large-scale campaign. Give those IOCs a second pass, because an adversary only has to succeed once. These campaigns often use slightly different URLs and redirects but transmit credentials to the same place. Try to understand the end goal so you can stop them.
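One way to act on that last point is to group the initial URLs by where credentials finally land. The sketch below assumes you have sandbox results as a mapping from each initial URL to the POST destinations observed during detonation. That input shape and the function name `group_by_exfil_host` are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_exfil_host(sandbox_results: dict) -> dict:
    """Map each credential-receiving host to the initial URLs that lead to it."""
    groups = defaultdict(set)
    for initial_url, post_urls in sandbox_results.items():
        for post_url in post_urls:
            host = urlsplit(post_url).hostname
            if host:
                groups[host.lower()].add(initial_url)
    # Sort for stable, readable output.
    return {host: sorted(urls) for host, urls in groups.items()}
```

If many different lures collapse onto one host, blocking and hunting on that single destination covers the whole campaign, including variants nobody has reported yet.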