Cloud security · 6 MIN READ · IAN COOPER, CHRISTOPHER VANTINE AND SAM LIPTON · AUG 3, 2021 · TAGS: Guidance

Let’s face it: Detection and response in the cloud is greenfield. Although it feels like every other month we’re reading about the latest cloud compromise, there are often few details about exactly what happened and what you can do to prevent the same kind of thing happening to you.

Organizations are just getting comfortable figuring out where their attack surface really is in the cloud. For these reasons, Expel’s approach to detection research has required some creativity.

How does one build a detection strategy essentially from scratch?

In our case, we like to work together to fundamentally understand a new technology, get up to date with where the community has taken the threat research, theorize any additional threat models against that tech and then build a strong overall detection strategy as a team.

The Detection and Response Engineering team at Expel in many ways operates like a software team. To help us organize work, we use the Scrum framework and generally operate in one- or two-week “sprints” – timeboxed iterations of our detection strategies. Recently, we ran a two-week Google Cloud Platform (GCP) detection sprint and wanted to share our process.

Interested in how you can start building a strategy of your own? Strap in and we’ll take you along for the journey to building this process here at Expel.

Our process for building detections

Threat modeling and threat research are no small tasks. We usually spend the entire first week of a detection sprint reading (hello API docs!), letting ideas bake, challenging each other and experimenting in cloud infrastructure environments to test security boundaries.

In general, our process follows the steps below.

- Ideate: Throw ideas against a wall.

- Evaluate: Test the ideas.

- Create: Release the dragons.

- Appreciate: Celebrate (and monitor performance).

Ideate

It all starts with finding inspiration and brainstorming detection ideas. Oftentimes, our detection sprints build upon previous iterations of detection work and research, and the result is a deeper understanding of the technology at hand (and its security shortcomings).

One good place to start with GCP, or any cloud infrastructure, is understanding their implementation of Identity and Access Management (IAM). If you’re feeling green in GCP and want to learn how GCP IAM compares to the other cloud providers, Dylan Ayrey and Allison Donovan break it down very well in the beginning of their Defcon talk.

In fact, we’ll use one of the attack techniques discussed in their talk as an example as we walk through our detection sprint process.

GCPloit, a Python-based red team tool built by the Defcon presenters, serves to exploit potential imbalances in a given GCP environment. (In fact, cloud red team tools are great starting points for building an initial suite of threat detections.) Specifically, the tool takes advantage of the ability to impersonate service accounts – a dangerous, yet common privilege in GCP.

Picking apart the code for GCPloit reveals the specific gcloud commands used to list available service accounts, deploy cloud functions attached to each available service account (necessary to expose the service account credentials), and ultimately capture the credentials to each service account.

From there, the attacker can use the service account credentials to impersonate even more accounts, or use the new privileges gained to continue moving towards their goals in a variety of other ways.

As discussed in the Defcon talk, it’s possible for an attacker to gain access to multiple GCP projects through this process.

Now it’s time to see if we can build a successful detection based on this functionality.

Evaluate

Once we understand GCP IAM and some of the most impactful security weaknesses at hand, we build and evaluate detections in our testing environment.

With cloud detections, one part of our evaluation process includes determining if the detection is looking for attacker behaviors or simply detecting risky configuration changes (although the lines between the two are often blurred).

There’s no shortage of open source detections for risky cloud configuration changes. There’s also a whole market that specializes in these (Cloud Security Posture Management anyone?).

Our goal for this sprint was to find evil. Plain and simple. We were all in agreement on which attack paths are the scariest and remain readily available for an adversary (malicious service account impersonation… begone you dastardly monster…).

Multiple GCP detection sprints have resulted in detection ideas for a variety of malicious service account impersonation behaviors. Here are a few examples:

- Burst in cloud function deployments: Is an attacker programmatically capturing service account credentials?

- Unusual cloud functions: Are cloud function deployments unusual for this user?

- Service accounts making org level policy changes: Is an attacker leveraging stolen credentials to gain additional access in the environment?
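As one illustration, the “unusual cloud functions” idea above can be sketched as a simple per-user baseline. This is a minimal sketch, not Expel’s detection logic: the `DeploymentBaseline` class, its method names and the `min_history` parameter are all hypothetical, and a production baseline would likely also age out old history.

```python
from collections import defaultdict


class DeploymentBaseline:
    """Hypothetical per-user baseline of cloud function deployments."""

    def __init__(self, min_history: int = 1):
        # A user needs at least this many past deployments to count as "usual".
        self.min_history = min_history
        self._counts = defaultdict(int)

    def learn(self, user: str) -> None:
        """Record one historical deployment for this user."""
        self._counts[user] += 1

    def is_unusual(self, user: str) -> bool:
        """True if this user has deployed fewer functions than the baseline requires."""
        return self._counts.get(user, 0) < self.min_history
```

A user who deploys functions every day would be learned into the baseline and ignored, while a first-time deployer would be flagged for a closer look.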

Back to the detection we’re focusing on – a service account impersonation detection.

Remember, cloud function deployments can be used by the attacker to capture new credentials while impersonating a service account. So what data do we need to effectively write a detection for this?

Let’s home in on what native log data exists in GCP.

Finding the right sources of evidence

GCP provides several log sources, but not all translate into effective detection sources.

- System Event audit logs: Capture machine data, but not user actions.

- Data Access audit logs: Record granular resource access (typically not enabled due to high volume).

- Admin Activity audit logs: Capture control plane changes (including user actions).

For these particular attacker behaviors, the Admin Activity log includes the evidence we need to write our detections.

Since these logs capture configuration changes to resources in GCP, they give us insights into the events we want to correlate when searching for evil. For example, when a cloud function is deployed, we can track this activity through the Admin Activity audit log.
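To make that concrete, here is a rough sketch of pulling a cloud function deployment out of an Admin Activity audit log entry. It assumes the entry has already been exported and parsed into a Python dict. The field names (`logName`, `protoPayload.serviceName`, `protoPayload.methodName`, `protoPayload.authenticationInfo.principalEmail`, `protoPayload.resourceName`) and the sample values follow our reading of the Cloud Audit Logs format, so verify them against your own logs. The `extract_deployment` helper is hypothetical.

```python
from typing import Optional

# Assumed values based on the Cloud Audit Logs format; confirm in your environment.
ADMIN_ACTIVITY_SUFFIX = "cloudaudit.googleapis.com%2Factivity"
FUNCTIONS_SERVICE = "cloudfunctions.googleapis.com"
DEPLOY_METHOD_SUFFIXES = ("CreateFunction", "UpdateFunction")

# Illustrative entry only; every value here is made up.
SAMPLE_ENTRY = {
    "logName": "projects/example-project/logs/cloudaudit.googleapis.com%2Factivity",
    "timestamp": "2021-08-03T12:00:00Z",
    "protoPayload": {
        "serviceName": "cloudfunctions.googleapis.com",
        "methodName": "google.cloud.functions.v1.CloudFunctionsService.CreateFunction",
        "resourceName": "projects/example-project/locations/us-central1/functions/fn-1",
        "authenticationInfo": {"principalEmail": "dev@example.com"},
    },
}


def extract_deployment(entry: dict) -> Optional[dict]:
    """Return who deployed which function, or None if the entry is something else."""
    if not entry.get("logName", "").endswith(ADMIN_ACTIVITY_SUFFIX):
        return None  # not an Admin Activity entry
    payload = entry.get("protoPayload", {})
    if payload.get("serviceName") != FUNCTIONS_SERVICE:
        return None  # not a Cloud Functions event
    if not payload.get("methodName", "").endswith(DEPLOY_METHOD_SUFFIXES):
        return None  # not a create or update call
    return {
        "user": payload.get("authenticationInfo", {}).get("principalEmail"),
        "function": payload.get("resourceName"),
        "timestamp": entry.get("timestamp"),
    }
```

The user and function name returned here are the two values a burst or baseline detection needs to correlate on.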

Create

Now, it’s time to write some detections.

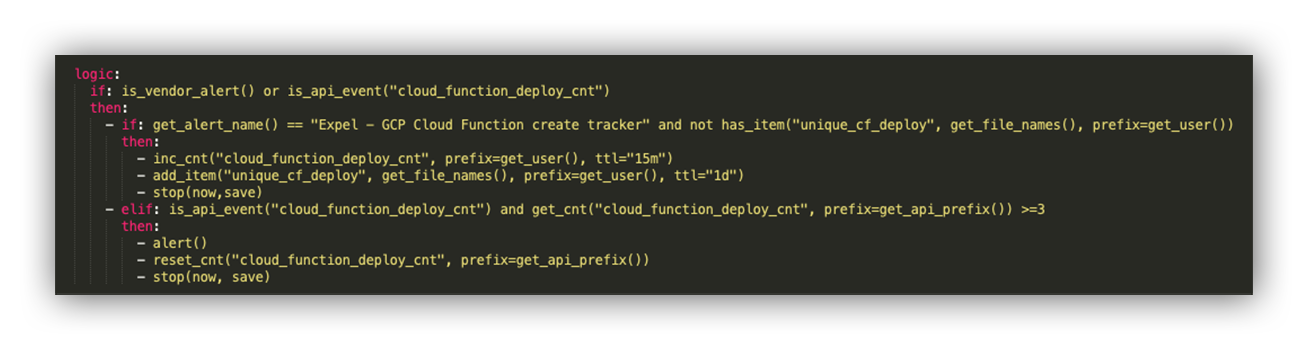

Meet Josie, our own Python-based detection engine we use here at Expel. Let’s walk through what service account impersonation detection logic looks like for Josie to evaluate.

Snippet of Expel detection logic relating to bursts in cloud function deployments

At a high level, this logic watches for any instance of a cloud function deployment and records the user responsible for the event. Josie will remember the user responsible for this action for 15 minutes. If the same user deploys more than three unique cloud functions in that 15-minute window, Josie will generate an alert for analysts to look into the activity.

To make sure this detection logic is sound, we’ll deploy this detection in a draft state to allow us to see how it performs. Based on its performance, we may decide to adjust the detection threshold or suppress users associated with frequent/programmatic cloud function deployments.
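For readers without access to Josie, the burst logic and the tuning knobs described above can be approximated in plain Python. This is a sketch under stated assumptions, not Josie’s implementation: the `BurstDetector` class and its parameters are hypothetical, it treats the 15 minutes as a sliding window, and it assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=15)
THRESHOLD = 3  # alert when a user deploys MORE than this many unique functions


class BurstDetector:
    """Hypothetical sliding-window detector for bursts of function deployments."""

    def __init__(self, window=WINDOW, threshold=THRESHOLD, suppressed_users=()):
        self.window = window
        self.threshold = threshold
        # Users known to deploy functions frequently or programmatically.
        self.suppressed = set(suppressed_users)
        self._events = defaultdict(deque)  # user -> deque of (timestamp, function)

    def observe(self, user: str, function: str, timestamp: datetime) -> bool:
        """Record one deployment; return True if it should raise an alert."""
        if user in self.suppressed:
            return False
        events = self._events[user]
        events.append((timestamp, function))
        # Forget deployments that fell out of the window (assumes ordered input).
        while events and timestamp - events[0][0] > self.window:
            events.popleft()
        unique_functions = {name for _, name in events}
        return len(unique_functions) > self.threshold
```

Raising `threshold` or adding a noisy CI account to `suppressed_users` corresponds to the two adjustments mentioned above. Redeploying the same function repeatedly never alerts, because only unique function names are counted.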

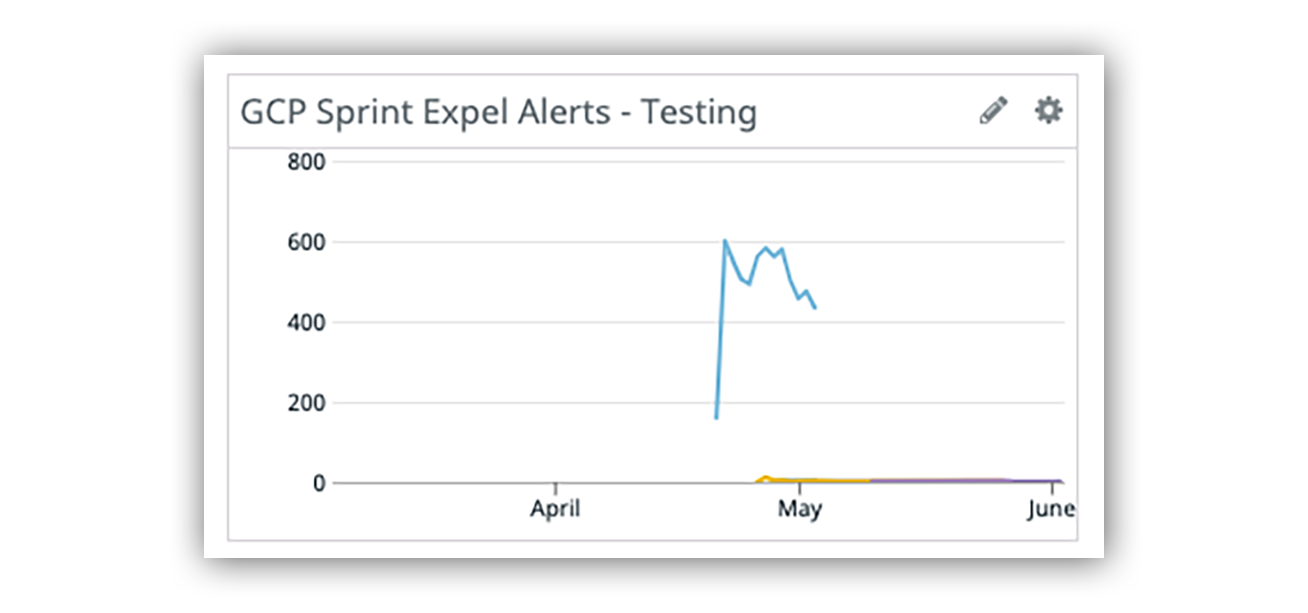

Once we’ve written a detection and released it into our testing environment, we like to track the detection’s performance in Datadog to measure patterns and general volume. The Datadog graph shown here makes it pretty obvious which detections needed some work and when they were dialed in.

Datadog graph tracking the volume of Expel’s GCP alerts in the testing/development phase

After making tweaks to the draft detections to adjust for volume, and ensuring they detect a real threat scenario, we prepare to release the detections into production.

Preparing for release means ensuring that the detection has a strong description, suggested triage steps, references and decision support (with the help of our automated robot Ruxie). The goal is to set our analysts up for success if the detection triggers an alert.

One final thing – any cowboy can shoot from the hip and come up with novel detection ideas. What’s more challenging is pressure testing the investigative process for those detections.

If you can’t investigate it easily, then it’s probably not a good alert to surface to an analyst. With that said, any good detection should come prepackaged from the detection engineering team with some investigative questions attached to help triage any generated alerts.

Appreciate

Detections are out, cue the jazz hands.

We still like to keep tabs on the newly released detections, however, and have Datadog monitors in place should any of the detections start behaving erratically. In good Scrum fashion, we like to retro our sprint process and look for ways to get better.
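What “behaving erratically” means will vary by team. As one hedged illustration, a detection’s daily alert count can be compared against its own recent history. This is not how Datadog monitors work internally; the `is_erratic` function, the three-sigma rule and the `min_floor` parameter are all assumptions made for this sketch.

```python
from statistics import mean, pstdev


def is_erratic(history, today, sigmas=3.0, min_floor=5):
    """Flag today's alert count if it sits far above the detection's recent norm.

    history   -- alert counts from previous days (hypothetical input)
    today     -- alert count for the current day
    sigmas    -- how many standard deviations above the mean to tolerate
    min_floor -- never flag counts at or below this, to avoid noise on quiet detections
    """
    if len(history) < 2:
        return False  # not enough data to judge
    limit = max(mean(history) + sigmas * pstdev(history), min_floor)
    return today > limit
```

A detection that normally fires two to four times a day and suddenly fires 40 times would be flagged, which is a good prompt to revisit its threshold or suppressions.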

After iterating upon our detection work and seeking to protect against both new and known threat scenarios, we’re confident in our ability to tackle GCP attacks. Using service account impersonation as a shining example, our coverage for this threat scenario and other kinds of evil in GCP is now significantly stronger.

Sprinting ahead

When Expel started detection work on GCP, it was a whole new world to explore. Through detection sprints like this one, we can learn how IAM works in GCP (and other cloud platforms), grow our understanding of the attack surface as a team and build up a meaningful detection strategy.

This is a reusable process that we’ve found to work well no matter what tech is targeted. So if you find yourself in a similar situation (staring down a new area of risk with little detection inspiration to start from), following a similar iterative process of discovery, experimentation and keeping a close eye on your resulting detections should serve you well.

As for us – we’re on to the next one (and we’ll keep sharing along the way)!