Current events · 4 MIN READ · BRANDON DOSSANTOS · NOV 13, 2023 · TAGS: tech stack

When it comes to hacker activities post-compromise, inbox rule manipulation can be an attacker’s Swiss Army knife. Check out what we’ve learned from analyzing a dataset of true positive Microsoft 365 Outlook rule events.

Attackers are interested in the contents of a user’s inbox. Even if it’s not the actual treasure chest, it can be a good place to start hunting for the map (and maybe even the keys). Attackers especially love it when they can automate what happens to a particular inbox message. Compounding the issue, most people trust the messages they receive (unless those messages exhibit some overtly suspicious characteristics).

Inbox rule manipulation isn’t a novel attacker technique, but it can be quite difficult to accurately alert on since inbox rule creation and management exist for valid reasons. Here we cover some of our own research on how to spot high-fidelity inbox manipulation tactics.

Understanding an Outlook rule

First, let’s go over how an inbox rule is structured in Microsoft 365 (M365) Outlook. At a high level, an Outlook rule can consist of a name, keywords, action, and a folder destination. There may be some other elements, but for the most part, we’re interested in these four parameters.

- Name: visual label for the rule

- Keywords: words or phrases that trigger an action

- Action: what the rule will do to the message when matched

- Folder: the name of the folder where the messages are stored
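To make the four parameters concrete, here is a minimal sketch of a rule as a small Python data structure. The class name `InboxRule`, its field names, and the `matches` helper are our own illustrative choices, not Outlook's internal model, and the matching logic is deliberately simplified.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InboxRule:
    """Illustrative model of the four rule parameters (hypothetical names)."""

    name: str                                          # visual label for the rule
    keywords: list[str] = field(default_factory=list)  # words or phrases that trigger the action
    actions: list[str] = field(default_factory=list)   # what the rule does to a matching message
    folder: Optional[str] = None                       # destination folder, if messages are moved

    def matches(self, subject: str, body: str = "") -> bool:
        """Return True if any keyword appears in the subject or body.

        Simplifying assumption: a rule with no keywords matches every message.
        """
        if not self.keywords:
            return True
        text = f"{subject} {body}".lower()
        return any(keyword.lower() in text for keyword in self.keywords)


# A benign example: file newsletters into a folder for viewing later.
newsletter_rule = InboxRule(
    name="Newsletters",
    keywords=["newsletter", "unsubscribe"],
    actions=["MoveToFolder", "MarkAsRead"],
    folder="Reading list",
)

print(newsletter_rule.matches("Weekly Newsletter"))
```

The same structure describes both helpful and harmful rules, which is what makes this technique hard to alert on.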

A user can configure a rule with many different parameter combinations. Here are some valid rule configuration use cases:

- Delete or redirect spam messages

- Organize inbound messages to a folder for viewing later

- Mark specific messages as read

How attackers use Outlook rules

Given the above examples, you’re probably already thinking of the many ways rules can be exploited. What may not be obvious, though, is why an attacker would create a rule after compromising the system they targeted. Hiding the email messages that notify the user of changes to critical systems, or ask the user to confirm them, keeps that user blind to what the attacker is doing. That helps the attacker move around undetected, which is why we see this technique used so frequently.

Here are some examples of what a malicious rule lets an attacker do, grouped by MITRE ATT&CK tactic:

- Persistence (TA0003): forward multi-factor authentication (MFA) codes or links

- Defense evasion (TA0005): hide emails notifying the user of anomalous account behavior

- Collection (TA0009): forward sensitive documents such as invoices, DocuSign requests, and payments

- Lateral movement (TA0008): proxy phishing emails to other users and hide the tracks

- Impact (TA0040): delete any new messages

Detecting suspicious rules

First, we’ll need to consider the building blocks for the detection. In this example, we’ll detect Outlook rules.

The logs we’ll process are M365 Exchange audit logs, specifically events with the “New-InboxRule” operation, which creates a new rule, and the “Set-InboxRule” operation, which modifies an existing rule. We then normalize the rule parameters, the source IP address, and the user’s ID.
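As a rough illustration of that normalization step, the sketch below flattens one audit record into the three things we care about. The record layout (`Operation`, `UserId`, `ClientIP`, and a `Parameters` list of name/value pairs) is an assumption about how the audit record is delivered, the sample record is made up, and `normalize_rule_event` is a hypothetical helper rather than our production pipeline.

```python
import json
from typing import Optional

# The two operations named in the text above.
RULE_OPERATIONS = {"New-InboxRule", "Set-InboxRule"}


def normalize_rule_event(raw: str) -> Optional[dict]:
    """Flatten one audit record (JSON string) into the fields the detection needs.

    Returns None for records that are not inbox rule operations.
    """
    record = json.loads(raw)
    if record.get("Operation") not in RULE_OPERATIONS:
        return None
    # Assumed shape: Parameters is a list of {"Name": ..., "Value": ...} pairs.
    rule_parameters = {p["Name"]: p["Value"] for p in record.get("Parameters", [])}
    return {
        "operation": record["Operation"],
        "user_id": record.get("UserId"),
        "source_ip": record.get("ClientIP"),
        "rule": rule_parameters,
    }


# Made-up sample record, for illustration only.
sample = json.dumps({
    "Operation": "New-InboxRule",
    "UserId": "user@example.com",
    "ClientIP": "203.0.113.10",
    "Parameters": [
        {"Name": "Name", "Value": "."},
        {"Name": "SubjectOrBodyContainsWords", "Value": "invoice;payment"},
        {"Name": "DeleteMessage", "Value": "True"},
    ],
})

event = normalize_rule_event(sample)
print(event)
```

Keeping the rule parameters as a plain dictionary means later detection logic doesn't need to know how the raw log was shaped.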

Now the event is ready to process through Expel’s detection engine.

If we alert on every raw event, our analysts will be flooded with false positives. So we need to consider what suspicious and benign rules might look like.

After digging through open-source intelligence, we found ways to tune the detection so it focuses on real malicious examples. For example, we should raise an eyebrow if keywords such as “invoice,” “payment,” “w2,” or “deposit” show up in the filter parameters, or when all emails are deleted. Over time, we tune the detection to trigger on higher-fidelity features. As our analysts triage incidents, we develop a feedback loop to improve this detection’s efficacy.
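A first pass at that tuning might look like the sketch below. The keyword list comes straight from the paragraph above. The function name `is_suspicious`, the `DeleteMessage` action label, and the idea that "no keywords plus a delete action" means "delete everything" are our simplifying assumptions for illustration.

```python
# Keywords called out in the text above.
SUSPICIOUS_KEYWORDS = {"invoice", "payment", "w2", "deposit"}


def is_suspicious(keywords: list[str], actions: list[str]) -> bool:
    """Flag a rule that filters on a suspicious keyword or deletes all email."""
    lowered = [keyword.lower() for keyword in keywords]
    keyword_hit = any(
        suspicious in keyword
        for keyword in lowered
        for suspicious in SUSPICIOUS_KEYWORDS
    )
    # Assumption: a delete action with no keyword filter applies to every message.
    deletes_everything = "DeleteMessage" in actions and not keywords
    return keyword_hit or deletes_everything


print(is_suspicious(["Invoice"], ["MoveToFolder"]))     # keyword match
print(is_suspicious([], ["DeleteMessage"]))             # deletes all email
print(is_suspicious(["newsletter"], ["MoveToFolder"]))  # neither condition
```

This is only a starting point. Substring matching on keywords will also catch benign rules (plenty of finance teams filter on "invoice"), which is why analyst feedback matters.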

Analyst decisions become valuable data points

As Expel security operations center (SOC) analysts triage new alerts, escalate incidents, or close false positives, our decisions are recorded as valuable data points. New methods and behaviors surface, presenting opportunities to improve the detection.

With a large dataset of true and false positive data points, we can make confident decisions on which parameters are the most suspicious, allowing us to adjust the severity to find evil faster.
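One simple way to turn triage decisions into severity is to measure how often each feature shows up in true positives. The sketch below does that with made-up triage history. The feature labels, the `severity` thresholds, and both function names are hypothetical and only meant to show the shape of the feedback loop.

```python
from collections import defaultdict


def true_positive_rate_by_feature(triage_records):
    """Compute, per feature, the share of alerts that analysts marked true positive.

    triage_records: iterable of (features, is_true_positive) pairs.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for features, is_true_positive in triage_records:
        for feature in set(features):
            totals[feature] += 1
            if is_true_positive:
                hits[feature] += 1
    return {feature: hits[feature] / totals[feature] for feature in totals}


def severity(rate, high=0.8, medium=0.4):
    """Map a true positive rate to a severity label (thresholds are illustrative)."""
    if rate >= high:
        return "high"
    if rate >= medium:
        return "medium"
    return "low"


# Made-up triage history, for illustration only.
history = [
    ({"deletes_all"}, True),
    ({"deletes_all", "keyword:invoice"}, True),
    ({"mark_as_read"}, False),
    ({"mark_as_read", "keyword:invoice"}, True),
    ({"mark_as_read"}, False),
]

rates = true_positive_rate_by_feature(history)
for feature, rate in sorted(rates.items()):
    print(feature, round(rate, 2), severity(rate))
```

With real triage data, the same calculation highlights which parameters deserve a higher severity and which ones mostly generate noise.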

High-fidelity patterns

By reviewing previous triage history, we can plot out the predominant features in cyber incidents. Below are some examples of patterns found in true positive Outlook rule alerts:

| Feature | Description | Examples |
| --- | --- | --- |
| Suspicious rule names | Obfuscates the existence of the rule | |
| Suspicious actions | Manipulates the state of the message | |
| Suspicious keywords | Matches on interesting messages | |
| Suspicious folder | Sends the message to an unexpected folder. Must be accompanied by a MoveToFolder action | |

Individually, these parameters may not pose threats, but when configured together they can lead to unauthorized manipulation.
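To illustrate why combinations matter, the sketch below checks a rule against all four features and only alerts when at least two are present. Every heuristic here is an assumption for illustration: the "name has no letters or digits" check, the action list, the "RSS" folder hint, and the threshold of two are ours and would need tuning against real triage data.

```python
import re

SUSPICIOUS_KEYWORDS = {"invoice", "payment", "w2", "deposit"}  # from the text above
SUSPICIOUS_ACTIONS = {"DeleteMessage", "MarkAsRead"}           # assumed action labels
SUSPICIOUS_FOLDER_HINTS = {"rss"}                              # assumed folder hint


def suspicious_features(rule: dict) -> set[str]:
    """Return which of the four features look suspicious for this rule."""
    found = set()

    # Hypothetical name heuristic: no letters or digits (also true for an empty name).
    if not re.search(r"[A-Za-z0-9]", rule.get("name", "")):
        found.add("name")

    actions = set(rule.get("actions", []))
    if actions & SUSPICIOUS_ACTIONS:
        found.add("action")

    keywords = [keyword.lower() for keyword in rule.get("keywords", [])]
    if any(s in keyword for keyword in keywords for s in SUSPICIOUS_KEYWORDS):
        found.add("keywords")

    # The folder feature only counts alongside a MoveToFolder action.
    folder = (rule.get("folder") or "").lower()
    if "MoveToFolder" in actions and any(h in folder for h in SUSPICIOUS_FOLDER_HINTS):
        found.add("folder")

    return found


def should_alert(rule: dict, minimum_features: int = 2) -> bool:
    """Alert only when several suspicious features appear together."""
    return len(suspicious_features(rule)) >= minimum_features


benign = {"name": "Newsletters", "keywords": ["newsletter"],
          "actions": ["MoveToFolder", "MarkAsRead"], "folder": "Reading list"}
malicious = {"name": "..", "keywords": ["invoice", "payment"],
             "actions": ["MoveToFolder", "MarkAsRead"], "folder": "RSS Feeds"}

print(should_alert(benign), should_alert(malicious))
```

The benign rule trips one feature (it marks messages as read) and stays quiet, while the made-up malicious rule trips all four.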

Now let’s look at some actual examples.

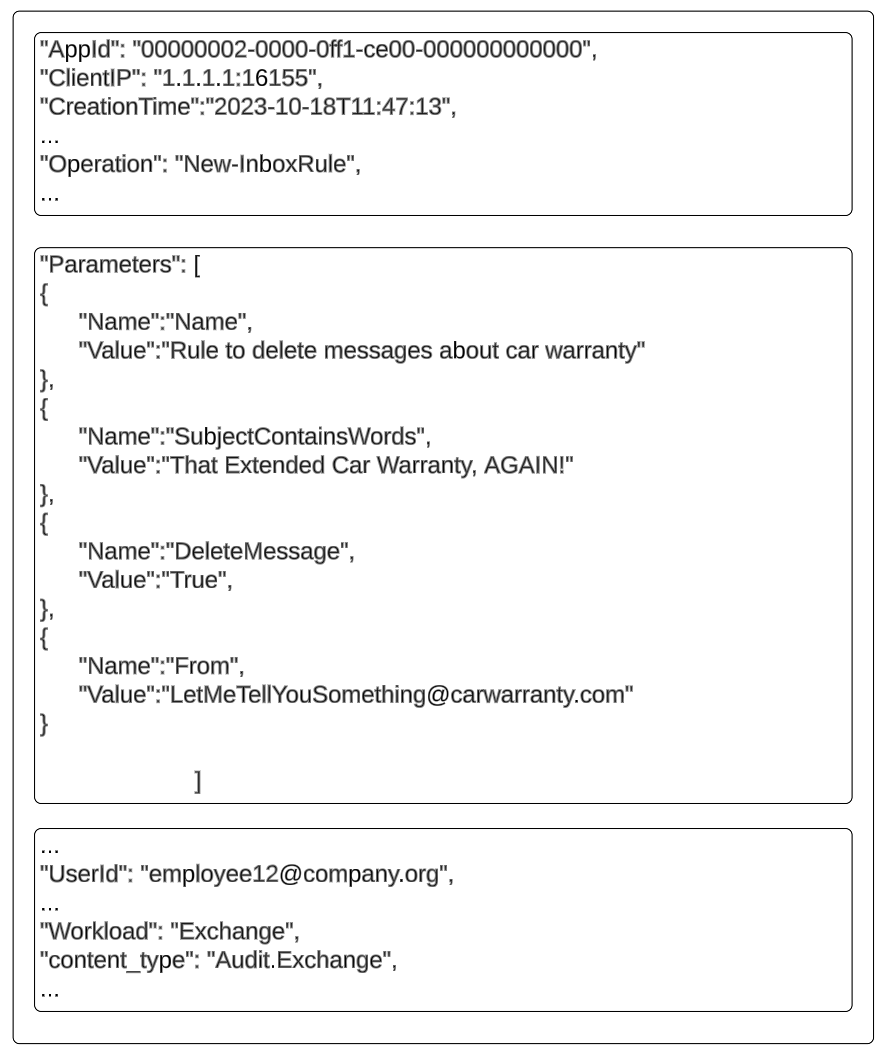

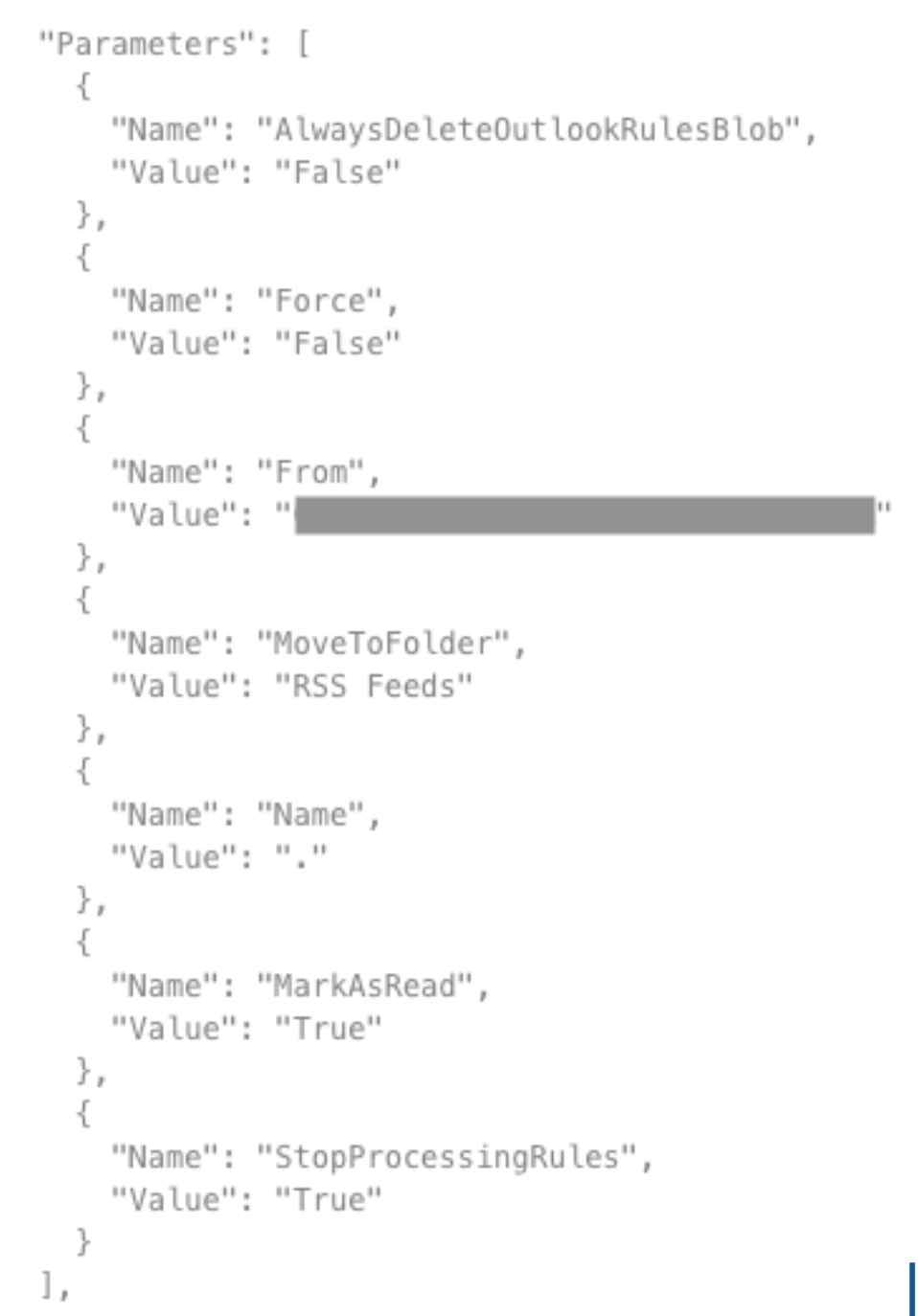

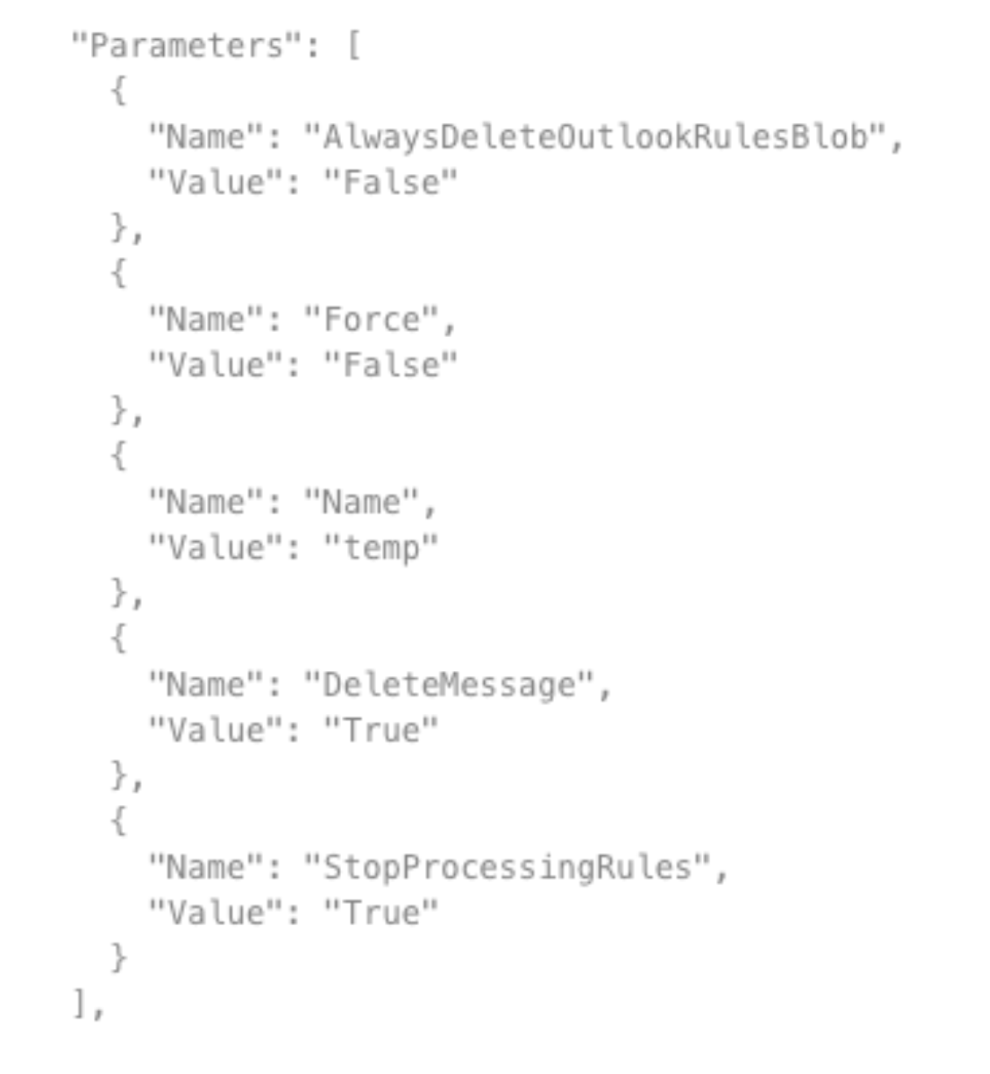

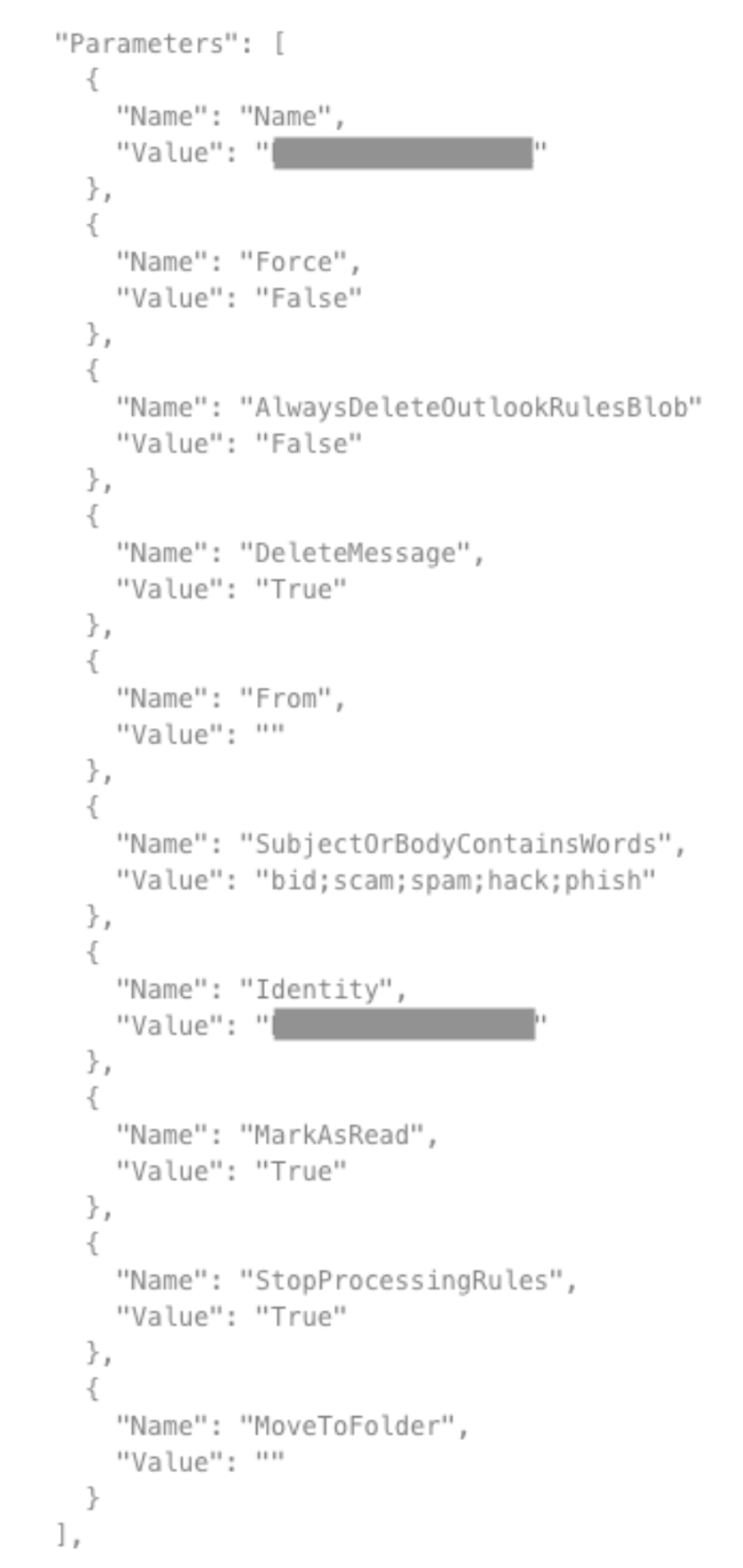

Real examples of malicious rules

To help visualize how these combinations may look in the wild, here are some real cases we found in incidents within the past year.

Hides emails in the RSS folder

Deletes all incoming emails

Destroys security related emails

Final takeaways

- Post-compromise, attackers tend to lean on inbox rules to further entrench themselves in the environment

- There are certain patterns to look for that may seem benign, but understanding how an attacker can use them will uncover a potential threat

- Single parameters alone aren’t enough to raise alarms, but combinations of them paint a more interesting picture worth digging into

For more information on some of the attacks we’ve seen recently, have a look at our Q3 2023 Quarterly Threat Report. If you have questions or comments, drop us a line.